AI Demos are Monster Movies

Every week or so there’s some new AI demo and a bunch of people on Twitter and in my group chats working themselves up into a frenzy about AGI being right around the corner.

My response is usually along the lines of “Oh neat, it’s another chatbot with some new quirks and a couple new gimmicks bolted on.” Such statements do not make me popular.

AI demos are like monster movies. Really, modern AI hype in general is one big monster movie. You never get to look at the whole monster in plain daylight; you only ever see brief glimpses of small parts of it in poor lighting. Everything else is implied, and your imagination fills in the rest. These films would be much tamer if it weren’t for the fact that the monster in your imagination is always far scarier than any silicone prop could ever be.

The big difference between AI and a monster movie is that in a monster movie, the people making the film know the monster isn’t real, and they aren’t trying to warn the public about the immense danger posed by the thing they caught on film. That is much less true in AI. Machine learning models are described and treated as black boxes, and little effort goes into understanding what they are actually doing. The AI is a neural network, so supposedly no one knows how it works, and people let their imaginations run wild with what it could be doing while avoiding learning anything about what the network really does that might kill the magic.

There certainly is some effort going into fields like Mechanistic Interpretability to approach this problem, but progress is fairly slow, and the problems I've heard people tackling in that space sound less like pragmatic, day-to-day tools and more like extremely ambitious pipe dreams that try to solve everything at once. There's likely a lot of low-hanging fruit being ignored here, and I suspect there is also an immense financial incentive not to invest in such tools, for the sake of maintaining the magic, continuing to hype up investors, and frightening the general public.

None of these AI tools have taken over the world yet, and I have yet to see one that really lives up to the hype. I remember talking with tech friends during the early days of GPT-4, when they were all claiming that this new high-quality natural language interface would suddenly make it extremely easy for anyone to get an AI to do whatever they pleased. Google would soon be bankrupt because GPT-4 was so much better at answering questions. A week later, the conversation had shifted to how difficult it was to get good answers and how prompt engineering would soon become a skilled profession. A few of them are now building AI search startups and frequently complain about how extremely difficult it is to keep the AI from hallucinating fake answers to users’ questions.

These AI models would appear far more mundane if we actually had good tools for peering into these neural networks. If every new AI model is “going to be AGI this time, trust me guys!” and none of them is yet, maybe our ideas about how progress toward better and better AI works aren’t very accurate, and maybe peeking under the hood would tell us why we haven’t gotten there yet. It’s worth remembering that many of these LLMs are trained on orders of magnitude more text than any human could read in a lifetime, and yet they still aren’t obviously AGI. Something is very obviously wrong, regardless of how people try to rationalize it.

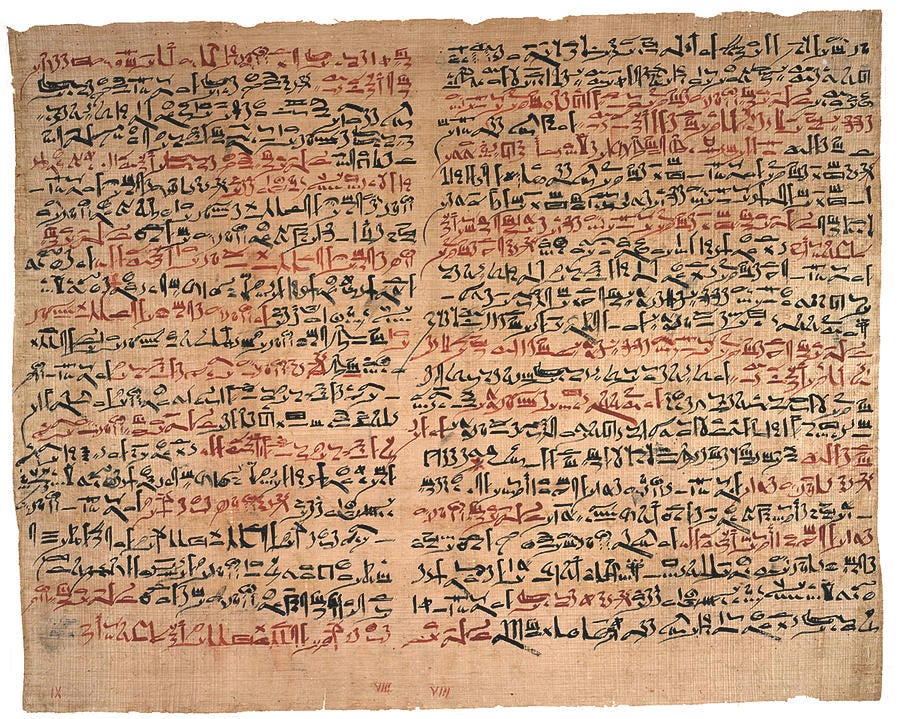

Even if we build tools that aren’t sufficient to give us a perfect picture, we could probably still piece together a great deal of what is going on. The ancient Egyptians had a pretty decent idea of what most organs in the body do (with perhaps some misunderstandings about the heart and brain) without any of the modern scientific knowledge or tools we have today.

Frankly, this pessimism about the interpretability of neural networks isn’t even unique in computer science. Something very similar happens with popular misunderstandings of undecidability. Turing’s original proof showed that no program can decide, for every possible program and input, whether that program will halt: if you assume such a decider exists, you can build a program that asks the decider about itself and then does the opposite of whatever it predicts, which is a contradiction. The broader conclusion is that a program that correctly answers questions about the properties of arbitrary code with 100% accuracy cannot exist, because such a program could be used to create logical paradoxes and contradictions.
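The self-referential trick behind that contradiction fits in a few lines of Python. This is only an illustrative sketch, assuming a "program" is any zero-argument Python function; `make_contrarian` and `always_says_loops` are hypothetical names made up for this example, not part of any library.

```python
from typing import Callable

Program = Callable[[], None]          # assumption: a "program" is a zero-argument function
Decider = Callable[[Program], bool]   # returns True iff it predicts the program halts


def make_contrarian(halts: Decider) -> Program:
    """Build the diagonal program for a candidate halting decider.

    The returned program asks `halts` about itself and then does the
    opposite of whatever it was told.
    """
    def contrarian() -> None:
        if halts(contrarian):
            while True:   # predicted to halt, so loop forever
                pass
        # predicted to loop forever, so return immediately

    return contrarian


def always_says_loops(program: Program) -> bool:
    """Hypothetical candidate decider that answers "loops forever" for everything."""
    return False


c = make_contrarian(always_says_loops)
print("decider predicts c halts:", always_says_loops(c))
c()  # ...yet c returns right away, so the prediction was wrong
print("c halted anyway")
```

Swapping in a decider that answers `True` just flips the failure: the contrarian then loops forever despite being predicted to halt (so don't call that one). Whatever rule a candidate uses, the contrarian built from it contradicts that rule, which is why a perfect decider cannot exist.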

This is often misinterpreted as meaning that such questions are completely unanswerable in all cases, which is false. Look at the two pieces of code below. Can you tell whether each one will halt? If so, is your brain actually solving a logically impossible problem, or is the problem simply not as impossible as it is often claimed to be?

```python
i = 99
while i > 0:
    print(i, "bottles of beer on the wall")
    print(i, "bottles of beer")
    print("take one down, pass it around")
    i -= 1
    print(i, "bottles of beer on the wall")
```

```python
# underage version
i = 99
while i > 0:
    print(i, "bottles of beer on the wall")
    print(i, "bottles of beer")
    print("take one down, put it back up")
    print(i, "bottles of beer on the wall")
```

The reality is that even if some programs cannot be reasoned about, many can, and the problems that are actually useful to humans can usually be modeled with fairly well-behaved programs. The harder ones often just spit out near-random noise, and are usually not worth paying attention to unless your name is Stephen Wolfram. You can build tools that look for common kinds of structure that are easy to reason about; Abstract Interpretation is one such tool. Not all valid programs will have that structure, but most of the ones you care about will! If a program proves hard to analyze, that is often a better sign that it has a bug than that it is doing something clever.
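As a toy illustration of "looking for structure that is easy to reason about", here is a checker that recognizes exactly one loop shape and decides it with a ranking-function argument. It is not abstract interpretation, which works over arbitrary code using abstract domains such as intervals; the loop shape and the `loop_iterations` function are assumptions made up for this sketch. It happens to cover both versions of the song above.

```python
from typing import Optional


def loop_iterations(start: int, step: int) -> Optional[int]:
    """Decide halting for one hypothetical loop shape:

        i = start
        while i > 0:
            ...          # body never touches i, except
            i += step    # one constant update per iteration

    Returns the exact number of iterations if the loop halts, or None if it
    provably runs forever. Every loop of this shape can be decided.
    """
    if start <= 0:
        return 0          # condition is false on entry
    if step >= 0:
        return None       # i never decreases, so i > 0 stays true forever
    # Ranking function: i is a positive integer that drops by -step every
    # iteration, so it must reach <= 0 after ceil(start / -step) iterations.
    decrement = -step
    return (start + decrement - 1) // decrement


print(loop_iterations(99, -1))  # pass it around: 99
print(loop_iterations(99, 0))   # put it back up: None (never halts)
```

A real analyzer first has to recognize that a program has this shape, which is where techniques like abstract interpretation come in; the point here is only that once the structure is found, the halting question becomes easy.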

If static analysis tools got as much attention as other development tools do, if we had as many hobbyist programmers building abstract interpreters as we have building their own languages, and if some of those analyzers gained adoption the way hobby languages sometimes do, we’d likely be living in a completely unrecognizable world, with a very different outlook on what is and isn’t possible and feasible in software development. Very likely a much more desirable world than ours.

Perhaps machine learning is in some ways a trickier problem. These models are not directly designed by humans and likely rely on many odd tricks that we don’t yet understand, let alone know how to build tools to reason about thoroughly. In other ways, though, it’s provably easier: these models are finite, they have a finite running time, and there are no infinite loops or unbounded tapes to introduce impossible problems into the math. There are some interesting ideas around viewing AI models geometrically that I suspect will prove to have a lot of merit, though they may need some time to develop. At the very least, that approach is fairly general-purpose and connects to rich, mature mathematical tools.
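To make the "finite running time" point concrete: a single forward pass through a fixed-size network runs loops whose bounds come only from the layer shapes, so the amount of work cannot depend on the input values. The sketch below assumes a toy fully connected ReLU network in plain Python; `forward` and `random_network` are hypothetical helpers for illustration, not any framework's API.

```python
import random
from typing import List, Tuple

Matrix = List[List[float]]


def forward(weights: List[Matrix], biases: List[List[float]],
            x: List[float]) -> Tuple[List[float], int]:
    """Run a toy fully connected ReLU network and count multiply-adds.

    Every loop bound comes from the layer shapes, never from the values in x,
    so the work done is identical for every input and always finite.
    """
    multiply_adds = 0
    for layer, (W, b) in enumerate(zip(weights, biases)):
        out = []
        for row, bias in zip(W, b):
            acc = bias
            for w, xi in zip(row, x):
                acc += w * xi
                multiply_adds += 1
            out.append(acc)
        if layer < len(weights) - 1:   # ReLU on hidden layers only
            out = [max(0.0, v) for v in out]
        x = out
    return x, multiply_adds


def random_network(sizes: List[int], seed: int = 0) -> Tuple[List[Matrix], List[List[float]]]:
    """Hypothetical helper: random weights for layer sizes such as [4, 8, 8, 2]."""
    rng = random.Random(seed)
    weights = [[[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
               for n_in, n_out in zip(sizes, sizes[1:])]
    biases = [[rng.uniform(-1, 1) for _ in range(n_out)] for n_out in sizes[1:]]
    return weights, biases


weights, biases = random_network([4, 8, 8, 2])
for x in ([0.0, 0.0, 0.0, 0.0], [1.0, -2.0, 3.0, 100.0]):
    output, ops = forward(weights, biases, x)
    print(len(output), ops)  # 2 112 for both inputs
```

Both inputs cost exactly 4*8 + 8*8 + 8*2 = 112 multiply-adds; there is no input that can make this code loop forever, which is the contrast with general programs drawn above.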

I’m really not afraid of some scary AI monster. Maybe there will be things worth worrying about someday, but it seems much more likely to me that everyone is just lost and scared and seeing things. If we open the black box, if we turn on the lights, if we take off this monster’s mask, I think we’ll find it’s a lot less scary than we imagined.

I’m trying to publish articles more regularly now, even if they’re a bit shorter. I have another article mostly finished that should hopefully be out this weekend, and a couple more in the pipeline for next week. Thanks for reading, and stay tuned!