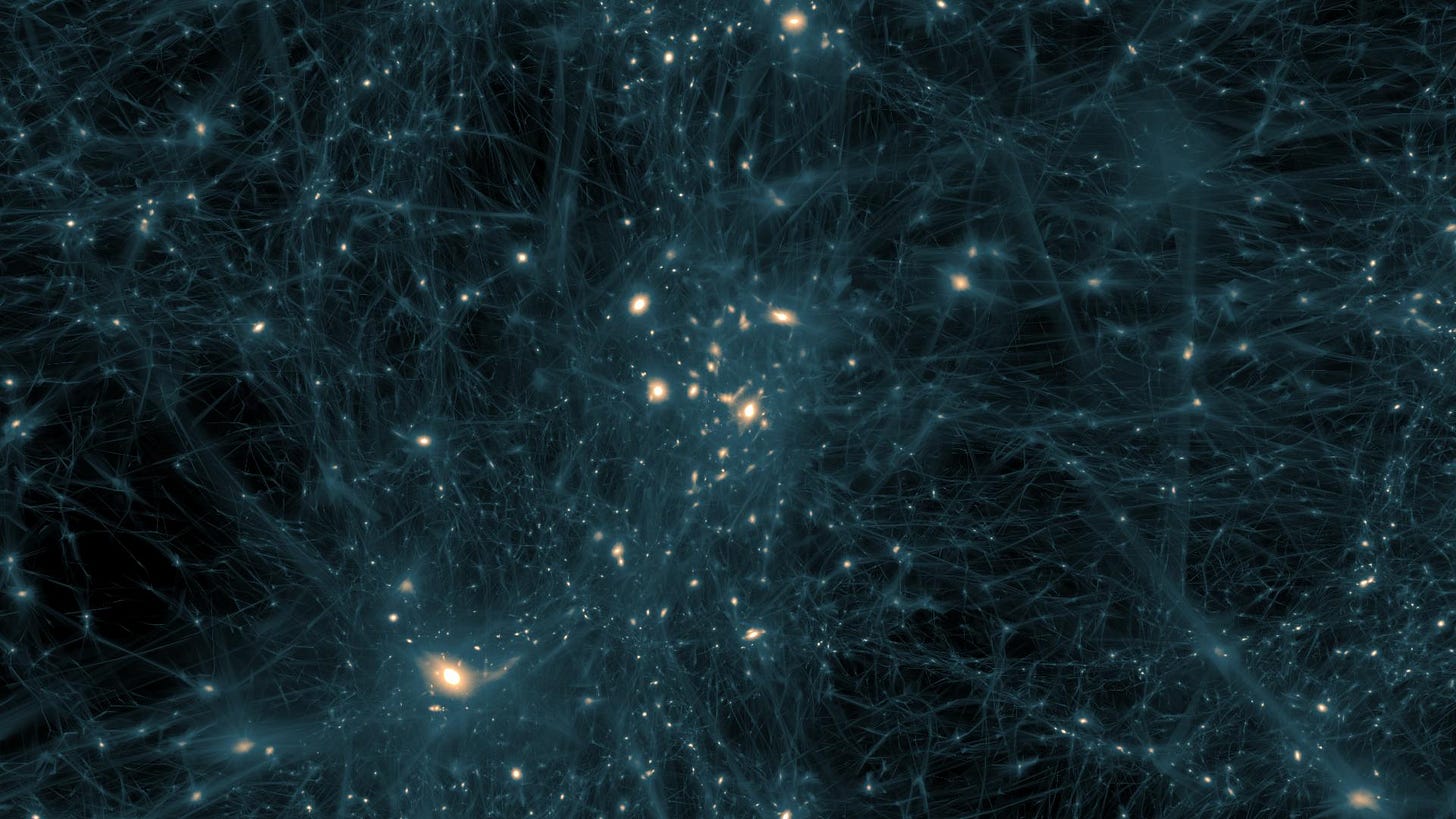

Algorithmic Dark Matter

In physics, Dark Matter is a mysterious gravitational influence in the universe - perhaps a particle, perhaps some anomalous property of gravity. We cannot currently measure these particles or forces directly, but we can infer their existence indirectly through the effects they have on the matter in the universe that can be measured.

Computer science is a rather strange field. In many ways, Turing machines, circuits, and combinators hardly resemble any familiar mathematics at all. Yet we occasionally see hints of deeper structure that does appear more familiar. For example, one of the first major proofs in applied mathematics that required high-dimensional geometry was a proof of Claude Shannon’s equation for the maximum capacity of a noisy channel, something deeply fundamental to information theory and computation.

More modern examples might be how topology has gone from being an abstract sibling of geometry with few real-world applications to being an extremely powerful tool in data analysis; it turns out that thinking of datasets as high-dimensional structures is an incredibly fruitful perspective, and topology provides many useful tools for making sense of this structure without getting lost in fine details. Another example worth mentioning is that nearly all of modern cryptography is based in group theory, a branch of mathematics originally developed for understanding symmetry.

Then there are some other examples I’ve mentioned in the past:

We are getting hints here and there that there is a much deeper, likely more natural and more geometric way of understanding computation, one with a much more obvious connection to the vast space of mathematical ideas we’ve spend millenia developing.

And even then that’s just the tip of the iceberg. That’s just computation that we’ve discovered so far. There are in fact many loose threads, signs of vast branches of computer science that are either undiscovered or underexplored. This is Algorithmic Dark Matter, algorithmic tools that we can infer must exist, but that for various reasons are currently largely beyond human comprehension.

I’d like to discuss a few of these loose threads here.

Superoptimization

The job of a compiler is to convert human-readable code into machine code that the computer can more directly understand. The job an optimizing compiler is to not only do this, but to make sure that the resulting machine code does its job as efficiently as possible, even if it does not directly correspond to the original code.

The way this is traditionally done is with a combination of handwritten analysis passes and rewrite rules - check for certain properties and assumptions that can be made about the code, and then apply whatever transformations can be safely made given those assumptions that [hopefully] make the code faster.

A trivial example might be converting the code x * 2 into x + x, as addition tends to be much faster in hardware than multiplication.

The downside of this approach is that any transformation that can be applied to your code that is not directly accounted for by the compiler developers simply will not be applied, potentially leaving a significant amount of inefficiency in your program.

One alternative approach, albeit one that has been largely relegated to academia, is called Superoptimization. There are many different variations on this approach, but the general idea is to treat code optimization as a search problem, attempting to replace a piece of machine code with the fastest possible sequence of CPU instructions that can be found that does the same thing.

There are some fundamentally hard problems involved here, and in practice you can never really get something truly optimal (especially when loops are involved), but you can pretty reliably get code that not only beats state-of-the-art optimizing compilers like LLVM and GCC, but even can beat handwritten assembly by a significant margin.

Superoptimizers have a notorious quirk however; they often produce code that is utterly bizarre. The reason why they produce code faster than what any human can write is because they use techniques no human would ever have thought of.

The most efficient code humans can think to write is limited by the techniques we have so far imagined and learned. If someone discovers a new technique for optimizing machine code tomorrow, many existing code fragments can be replaced by more efficient variants using this new technique. What stops that code from existing is merely the limits of human understanding.

A superoptimizer ignores this limitation entirely, presenting you instead with the most efficient machine code it could find. If that requires sticking arcane bitwise operations into the middle of your floating-point code, it will do so. It doesn’t care at all whether or not any human who ever lives will ever have the slightest idea of why it works, it just spits out the most efficient code it finds.

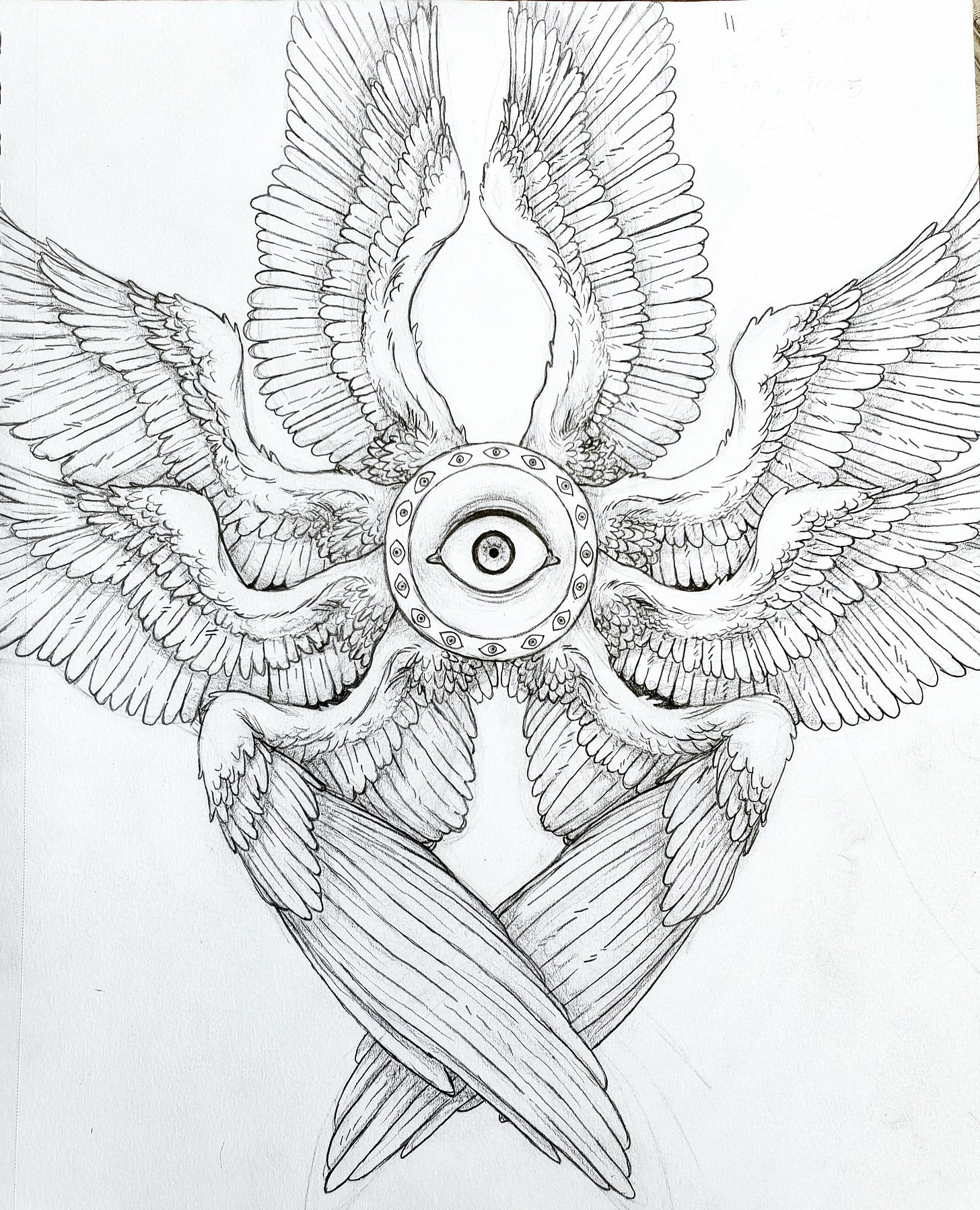

This reminds me of Biblically Accurate Angels - while some angels referenced in Abrahamic scriptures do resemble the typical depictions of winged humans with robes and halos, there are occasional mentions of other divine beings that more closely resemble something from a Lovecraft story. Many memes have been made in recent years around this - bizarre beings that are difficult to comprehend, covered in wings, eyes, wheels, and flames, or at least presumably features that an ancient person might mistake for such things.

The implication here that even ancient people understood is interesting - that the closer something gets to perfection, the more counter-intuitive and harder to comprehend it gets. This is certainly what we observe with superoptimized code.

The NP Complexity Anomaly

As I’ve discussed in previous articles, the P vs NP problem is not only a difficult, unsolved problem, but it is a truly bizarre problem. Many efforts to solve it have simply ended up instead producing proofs stating that our most powerful mathematical tools are useless against it. It is also extremely fundamental, close to the deepest parts of mathematics, logic, and computer science, and so its implications extend absolutely everywhere.

Many common problems in mathematics and computer science can be boiled down to problems most naturally solved by a Nondeterministic Computer, the subject of this mathematical puzzle. We have algorithms for solving such problems, but the ones that are easiest for us to reason about are not very efficient, and the most efficient known algorithms are very difficult to reason about.

When I say “difficult to reason about”, I don’t mean that the code might be hard to understand - often it’s really not much more than a fancy depth-first search. Rather, the strange behavior comes from interactions with a complex mathematical space whose structure we really don’t understand.

For example, these algorithms are often considered to have exponential time complexity, which is rarely considered to be practical. With such exponential growth in difficulty, it would seem reasonable to say that solving small problems with more than a couple dozen variables should be hopelessly impossible, even for the most powerful supercomputers. Even quantum computers give us little hope here.

Yet, many “real-world problems”, often with thousands or even millions of variables, can be feasibly solved in practice. Relatively tiny, hopelessly hard problems certainly exist, but what determines whether or a problem is easy or hard seems to have very little to do with its size, and instead some other factor that is at the moment largely unknown. What exactly constitutes a “real-world problem” is not yet formally defined, and so far any attempts to formally define it have always resulted in counterexamples.

These algorithms are not some esoteric set of problems either, unrelated to practical concerns. They in fact show up constantly, often in the form of optimization problems, or related to the behavior of complex systems (code correctness, etc.). We largely don’t address these problems not because they don’t matter, but because we're often scared off by our assessment of how difficult they are, an assessment that is blatantly full of holes.

When we do address them, we often just turn to heuristics and other “good-enough” solutions which often make such problems more tractable. These certainly have their place and will continue to be widely used, but I expect they’re largely distracting us from many deeper mysteries that could open up new tools we currently cannot imagine.

There are some interesting interpretations of algorithms for these problems that involve things like data flowing through circuits in unusual ways - flowing from outputs back to inputs for example. There are some interesting similarities to quantum computing with properties analogous to superposition and entanglement. This all hints at strange new algorithms and techniques.

I plan to write much more on this subject in the future, though getting into more specific details requires some very technical deep-dives.

Neural Computing and Error-Correcting Computation

Arguably the best places to look for finding bizarre new branches of computation is at systems like biology. There are many technical feats that we cannot achieve with even our best technological efforts, things we would likely have labeled impossible if it were not for the fact that our own bodies frequently serve as existence proofs that such feats are in fact possible.

One good example of this is the brain, which frequently accomplishes tasks that our best software tools cannot yet match despite decades of effort (general intelligence), as well as tasks that we can only replicate with deep learning systems that we largely do not understand (object recognition, etc.).

More specifically, we can look closely at the principles that seem to govern low-level brain circuits. When we do so, we often wind up with strange systems that seem to in some ways vaguely resemble familiar tools such as error-correcting codes, but applies them in very unfamiliar ways.

While the union properties of Sparse Distributed Representations offer some fascinating possibilities - something I plan to go into much more depth on once I can build some visualization/simulation software that can truly do it justice - perhaps a simpler example might be the unusual error-correcting properties that seems to be a fundamental part of neural computation.

Traditionally, error correction is done on data rather than computation. After information is generated, it is reformatted to add extra redundancy in a way that cleverly makes certain types of common forms of digital corruption easily fixable. Error correction on the computation itself is never really considered, and data formatted for error correction always is converted back to its original form before any further computations are performed.

In the brain, we can mathematically model neurons like bubbles in a high-dimensional space, a very familiar model to anyone who’s delved deep enough into error correction. The actual state we are encoding is the center of the bubble, and any noise introduced is a deviation from the center. If we receive an input that doesn’t lie exactly at the center of such a bubble, we know some error has occured, but we can also easily pull the error back to the closest bubble center to correct the error to its most likely original value.

The brain seems to do something much like this error correction process in every operation. It also tends to break many of our traditional rules, such as keeping these bubbles from overlapping, which seems to be a big part of how it’s able to make such a system flexible enough to compute with rather than merely storing and transmitting unchanging data.

One potential application of this, assuming we humans can not only learn these techniques well enough to understand them, but well enough to engineer with them, would be in making much more efficient computers; existing transistors are still hundreds of atoms across, and far from fundamental efficiency limits.

Smaller transistors can be made, but often run into problems caused by the quantum tunneling of electrons through gates, which increase the odds that a transistor will produce an incorrect result. With modern chips with tens to hundreds of billions of transistors flipping billions of times per second, even extremely tiny odds of failure could crash a computer within seconds, necessitating transistors much larger than theoretically necessary.

If computers were capable of tolerating noisy gates however through some type of error-tolerant logic, computers could likely be made that are significantly more efficient than existing machines.

Deep Learning and Geometric/Topological Computation

While there are many capabilities of the brain that human engineering cannot currently match, there are also many that fall into a strange middle ground. We do have tools that can replicate some of the capabilities of the brain, but many of these tools are inscrutable black boxes that we ourselves largely don’t understand yet.

In terms of our ability to engineer such capabilities, tools like Deep Learning are certainly helpful. In terms of understanding the principles behind how such things are actually accomplished, and reasoning about how they work and where their limits might be, we are largely clueless at this point.

This doesn’t have to be the way it is though. In fact, deep learning is full of things we do understand pretty well, such as calculus and linear algebra, and there’s good evidence that it’s deeply connected to many other well-studied subjects, such as guage theory, group theory, algebraic topology, and mutual information theory.

Existing efforts to reverse-engineer deep learning are often also well-suited to really interesting visualizations as well.

If we can better understand the mechanisms being modelled by these artificial neurons, such knowledge would likely translate into more reliable and trustable neural networks, could provide more efficient ways of training such networks, and could perhaps inspire entirely new types of algorithms and computational techniques that could be used in more traditional programming contexts.

A deeper understanding of DL would also help us better navigate societal ramifications of the technology - we know right now that it’s capable of a lot of things, but we have very little of an understanding of what it isn’t capable of. This results in wild speculation and nonsense flooding public discourse, along with the public discourse equivalent of screaming “fire” in a crowded theater that is the fearmongering about killer AI.

Biochemical Computing

Synthetic biology and biotech are fields that seem to move exceptionally slowly. Despite having sequenced genomes and now having apparently cracked protein folding, progress is still rather slow. We largely don’t understand biology, let alone how to influence it with anything but crude chemical tools with long lists of side effects.

I have a suspicion that, much like the brain, biology is using some bizarre computational trickery that we’re simply not familiar with, which is the real limit on our understanding. This is certainly contrary to what I’ve heard from people working in biotech - their view is that there are few if any general principles behind biological systems, that every rule has a counterexample, and that these systems are just fundamentally hard to understand.

One simple observation I’d point out though is that the complex behavior of biological systems are all at the end of the day merely chemical reactions. Such reactions tend toward equillibrium, and computing such equillibria tends to be computationally hard in the general case. Often NP-complete or harder.

Wait, NP-complete?

Biological systems not only have to solve many real-world problems that turn out to be in NP and related complexity classes - optimization problems with resource allocation and body structure, game-theoretic behavior strategies, etc., but its most fundamental tools for implementing the logic behind this play by the same rules. Rules that, as we discussed earlier, are currently largely in a theoretical blind spot when it comes to human understanding.

If we attempt to use computers to find optimal structures for physical objects that need to handle certain stresses, we often wind up with very organic-looking objects, resembling bones or trees. This “organic-looking” property often comes with a great deal of complexity. What’s more likely, that biology is hard-coding every little detail of such structures in its genome while still fitting it into often just a few megabytes of non-junk-DNA and having plenty of room left over for all the other things it needs to do? Or that it’s encoding an optimization algorithm for computing such structure on the fly?

My suspicion - and I’d be very shocked if this isn’t true - is that biology’s bread and butter consists of these dark NP algorithms implemented by a chemical system, evolved specifically so that the equillibria of such chemical systems correspond directly to solutions to the hard problems biological systems face.

Further, these computations will be largely continuous due to dealing with chemical concentrations more often than discrete bits. I also wouldn’t be surprised if the harsh and chaotic nature of the world has also caused these systems to evolve self-correcting computations similar to that of the brain.

All of this also leads me to the conclusion that the techniques that drive biological systems are likely not just relying on a lot of Algorithmic Dark Matter, but on a combination of multiple different types of Algorithmic Dark Matter that I’ve discussed so far. It could certainly be that biology has even more tricks than this.

It could certainly be that there are few if any real unifying principles behind biology, but biology heavily using Algorithmic Dark Matter likely doesn’t make it any easier on us. I have no doubt that illuminating some of this dark computation with more research would at the very least make the vast range of biological trickery we must master to advance biotechnology much easier to comprehend.

Quantum Computing

While I’m rather bearish on quantum computing hardware - something to be discussed in depth another time - there clearly are new, powerful computational tools that are only possible given quantum superposition and entanglement.

It’s not even clear we need working quantum computers to find valuable new tools here. Classical computers can simulate quantum algorithms just fine - they’re just slow. However, much like aforementioned NP problems, they often can be much faster in practice than in theory.

Most quantum computations are best represented as operations on exponentially-large matrices - however, there are certain regularities to these matrices, as well as the fact that they are often very sparse matrices where most terms are zero, terms that therefore need to be neither stored nor operated on.

Not only are these operations faster in practice than they might at first appear, but there are many constraints quantum computers have - for example not being able to observe the quantum state without collapsing it - that a classical simulation of a quantum computer is completely immune to, providing even more degrees of freedom.

The result? Not only is there a lot of unique algorithms specific to quantum computers, but there are many variations unique to classical simulations on quantum computers. While this might not seem the most useful, the interesting ideas in the quantum computing space so far might set us on a pathway toward some very peculiar algorithms and new techniques for solving a variety of hard problems.

Not only that, but quantum systems are notoriously unfamiliar - how many problems are out there that we haven’t realized yet could be solved with such tools?

What Else is Out There?

These are the most obvious loose threads to me, though I’m certain this is truly only the tip of the iceberg.

Each of these seems to imply the existence of an entire paradigm of computing beyond merely the form of mundane classical computing that we humans tend to spend most of our time playing in.

To continue one of the points I started this article with, computer science is a very odd branch of mathematics, though one full of hints of hidden connections to more conventional mathematics. I suspect the computer science of the future will look extremely different from that of today, and that the field we currently know is a mere accident of history full of ideas that will seem contrived in retrospect.

Our models of computation are also largely a product of exactly how we understand the world around us, and how we in particular reason about it. To some extent that’s probably a byproduct of how our brains work, but a large portion of it is also likely a byproduct of culture. Our tools largely shape the way we think, and our mathematical and computational tools likely have their own effects like those proposed by the Sapir-Whorf hypothesis.

If existing computer science largely reflects the human mind and our current cultural sensemaking tools, what effect would radically changing our understanding of computation have as it ripples back? What happens when we uncover and illuminate all this Algorithmic Dark Matter, exposing a vast range of new paradigms of computation capable of things we currently can barely imagine? How does that influence our thought?

I hope you enjoyed today’s article. I’ve generally been hinting at these ideas for a while, though I figured I needed an article to directly address it.

I’m planning on putting an article out soon on quantum/reversible computing, as well as an article on interpreting human social systems from an information theory/cryptography perspective, with some potential applications for addressing some modern social problems, and a tangentially related article discussing why humans tell stories. No promises on when or in what order though, articles come out when they come out.

I also want to do a series of articles soon to provide intuition for the structure of NP-complete problems and algorithms for efficiently solving them. Some of this will involve some perspectives I myself have found in my own work. I also intend to continue the Neural Computing series, but the next installment of that will require me to write a lot of simulation and visualization code, so that will take a while.

I also am probably overdue for another Bzo dev log, and there’s a decent amount to report there.