Bzo Devlog #3: Euclidean Programming, Interactive Compilers

In case you’re new here, some background on this project:

Correctness

Correctness is an interesting problem in programming, but one that often is not well-defined. Sure, different classes of behavior can be labeled as "bad" and certain mechanisms can be built to avoid them. Many types of correctness just boil down to "make sure X doesn't happen"; X might be an exception, or out-of-bounds memory operations, memory leaks, etc.

Entire languages are sometimes built around these kinds of correctness problems. Rust attempts to prevent many types of memory problems with minimal runtime costs (though Rust developers do understate or at least underestimate opportunity costs when evangelizing). Of course, Rust's borrow checker takes a lot of getting used to and produces a lot of friction, and as soon as you have a correctness problem that doesn't easily reduce down to something the borrow checker can handle, Rust stops helping.

Some weird functional languages have more elaborate approaches to correctness, using type systems based on refinement types or dependent types. The basic idea there is that you may have a variable X that's an int, but there's some decoration on top of that type that says that it's an {int | x > 0}, or an int that's constrained to always be greater than zero. If you have a function that assumes that one parameter won't be negative, this is a type system that's sufficient to handle it. You can prevent divide-by-zero errors in a similar way. If you can apply such constraints across multiple variables, you can handle much more complex forms of correctness, as is done by tools such as Abstract Interpreters.

Unfortunately, if you want to be able to provide such constraints via compile-time type checking, you need something much more powerful than most type systems. This is especially true if you want the constraints you decorate your types with to be more complex than mere integer intervals. Very quickly, proving that these constraints hold requires full-blown mathematical proofs. Producing such proofs automatically is possible, but it's generally exponential time and can slow down compilation times on already-slow-functional-compilers by a lot. Luckily, once a proof is found it can be stored to avoid such expensive searches in the future (assuming the code doesn't change enough to break it). The other alternative is having the programmer write such proofs themselves.

I don't think it's strictly impossible for a mainstream programming language to get away with requiring developers to write formal logical proofs that their code is correct, but doing so would require some creative approaches that I don't see any signs of in existing languages. Maybe some of the interactive parts of Idris and similar languages are a small step in the right direction.

I've personally spent a little time building my own mathematical proof assistant as a side project (it's far from usable right now) - the basics of how such tools work aren't too hard, but I've so far found getting used to existing tools like Coq and Lean to be a frustrating experience. Not only that, but if I'm going to mess around with computational tools for building mathematical proofs, I'd prefer if those tools actually leveraged computation in interesting ways, such as providing visualizations, simulations, and proof synthesis as parts of the basic toolbox. Alas, I haven't seen any projects like that for existing proof asistants that impress me, so if such tools are going to exist it looks like I'd have to build them myself eventually. In that case, building the whole stack myself just seems like the easier option.

Luckily, there's some overlap between my plans for Bzo and what would be necessary to build such a proof assistant, so I don't think such an effort would be a waste at all; quite the contrary.

Euclidean Programming

Perhaps the solution is that we need a different approach to correctness. Increasingly elaborate type systems are closer to epicycles than a real viable solution. We should try to break out of that paradigm and find a different approach.

One observation that we can make is that type systems are very tied to the existing abstract syntax tree; adding types to code doesn't change the structure of the code, but rather just adds a layer on top, decorating each variable, expression, function call, etc. with a little bit of extra data. This is something that is legitimately useful for solving a wide variety of problems, but for more general correctness problems that involve complex relationships between many different and distant pieces of code, it’s hard to see how it could be a natural approach.

Let's take a brief look at Classical Mathematics, such as Euclidean Geometry. This is a very old branch of mathematics - one of the oldest - and one that is very heavily focused on proving statements. Many of the insights into this process that it pioneered - the use of axioms, etc. - are now the foundation of modern mathematics. It's also worth noting that Euclid lived 2500 years ago, in a world where people understood the world in a largely very different world than us. We may be influenced by them to a large extent (or at least, influenced by the tiny proportion of their ideas that have survived the millenia), but reading a few actual texts from the time quickly makes it clear that it was in many ways a very unfamiliar world to our own.

Unfamiliar places are often the best places to look for inspiration.

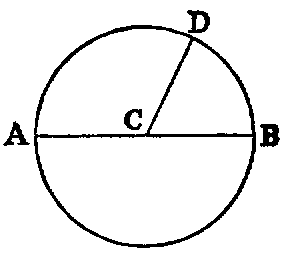

Euclidean Geometry concerns itself with points, lines, circles, angles, and polygons, embedded on a plane. It also provides a small list of simple inferences that can be made, those being the core five axioms, but also a few other "common notions" such as the transitive property of equality and some basic rules around adding and subtracting angles.

Proofs often start with some drawing of the shapes we want to prove our claim on, but end covered in other lines, circles, and other mathematical scaffolding. The proof is from one perspective a sequence of basic inferences, but in another way is an additional structure layered on top of the original problem that has the property that there exists a tree of inferences, each of which follows in a simple way from a combination of previous inferences and parts of the problem's definition.

I personally like to phrase many different problems as a form of infrastructure. I find that I get a lot of mileage out of such framings. Not in the sense that we need some kind of government program to fund it, but in the sense that many complex problems are solved in the real world not by some pure exponential brute-force search, but by building a form of scaffolding around previously-found solutions to gradually map out the structure of a complex space. Human knowledge is built on top of old knowledge in something akin to a big DAG.

In our case, we're trying to build up knowledge about a computer program. So perhaps rather than force this knowledge graph to conform exactly to the structure of the existing code, we allow it to take its own form.

At the current moment, this is largely a lot of speculation, though speculation that is based on some of the most pervasive properties of mathematics going back thousands of years. It’s also something that I hope to actually experiment with over the next couple months, and I’ll report back in a future devlog on how this experiment turns out.

Beyond the paywall, I’ll discuss some of the progress I’ve made on Bzo over the past few months, involving compiler interactivity, parsing, as well as what this paradigm of Euclidean programming may look like in practice, assuming the experiments work out.

Keep reading with a 7-day free trial

Subscribe to Bzogramming to keep reading this post and get 7 days of free access to the full post archives.