Geometric Constraints on Computing

It’s convenient to think of computing as operating in some ethereal realm, disconnected from physical reality. Cyberspace maybe. This of course is false, and the physical reality underlying computing plays a significant role in constraining computation.

I’d like to highlight a few physical constraints that apply to computation. Modern microprocessors - CPUs especially - are hilariously wasteful when it comes to how they use transistors, and as a result contain many other features that limit performance long before many of these limits become relevant to their full extent. As Moore’s Law grinds to a halt and further performance gains become dependent on squeezing out these inefficiencies, these constraints will become increasingly more relevant and more visible.

Distance is Latency

To state perhaps the most obvious, the speed of light is finite. It may seem very fast, but is much slower when you're operating at gigahertz speeds. Sending signals a few millimeters across a chip can easily take several clock cycles and sending signals across a CPU motherboard to RAM can easily take hundreds. Modern CPUs attempt to execute many instructions at a time, even on a single core, and so waiting on a piece of data that's inconveniently far away will regularly waste thousands of instructions worth of work, forcing the CPU to stall and patiently twiddle its thumbs.

How far data must travel and how many possible locations it can be tansported to also impacts power consumption. In some cases such as fiber optic communication this may be negligible, but optical communication within a microprocessor has extremely limited practicality and passing electrons through wires with capacitance and resistance depedendent on their size is the regular way in which on-chip communication is done. If a piece of data is being routed between locations, and there are two possible destinations, some amount of computation must also be performed to send it along the correct path, and larger numbers of possible destinations increases this cost further. Extremely flexible circuits that can shuffle data to an extremely wide variety of locations will generally be much less efficient than circuits with more limited and hard-coded paths.

Volume and Entropy

Also obvious, storing information takes up space. This goes for memory cells, but also goes for logic. A circuit, to perform some computation, must store some number of inputs, some number of outputs, and the states of some number of intermediate gates and wires that usually add up to something much larger than the inputs and outputs combined. Plug your inputs into a circuit and the gates and wires collectively navigate a large state space, gradually walking their way toward a stable equillibrium state from which the output can be easily derived. All of this entropy must be embedded in physical space.

To combine this point with the previous one, this happens to mean that there is a physical limit to how much memory and compute can be accessed within a certain amount of time; only so many memory cells and circuits that can be reached by light or electrons within a certain time limit. If you wish to push the physical limits of computing, you may have to consider tradeoffs between memory and compute resources and the time it takes to evaluate. Accessing more memory or greater compute resources necessarily requires more circuit volume, and will necessarily take greater amounts of time to propagate signals to every destination they must go to to compute a solution.

Having a greater deal of certainty about what you're looking for can narrow this; this is what caches do. By relocating frequently-used data to be physically closer, or on a larger scale physically sorting data based on the expected likelihood it will be used, average latency and travel distances can be greatly reduced. How well this works is of course highly dependent on how good you are at judging future usage patterns, which can be extremely dependent on the program. A program that pulls random items from a large hash table is going to have very different limits from a program that relies heavily on a few small data structures or consistently takes well-worn and predictable walks through its data.

Caches are also fundamentally driven by heuristics, and what heuristic is best is often dependent on the particular application. More complex heuristics also of course require more compute and memory, and therefore are working against you when pushing limits. Modern CPU caches try to aim for heuristics with the widest applicability, but certainly would be inferior to something that at least has the option to include a bit more information that may be provided by the compiler or programmer. Tracking usage of individual cache lines like modern caches do creates overhead in the form of additional entropy that must be stored, lowering memory density. Locating memory based on its "address" rather than its physical location also creates additional indirection; the cache must not only account for every possible arrangement of bits we wish to store in it, but also must simultaneously handle every possible physical arrangement of that data that the cache supports, and all the entropy required to encode that.

All in all, the physical layout of data in space matters tremendously.

Geometry of Parallelism

A small and unimpressive circuit that is sufficiently flexible can perform computations as elaborate as a much larger circuit, simply given more time. Given N bits that exist over T time steps, there are 2^(N*T) possible states. Doubling the core count expands the state space just as much as running the same cores for twice as long. Encoding a larger circuit to be emulated requires additional entropy in the form of CPU instructions and instruction memory, which must also be stored and regularly fetched.

However, suppose we have two tasks we can run in parallel. If task A takes N time steps to evaluate and task B takes M time steps, running the two tasks on a single core should ideally take N+M time steps (ignoring aforementioned factors like memory latency). If we have two cores, we know that these both take up physical space and cannot occupy the same location. Sending a message from one to the other takes time, dependent on how far they are apart. If we decide to send task B to another core, the round trip of communicating with that core should be less than N time steps or else it will take longer to send B off to run in parallel and wait for the result to come back than it would be to simply evaluate A and B sequentially.

If we have many different tasks with complex dependencies, perhaps we have some flexibility in latency with regards to N and M; how quickly these two specific tasks are evaluated may depend less than the sum total time it takes to evaluate some thousand other tasks as well. On the other hand, it's also perfectly possible that there are bottlenecks in the dataflow of our program and some critical path between N and M does matter. Different programs behave differently.

Programs also often consist of many different abstractions, with big functions calling smaller functions, and those functions calling yet smaller functions. Any of these functions, all of them, or perhaps none of them, may contain some opportunities for parallelism. There is potential for parallelism at all scales of a program; zooming into functions and the functions they call, and the functions those functions call, etc. leads to you to smaller and smaller tasks and sub-tasks that may be shuffled to different cores or execution units. Parallelism fundamentally has a fractal structure, and how much parallelism is available at each scale impacts how far tasks can be sent across a chip or a data center before latency costs defeat any benefits.

Memory locality also matters; it may in fact be much better to send a task to another core if that core is located closer to relevant memory. How much this matters depends on the volume of memory that must be moved around and how far it must be moved.

Curvature of Computation

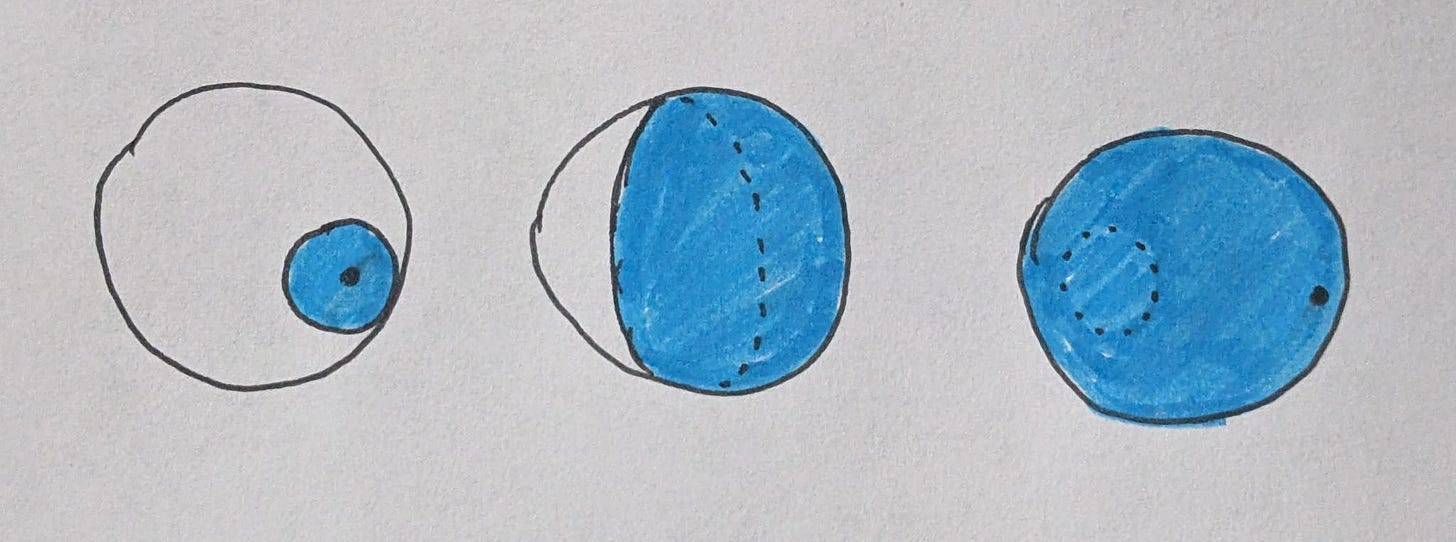

Not all spaces are flat, some spaces have curvature. The three main types of curvature are positive (elliptic/spherical) curvature, zero (euclidean/flat) curvature, and negative (hyperbolic) curvature. One important property of these spaces is that the volume within a certain radius is dependent on their curvature. In flat, euclidean space, the volume of a circle or sphere is dependent on the radius to the power of the number of dimensions; r^2 for 2D, r^3 for 3D, etc.

Pick a point on the surface of a sphere however and ask how much surface area is within a certain distance from that point. It increases up until the distance is equal to a quarter of the sphere's circumference. Past that point, its rate of growth slows until the distance is equal to half the sphere's circumference, in which case every point on the sphere is within the distance. Hyperbolic space, even stranger than the other two, sees volume increase exponentially as the radius is increased.

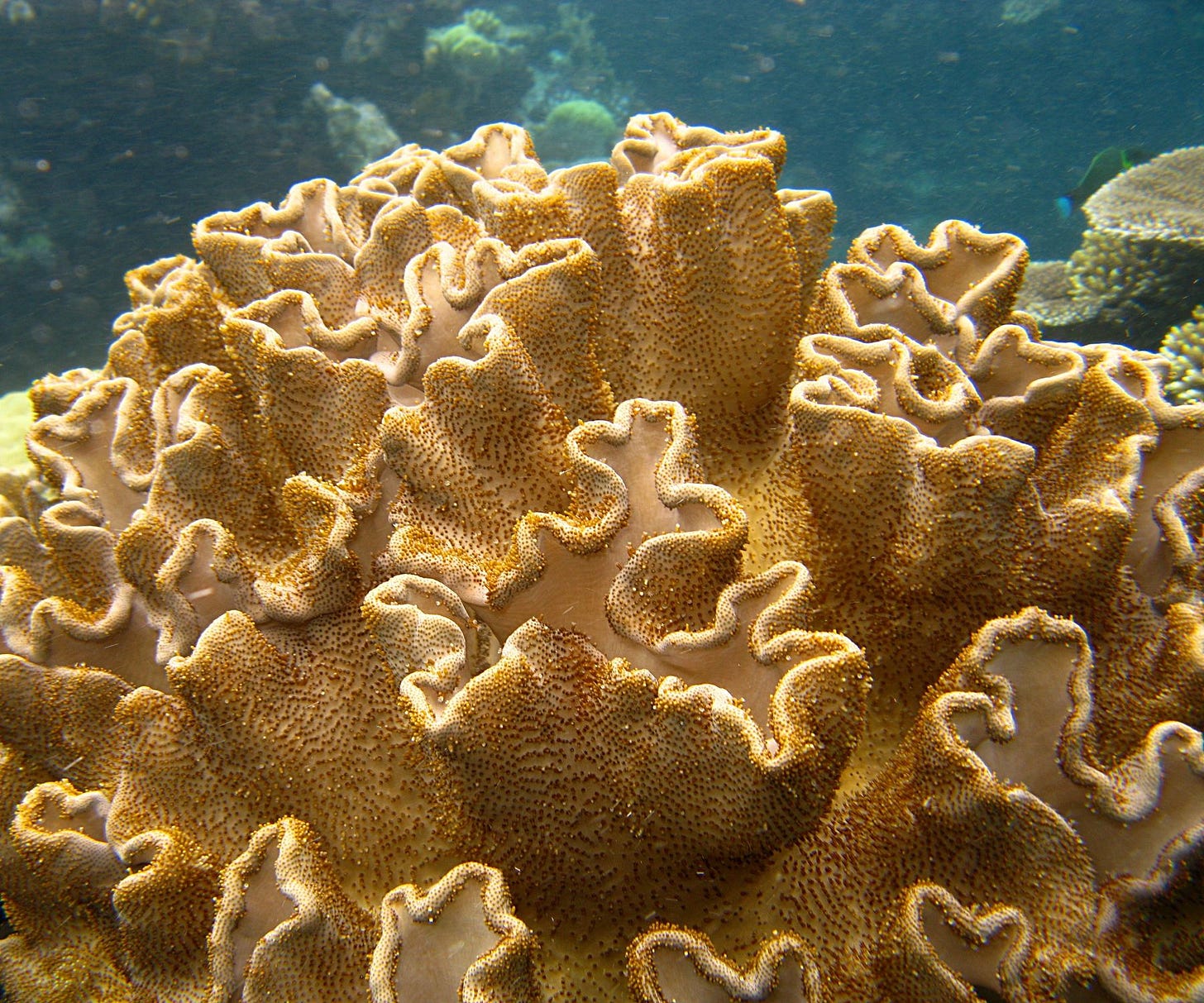

Why this matters is that it affects the relationship between volume and distance on a chip. I don't expect we'll be getting real spherical or hyperbolic computers any time soon, but many computations we perform on the chips that we do and will have are in a certain sense curved. A tree data structure is in many respects a discrete form of a hyperbolic space, with an exponentially large number of nodes accessible within a given number of hops. While it might be convenient to think of a tree as always requiring logarithmic time to locate a node, a large enough tree will become bottlenecked on the physical scaling limits of memory in euclidean space, and eventually converge to the same scaling rates; a processor with 2D memory forced to store a large enough tree will eventually converge to ~O(√n) time to locate nodes.

Many other algorithms and data structures scale in similar ways, and the dynamics by which the amount of computation and intermediate data grows and shrinks throughout evaluation is a complex geometric object that must be embedded in and confined to the limits of the lattice of memory cells and logic circuits that make up a computer.

Square-Cube Law, Heat, and Bandwidth

Take any object and scale it up. Make it twice as tall, twice as long, twice as wide. Its volume has increased by a factor of 2^3, or 8. Its surface area however has increased by only a factor of 2^2, or 4. The ratio of volume to surface area has doubled, and this has a profound impact on many properties and processes involving this object.

The amount of heat that can be stored within an object is proportional to its volume, but the rate at which it can expel this heat to the outside world is proportional to its surface area. If you have a 3D circuit and decide to scale it up, you eventually hit limits where the chip must be limited in how much work it can do (and therefore how much heat it can generate), or face building up heat too fast and melting.

Heat is of course just waste entropy, and the rate at which we can move useful entropy (information) in and out of the processor is bound by the same constraints. A sphere of computronium has bandwidth with the outside world that is ultimately bounded by its surface area. This decides how much information and heat can go in and can go out.

Manufacturing 3D circuits is difficult, error-prone, and expensive. 3D memory has been around for a while now, and the beginning of 3D stacked logic will likely be reaching consumer devices by the end of this decade. However, chips already generate large amounts of heat and are finding it increasingly difficult to operate without producing too much. Some efficiency gains can be made from the reduced distances between gates possible by moving from 2D to 3D, but this is a small reduction compared to the large increase in heat from more densely packing gates. While memory circuits can keep heat output low thanks to how infrequently most memory is accessed, logic circuits will likely be forced to stay close to 2D to keep the scaling of computing and cooling as closely coupled as possible.

Conclusion

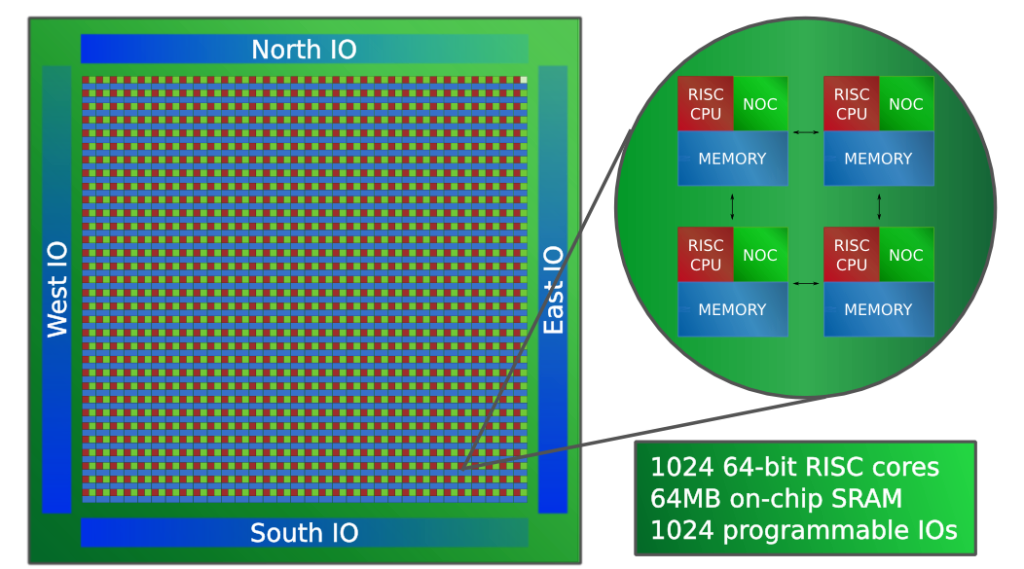

While perhaps our usual chips are not well-suited for these concerns with computing, the future will likely rely more heavily on tiled architectures, such as Adapteva’s Epiphany architecture, or Cerebras’ gargantuan wafer-scale chips. These offer massive core counts and massive amounts of performance. They also offer very precise control over the physical layout of data and compute across the chip, offering huge efficiency gains over the standard approaches of large, purely heuristic-driven caches.

The tricky part is that I’m not sure we’re ready to program these things yet, at least not for anything beyond simple operations on large, flat arrays. As I’ve described above, there’s a lot going on and a lot of complex factors to account for.

Of course, we’ll never be ready for a change like this. Once a good, general-compute-oriented tiled architecture makes its way onto the market and gains enough users, new branches of computer science and code optimization will flourish, and likely many decades of experimental work will follow. These architectures will likely age like wine, getting more capable as people gain a much deeper understanding of how to properly optimize them.

Beyond that, it’s hard to say what computing will look like far into the future. While tiled architectures seem to offer a close-to-optimal approach for linking cores together, there’s a great deal about how individual CPU cores are currently designed that I doubt is actually very optimal, and is more of a bunch of fairly contrived and rigid ideas that people have locked themselves into.

The computers of the distant future may look very different from those of today, but we at least have some guidelines from physics to sketch out very rough ideas about them.