Infrastructure and General-Purpose Tools

What "Ubiquitous Computing" got wrong

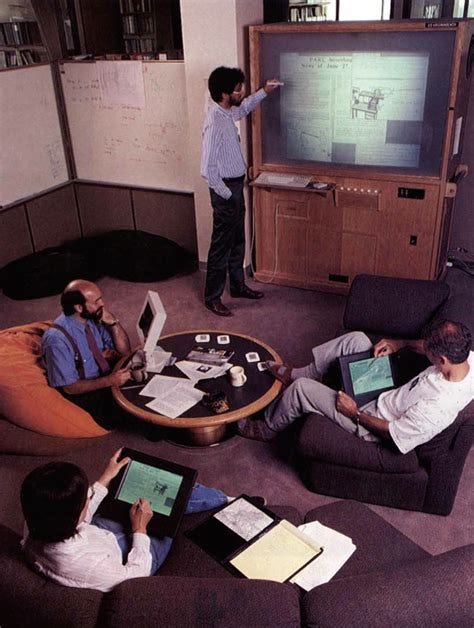

Xerox PARC was a very influential source of innovation throughout the 1970s, 80s, and 90s. They pioneered a large number of ideas around human-computer interactions, and often were better at developing ideas than turning them into products before their competitors. In 1987, they hired Mark Weiser, who quickly became head of his own research lab internally, developing his ideas of “Ubiquitous Computing”. He was eventually promoted to CTO in 1996 before his untimely death in 1999.

Ubiquitous Computing was a philosophy of computer interface design that imagined computers fading into the background, becoming almost invisible. As Weiser saw it, people don’t set out to interact with a computer, people set out to solve a problem. The computer is merely a means to an end, and much of computer design just gets in the way. Weiser also often made analogies to written language - we barely notice the thousands of objects all around us covered in written text, nor do we think much about how we interact with these things, we do so intuitively. The goal was to design computing systems that would behave similarly.

Weiser’s vision was that this would be accomplished by having a spectrum of different kinds of computers - “computing by the inch, the foot, and the yard” as he put it. More specifically, this involved computers called tabs, pads, and boards.

Tabs were imagined like digital post-it notes, and could function as ID badges for employees or objects, PDAs, common functional items such as calculators, or controllers for appliances such as smart thermostats. There was also an idea of tabs being embedded into a wide array of household items, perhaps with beepers in order to help locate lost items.

Pads were imagined like a sheet of scrap paper, or a magazine or book. They were meant to serve as devices for individual work, somewhat like tablets of today.

Boards were imagined like chalkboards or whiteboards, meant to fascilitate group work and interactions.

Another core idea was that these devices would all talk to each other across the internet, and that devices would largely understand who you are (perhaps by scanning your ID badge tab), and that any device would be able to grant you access to all your files based on your identity. You wouldn’t have much of a personal collection of tabs, pads, and boards, but rather you could walk up to any such device and start using it as though it were your own.

This of course would be an incredible security nightmare, but these were idealistic people in idealistic times. Some attention seems to have been given to security with talk of encrypting files over the internet, though it’s hard to imagine how this would have really made much of a difference without making the rather poor assumption that every device is completely trustworthy.

Ubiquitous computing was also made explicitly opposed to two popular ideas in computing at the time - Virtual Reality and Personal Computing.

VR was opposed on the grounds that it attempted to have the computer replace the outside world entirely rather than augmenting people’s interactions with the world. Spending all day in a digital fantasy world doesn’t put food on the table. I think this is a fair critique of VR and likely a major contributor to why it still to this day has yet to really take off.

Personal computing was seen as an arcane and unintuitive form of computing, compared to how “scribes once had to know as much about making ink or baking clay as they did writing.” Weiser saw it as merely a primitive transitional step, one that isolated people in their own little worlds, and that no one in the future would use for the same reason that no one has a single, extremely important book they carry around everywhere for all their paper-based needs.

The Limits of Simplicity

Every object you interact with has a sort of language embedded into it. You can enumerate some set of possible interactions, which can be thought of as symbols, and sequences of interactions or symbols behave like statements or conversations which result in particular outcomes. At least in the abstract, this applies just as well to interacting with humans and computers as it does with, say, a hammer, or a pen, or a piece of clay. Our minds are filled with many bits of knowledge of the form “do W, X, and Y, and then Z will happen” that guide our interactions with the objects around us. The space of all of these objects can be imagined as akin to a finite state automata, a big flow chart, or a descision tree, which our minds navigate as a series of actions meant to arrive at a planned outcome.

I suspect that the human brain models its interactions with tools in much the same way it models conversations, through this kind of linguistic model. The low-level circuitry in the brain’s cortical columns show no apparent difference between humans and nonhumans or linguistic and non-linguistic brain regions. It’s the same general-purpose thinking algorithm, just applied to a new problem.

If we assume a practically finite number of potential interactions with an object, and perhaps assume some locality and other factors that make some interactions more convenient than others, we can imagine some enumerable space of interactions. We can augment it with a cost model - longer sequences of actions tend to be more expensive, complex, and/or time consuming to perform than shorter sequences. There are also information-theoretic constraints. If there are two possible actions we can take with a given tool, and we want a million distinct outcomes, then it is not possible to create a language where there are not at least some outcomes which require log2(1,000,000) or ~20 actions to reach. In practice, many sequences of actions may produce identical or useless results, and so accounting for this redundancy will often increase the number of actions required to reach the average desired outcome.

A little redundancy can of course be useful, as having multiple ways to accomplish a task can enable personal style and flexibility in learning, as well as creating flexibility for forgiving the occasional mistake.

Perhaps some cases involve continuous systems where the state space may be technically infinite, though in practice a combination of the finite resolution of the human mind, the tendency of continuous systems to map miniscule changes in input to miniscule changes in output, and the fact that the exceptions to these cases can be modelled as merely having a random element beyond human precision to control, all together make such technicalities a non-issue.

Using this information-theoretic constraint, we can see that with any given tool the complexity of the average interaction or task is directly limited by the number of distinct outcomes that are possible. We can fiddle with the design of our tools to shift what is and isn’t convenient to accomplish, squeezing out redundancies and inefficiencies where they exist, but there is always a fundamental limit on how expressive we can really be for a given amount of brevity.

The Case of General-Purpose Tools

If we have a constrained problem with a manageable and finite number of possible outcomes, defining a simple and efficient interface isn’t too hard. However, as we increase ambition and utility, interactions get far more complex.

Computers are general-purpose machines, that’s their whole point. There is a vast and effectively infinite range of behaviors and functions we could achieve with a given computer, and to start pruning these options in any meaningful way would eliminate much of the point of the computer.

If we wanted to create a “language” to optimize our interactions with computers, to reduce the average number of actions required to get a computer to perform a task, where do we even start?

There is a “sport” among programmers called code golf, in which the goal is to write a specific program using the absolute least amount of characters possible, no matter how unreadable or bizarre the output. Seeing as some languages tend to be more verbose than others, there has tended to be a metagame to it around language choice. Eventually, “code golf languages” were developed primarily for the sake of exploiting this. Such languages tend to do funny things such as defining single-character functions for solving common code golf challenges, or defining an empty source file to be equivalent to a hello world program, making the most common code golf challenge require literally zero characters (something a little hard to beat). Other design choices are often made around minimizing the file size of other common code golf challenges with zero regard made for readability. In a narrow sense, these languages are theoretically highly optimized and concise, but in practice they are optimized for a game with little practical value.

As mentioned before, a little redundancy can often be a good thing.

If the point of a computer is to be general-purpose, to be capable of a very wide variety of things, to be capable of nearly anything, then there really is no one central goal to optimize around. The most fair thing to do would be to pick some basis that is not too inconvenient to implement many common things with while optimizing for something practical and fundamental such as energy efficiency.

Instead, the real game is not in finding the one weird trick that enables us to speak single sentences into our computers and have the precise, often complex outcome we want to land at our feet with close to zero effort. This limits detail in specification, and often leaves us with undesireable results on any underspecified dimension.

This line of reasoning can of course also be used to pour cold water on the many pipe dreams around things like declarative programming and prompt-based AI, but for now I’ll leave that as an exercise for the reader to contemplate.

So the game of finding the one weird trick that makes all our interactions with computers extremely terse and efficient is largely a fool’s errand if we take it too seriously - undoubtedly there are useful innovations that can and have been found, but it will never give us the kind of magic that so many dream of. Rather, the real game is the one we’ve been playing all along - infrastructure and customization. Tooling. Personalization.

Everyone has a different set of tasks they need to use their computer for, and just as people organize and customize their house and possessions to help them better accomplish their work and chores, they do the same with their computers. The way to build something that is both general-purpose and that is convenient to use is to build something that conforms to whatever the particular user needs to use it for.

The software you have locally installed on your machine doesn’t just reduce how much you need to download on the fly, but also reflects the kinds of problems you spend your time working on. Any convenience that comes from using something already on your machine rather than something that must be installed before use is something that optimizes for your use and makes you more productive. The machine conforms to your personal needs, and thus becomes more convenient for you to use than some standardized, one-size-fits-all solution. It may be less convenient for someone else to use, but who cares? It’s yours.

If you’re building software, you’re doing so with a vast stack of development tools and code libraries that make things more convenient with the assumption that they represent a solution to a problem that you’re likely to reuse. If you have a package manager or other cache of libraries that you specifically have downloaded for your coding needs, you’ve optimized this system around your personal convenience. Someone else will likely have a very different personal code collection.

The way people use internet browsers, with tens or sometimes hundreds of open tabs reflects a similar kind of use. If your browser crashes and you lose all those tabs, it may be awfully inconvenient to reconstruct all you had built for yourself for the problems you were working on. You had a mental map of what kinds of tabs you had open and how to get to them all, though some of that knowledge was probably still stored by the browser. Much of that map becomes second-nature and intuitive as you build and maintain it, at least for you.

Perhaps there’s some inconvenience from browsers not being designed with the goal of juggling so many tabs, but that’s a different problem that’s solvable with better browser design.

If you have a general-purpose tool, where little can be made convenient by default because of the infinite breadth of potential functionality, the way you make it convenient to use is to build structures within it that compress the paths you most frequently take so that it is convenient for your personal purposes.

Back to Ubiquitous Computing

There are some interesting and nice ideas to be found in Mark Weiser’s work. His ideas have been influential in some corners of design around IoT and cloud computing. I wouldn’t be surprised if there are a few remaining gems in his work that have yet to be given wide adoption in the right context.

I imagine however that the balancing act needed to maintain computers with the level of personalization necessary to be meaningfully and maximally useful while also opposing ideas from Personal Computing would have proven difficult if Weiser would have lived long enough to continue his work that far. The analogies with paper and similar items only can go so far - paper is useful for writing and drawing, but its uses are also vastly more limited than computers. If it were not, we would just keep using paper for everything.

Effortless simplicity of the kind that Weiser dreamed of is incompatible with generality. You must pick one or the other, and the only obvious compromise is with a system that gradually conforms to personal needs, which brings us right back to personal computing. Perhaps there were some ideas around using ID badge tabs and personal file access that could have made that work, but isn’t that just personal computing with extra steps?

Sometimes people get too attached to an idea because of how nice it seems that they become blind to its flaws. Oftentimes grand, simplifying ideas don’t actually pan out that well - the world is far too complex and any structure requires assumptions to be made, assumptions that will not hold across all situations in the world. Any broad vision that “everything must be done in this way” is going to eventually run into friction and contradictions in some cases.

Excessive standardization in the face of a very complex problem is doomed to fail.

If there were a constrained, limited range of usecases that covered 80% of what people use their computers for - social media, Youtube/Netflix, word processing, spreadsheets, maybe a few others - perhaps some approximation of this vision could be achieved while still letting the hardcore users use their laptops, desktops, and supercomputers for the rest. Perhaps that would also be limiting what computers could evolve into though, limiting our imagination of what computers could be to mere office supplies, crystalizing things too early.

For those who do not follow my Twitter, I recently announced that a few friends and I are launching Possibilia Magazine, a literary magazine for optimistic, realistic, near-term science fiction. The overall goal is to offer brighter alternatives to the abundant, bleak depictions of the future that are so often presented in media, while also being able to showcase esoteric and new technologies and ideas that we feel get far too little attention. If you’re interested, you can join the mailing list here to be alerted of updates. We also have opportunities for writers and artists for those interested.

This is a crazy idea I had in the summer of last year that has unexpectedly snowballed into something that’s been gaining a lot of traction and getting a lot of amazing people involved. I honestly never expected things to move this quickly or get this much attention, and the result is shaping into something far more ambitious than what I originally dreamt up last year.

It’s also been keeping me a bit busy lately, which explains why my substack posts have been so sporadic. I do have a few articles in the pipeline though, and I’m still spending a significant amount of time on coding projects, so you can expect more frequent content here in the near future.