Is CMOS All There Is?

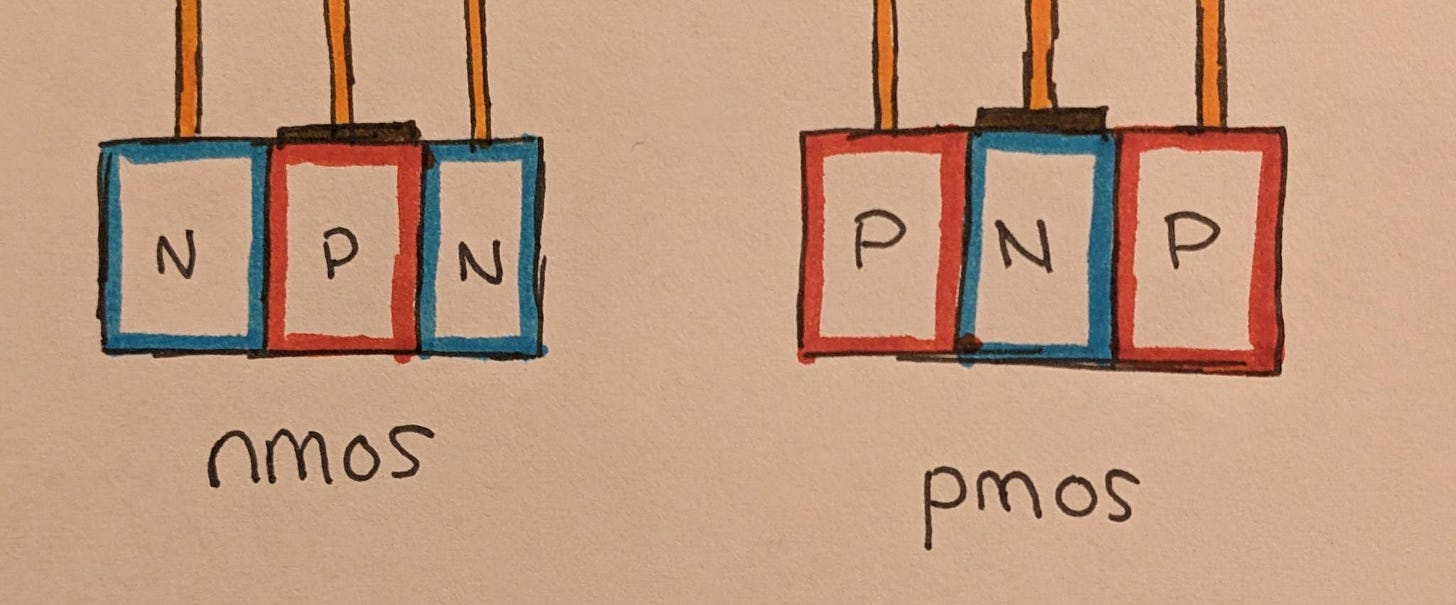

Not all transistors are the same. In fact, there are two main types: PMOS and NMOS. A transistor consists of three alternating regions of semiconducting silicon, and those regions come in two types: P-type and N-type. Whether these three conjoined regions are arranged NPN (an NMOS transistor) or PNP (a PMOS transistor) doesn’t really matter if your only goal is to make a transistor, but the two options do create two different types of transistors.

While it’s possible to build useful circuits using only PMOS transistors, or only NMOS, nearly every microprocessor today is made exclusively with CMOS logic, which relies on a combination of the two.

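Each type on its own has a power problem. A gate built only from NMOS transistors draws a steady current from the power supply whenever its output is 0, and a gate built only from PMOS transistors does the same whenever its output is 1. CMOS combines the two in a way that cleverly exploits the interaction of their electrical properties: in either steady state, one network is on and the other is off, so there is never a direct path from the supply to ground. That gets the best of both worlds, a type of computer logic that draws very little power in both situations and mostly spends energy only when it switches.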
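
Here’s a toy switch-level sketch in Python of why that works (my own illustration, not a circuit simulator). Each transistor is treated as an ideal switch: NMOS conducts when its gate is 1, PMOS when its gate is 0. The NMOS-only inverter is assumed to use an always-on load as its pull-up, and leakage is ignored. The function names are hypothetical.

```python
# Toy switch-level model: transistors are ideal on/off switches.
# NMOS conducts when its gate input is 1; PMOS conducts when it is 0.
# Assumptions: no leakage, and the NMOS-only inverter uses an always-on
# load (e.g. a resistor) as its pull-up.


def cmos_inverter(a: int) -> tuple:
    """Return (output, static_current) for a CMOS inverter."""
    pmos_on = a == 0  # pull-up network: connects output to VDD
    nmos_on = a == 1  # pull-down network: connects output to ground
    output = 1 if pmos_on else 0
    # Steady current flows only if VDD and ground are connected at once.
    return output, pmos_on and nmos_on


def nmos_inverter(a: int) -> tuple:
    """Return (output, static_current) for an NMOS-only inverter."""
    load_on = True  # the load never switches off
    nmos_on = a == 1
    output = 0 if nmos_on else 1
    return output, load_on and nmos_on


for a in (0, 1):
    print("in", a, "CMOS", cmos_inverter(a), "NMOS-only", nmos_inverter(a))
```

Only the NMOS-only gate ever reports a steady current, and only when its output is 0. A PMOS-only gate has the mirror-image problem when its output is 1.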

NMOS transistors happen to be faster in practice than PMOS, because electrons move through silicon more easily than holes do. Combining the two into CMOS creates something in the middle: faster than PMOS logic, but slower than NMOS logic. Partly for this reason, CMOS didn’t really take off until the 80s; before then, people preferred NMOS. For many circuits, CMOS can also require up to twice as many transistors, another penalty.
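
One way to see where the “twice as many transistors” figure comes from is to count the devices in an n-input NAND gate. A static CMOS NAND needs n PMOS transistors in parallel plus n NMOS transistors in series, while an NMOS-only version needs the same n NMOS transistors plus a single load device. A quick Python sketch (the function names are hypothetical):

```python
def cmos_nand_transistors(n: int) -> int:
    # n PMOS in parallel (pull-up) + n NMOS in series (pull-down)
    return 2 * n


def nmos_nand_transistors(n: int) -> int:
    # n NMOS in series (pull-down) + 1 always-on load (pull-up)
    return n + 1


for n in range(1, 5):
    cmos, nmos = cmos_nand_transistors(n), nmos_nand_transistors(n)
    print(f"{n}-input NAND: CMOS {cmos}, NMOS-only {nmos}")
```

For a simple inverter (n = 1) the counts are equal, and the ratio creeps toward 2 as gates get wider, which is why the penalty shows up more in some circuits than in others.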

Traditionally, these transistors are composed into logic gates like AND, OR, XOR, and NOT. These gates can then be composed into much larger circuits to perform more complex and useful computations.
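
As a small example of that composition, here’s a Python sketch that builds a one-bit full adder out of AND, OR, and XOR, then chains four of them into a 4-bit ripple-carry adder. The gates are modeled as plain functions on 0/1 values, and all names are my own.

```python
# Basic gates on single bits (0 or 1).
def AND(a: int, b: int) -> int:
    return a & b


def OR(a: int, b: int) -> int:
    return a | b


def XOR(a: int, b: int) -> int:
    return a ^ b


def full_adder(a: int, b: int, carry_in: int) -> tuple:
    """Add three bits using only AND, OR and XOR gates."""
    partial = XOR(a, b)
    total = XOR(partial, carry_in)
    carry_out = OR(AND(a, b), AND(partial, carry_in))
    return total, carry_out


def ripple_carry_add(x: int, y: int, width: int = 4) -> int:
    """Chain full adders to add two width-bit numbers (the result wraps)."""
    carry = 0
    result = 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result


print(ripple_carry_add(5, 6))  # 11
print(ripple_carry_add(9, 9))  # 18 wraps to 2 in 4 bits
```

The same pattern keeps scaling: adders become ALUs, and ALUs become CPUs.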

This traditional approach is usually called static logic, but, intriguingly, it is not the only possible way to build such circuits.

Dynamic Logic

One alternative is dynamic logic, along with its more modern variant, domino logic. This is a very different style of digital logic, built around a few tricks.

One trick relies on NMOS’s speed advantage. By building the critical path through a circuit primarily out of NMOS transistors, and leaving the PMOS transistors mostly off to the side, dynamic logic can allow clock speeds of up to around double those of static logic.

Another trick that dynamic logic uses is the clock signal itself, which helps with the computation. Half of each clock cycle is spent with the clock wire at 0 (low voltage), and the other half with it at 1 (high voltage). This splits each clock cycle into two smaller phases, usually called precharge and evaluate, and which pathways are open or closed can change between them.

A third trick is to use the wires themselves as computing elements by exploiting their capacitance. The two phases of a cycle open and close pathways for current to travel through the circuit. During the first phase, the clock charges up a few wires, which hold that charge like tiny capacitors. During the second phase, some gates open, and depending on the inputs, the NMOS network either drains that stored charge away or leaves it in place. Whatever charge remains on the wire is the result, which then drives the next set of circuits.
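
To make the two phases concrete, here’s a toy Python model of a dynamic NAND node followed by a static inverter, which is the basic shape of a domino stage. It assumes ideal switches and ignores leakage and charge sharing; the class and function names are hypothetical. With the clock at 0 (precharge), a clocked PMOS charges the node’s wire to 1. With the clock at 1 (evaluate), the node is drained to 0 only if both series NMOS inputs conduct; otherwise it simply keeps its stored charge.

```python
class DynamicNandNode:
    """Switch-level sketch of a dynamic NAND gate's storage node.

    Assumptions: ideal switches, no leakage, no charge sharing.
    """

    def __init__(self) -> None:
        self.node = 0  # charge held on the node's wire capacitance (0 or 1)

    def step(self, clk: int, a: int, b: int) -> int:
        if clk == 0:
            # Precharge: clocked PMOS connects the node to VDD.
            self.node = 1
        elif a and b:
            # Evaluate: both series NMOS conduct, so the charge drains away.
            self.node = 0
        # Otherwise the node floats and keeps whatever charge it already had.
        return self.node


def domino_and(gate: DynamicNandNode, clk: int, a: int, b: int) -> int:
    """Domino stage: a static inverter after the dynamic node yields AND."""
    return 1 - gate.step(clk, a, b)


gate = DynamicNandNode()
for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    domino_and(gate, clk=0, a=a, b=b)  # precharge half-cycle
    print(a, b, domino_and(gate, clk=1, a=a, b=b))  # evaluate half-cycle
```

Because the node can only be drained during evaluation and is never recharged until the next precharge, each domino output can only rise from 0 to 1 within a cycle, which is what lets stages be chained and fall in sequence like dominoes.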

There are some tradeoffs here, of course. Compared to static logic, dynamic logic is harder to design, often uses more power, can be more susceptible to errors from cosmic rays (making it much less reliable in space), and only works above a minimum clock speed, because the charge stored in the wires rapidly leaks away. With that said, it’s still a common tool in a lot of CPUs that run at high clock speeds.
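
Why leaking charge puts a floor under the clock speed can be seen with a back-of-the-envelope model. This Python sketch assumes the precharged node leaks like a simple RC discharge, V(t) = VDD * exp(-t / tau), and that it must stay above some threshold V_min for the whole evaluate half-cycle of a 50% duty-cycle clock. That gives a maximum hold time of tau * ln(VDD / V_min) and a minimum clock frequency of 1 / (2 * hold time). The numbers are placeholders, not measurements from any real process, and real leakage is messier than a single exponential.

```python
import math

# Assumption: leakage is modeled as a simple RC-style discharge with time
# constant tau. Real leakage mechanisms are messier than this.


def node_voltage(t: float, vdd: float, tau: float) -> float:
    """Voltage left on a precharged dynamic node after t seconds of leakage."""
    return vdd * math.exp(-t / tau)


def max_hold_time(vdd: float, v_min: float, tau: float) -> float:
    """Longest time the node stays above v_min (still read as a 1)."""
    return tau * math.log(vdd / v_min)


def min_clock_hz(vdd: float, v_min: float, tau: float) -> float:
    """Slowest clock that still works, if the node must hold its charge
    through one half-cycle of a 50% duty-cycle clock."""
    return 1.0 / (2.0 * max_hold_time(vdd, v_min, tau))


# Placeholder values for illustration only.
vdd, v_min, tau = 1.0, 0.8, 10e-6
print(f"hold time: {max_hold_time(vdd, v_min, tau) * 1e6:.2f} us")
print(f"minimum clock: {min_clock_hz(vdd, v_min, tau) / 1e3:.0f} kHz")
```

Slow the clock below that floor and the stored 1 decays into something the next stage might read as a 0, which is exactly the failure static logic never has.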

Beyond?

I was having a conversation with a hardware engineer a few weeks ago, and mentioned my excitement at how many basic assumptions in computing my current hardware startup allows me to question. His response was that he wished there were more research into alternatives to dynamic/domino logic.

If there are two ways of doing this, static and dynamic, how many more alternatives are we missing out on? Dynamic logic has its variant, domino logic; are there more possible variants? Why aren’t we exploring those?

Then why not ask some more daring questions? Maybe there are only two types of transistors, but are there other semiconductor devices that could be easily manufactured alongside them and that could also serve as useful tools? One obvious example would be a diode, which consists of only two semiconducting regions with a single p-n junction. A less obvious example might be something like a thyristor, which has four regions and three junctions.

Then there’s the whole question of quasiparticles. One of the best ways to think about how transistors work is that N-type semiconductors are full of loosely bound, “free” electrons, whereas P-type semiconductors are full of “holes”. These holes are nothing more than semistable gaps that electrons can easily slip into, but they can be reasoned about as though they were particles in their own right: a kind of emergent, positively charged “anti-electron”. Hence, a quasiparticle. The interactions that occur when electrons and holes meet create the variety of effects that make transistors and related devices work.

Over the past century, though, a whole menagerie of other quasiparticles has been identified, from the phonons that serve as individual quanta of sound and heat to the “Cooper pairs” that allow for the existence of many types of superconductors. If all of modern computing is built upon one type of quasiparticle (holes), I expect there’s a good chance new types of logic could be built by combining holes with other quasiparticles.

Fundamentally, computer logic is like a LEGO set. Perhaps you can reduce things down to a single, universal block that any shape can at least be approximated with. However, introducing a wider variety of options creates a richer combinatorial explosion of possibilities from all of their interactions. Keep in mind, there’s a good reason why LEGO has a much wider variety of pieces than it did a few decades ago.
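
The “single, universal block” idea has a concrete form in digital logic: NAND is functionally complete, so every other gate can be built from it alone. A short Python sketch (the gate names are just labels for illustration):

```python
def NAND(a: int, b: int) -> int:
    """The single 'universal block': outputs 0 only when both inputs are 1."""
    return 1 - (a & b)


# Every other gate built from NAND alone.
def NOT(a: int) -> int:
    return NAND(a, a)


def AND(a: int, b: int) -> int:
    return NOT(NAND(a, b))


def OR(a: int, b: int) -> int:
    # De Morgan: a OR b == NOT(NOT a AND NOT b)
    return NAND(NOT(a), NOT(b))


def XOR(a: int, b: int) -> int:
    # Classic four-NAND construction.
    m = NAND(a, b)
    return NAND(NAND(a, m), NAND(b, m))


for a in (0, 1):
    for b in (0, 1):
        print(a, b, AND(a, b), OR(a, b), XOR(a, b))
```

The catch shows up in the XOR: it takes four universal blocks to do what one purpose-built piece does, which is the whole argument for having a wider variety of pieces.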

Almost no chip made in the past 40 years uses fewer than two types of transistors, even though it could. Many chips exploit dynamic logic tricks to turn wires into a third active computing component, even if a wire isn’t quite as versatile as a third type of transistor would be.

I don’t (yet) have the sophisticated physics knowledge to know what types of bizarre new components could be useful here. The engineering effort necessary to try out something like 5nm transistor-thyristor logic is also high enough that it’s beyond anything I could put in a chip anytime soon, so I haven’t bothered to figure out what new possibilities it might open up. New approaches to CMOS logic are on my mind a little, but I expect it’ll be a while before I have anything interesting to say on that front.

With that said, as it gets harder to find performance gains from merely packing more and more transistors onto a chip, new types of components, even ones with more niche logic specialties, will become the next-best option. After all, we’ve explored only an extremely tiny fraction of the possible arrangements of atoms that are computationally interesting.

I expect this to become a more actively explored frontier of hardware design, and sooner or later I expect it will bear some very interesting fruit.

I had planned to put out a different article today (well, originally yesterday), but that one simply wasn’t coming together very well. It needs a bit more time, so a short article on CMOS logic felt like something interesting to write and easy to throw together in the early hours of the morning.

If you enjoy my writing and want to support my work, feel free to subscribe and share. If you’re on the Substack app, you can also hop into the new chat feature and send me some feedback or ask me some questions.