Moore's Wall

One assumption that engineers have been able to make for most of the past few decades has been Dennard scaling: the observation that as transistors shrink, the power each one uses shrinks proportionally, so power density stays roughly constant. This assumption, however, stopped being true around 2005, and modern chips now include increasingly large amounts of “dark silicon”: circuitry that must sit powered off or throttled much of the time to keep power budgets under control and prevent chips from overheating.

As transistors get closer and closer to the atomic scale, the mechanisms that allow them to function break down. Rising leakage current is what broke Dennard scaling, and quantum tunneling places a lower limit on how thin the insulating layer under the gate can be made.

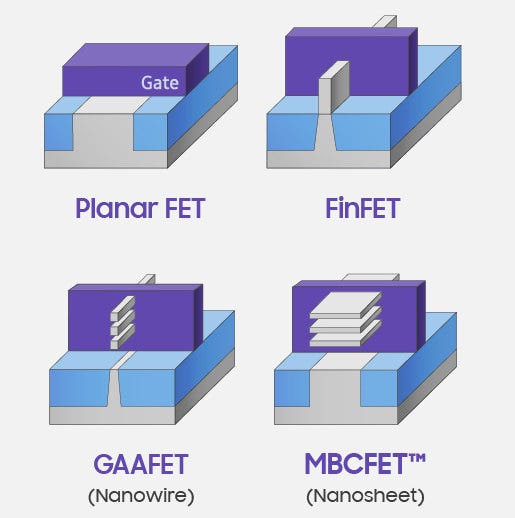

There are tricks that can circumvent these problems, such as introducing exotic metals like hafnium into the dielectric insulator around the gate, or manipulating the geometry of the gate - wrapping the gate around the channel to maximize surface area while minimizing size.

This helps, but at the cost of dramatically increasing manufacturing complexity for marginal, one-time gains. This isn’t like the old Moore’s Law, where we could scale transistors down with the same trick every generation; now every generation forces us to get creative and come up with a new trick.

The tricks going into the next few generations of transistors are starting to get really crazy. The channel between the source and drain of the transistor will soon consist of several stacked “nanowires” or “nanosheets”, allowing the gate to wrap all the way around them (hence Gate-All-Around FET, or GAAFET) to maximize contact area.

IBM and Samsung are developing VTFET - Vertical Transport Field Effect Transistors. These transistors effectively flip the transistor on its side to allow more dense packing, once again at the expense of much more difficult manufacturing and likely more dark silicon.

Then there’s Intel, which is not just trying GAAFET (which it calls RibbonFET), but is also experimenting with a limited form of transistor stacking as well as its “PowerVia” technology, which etches holes through the backside of the silicon die to deliver power from the back, reducing wiring congestion for logic.

None of these options are really that unintuitive; many of them are things I distinctly remember thinking about when I was first learning about transistors as a teenager and letting my imagination run wild. After a while you get bored imagining just making transistors smaller and smaller the simple way, and then you notice that you eventually hit atoms and look for other ways to scale things.

I’m sure I’m far from the first person in the past 60 years to think of these ideas. The reason no one has made commercial chips with stacked transistors, with power routed through the back of the die, or with absurdly complex multi-layer ribbon transistors is that those kinds of changes are extremely difficult. They’re also not repeatable. They’re only on the table now because traditional transistor scaling is no longer viable and everyone is scraping the bottom of the barrel for the last few gains possible.

Recently, TSMC even announced that its 3nm node will provide little to no SRAM scaling over 5nm. Since most chips today contain very large amounts of SRAM (think caches, register files, and other on-chip buffers), for many chips 3nm will not be much of an improvement over 5nm at all. Once the rising costs of these new generations are factored in too (a result of their rapidly rising complexity), switching to a newer transistor node makes vanishingly little sense for many designs.
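The SRAM argument is essentially Amdahl’s law applied to die area: if part of a chip doesn’t shrink, it caps how much the whole chip can shrink. The sketch below assumes a simple model where a design’s area splits into an SRAM share and a logic share, each scaling independently. The function names and the 1.6x logic density figure are hypothetical placeholders for illustration, not TSMC’s published numbers.

```python
def relative_area(sram_fraction: float, logic_shrink: float, sram_shrink: float = 1.0) -> float:
    """Area of a ported design relative to the original (1.0 means no change).

    sram_fraction: share of the original die area occupied by SRAM (0..1).
    logic_shrink: density gain for logic on the new node (e.g. 1.6 = 1.6x denser).
    sram_shrink: density gain for SRAM; 1.0 models "no SRAM scaling at all".
    """
    if not 0.0 <= sram_fraction <= 1.0:
        raise ValueError("sram_fraction must be between 0 and 1")
    logic_fraction = 1.0 - sram_fraction
    return sram_fraction / sram_shrink + logic_fraction / logic_shrink


def effective_density_gain(sram_fraction: float, logic_shrink: float, sram_shrink: float = 1.0) -> float:
    """Whole-chip density gain: the reciprocal of the relative area."""
    return 1.0 / relative_area(sram_fraction, logic_shrink, sram_shrink)


if __name__ == "__main__":
    # Hypothetical inputs: logic gets 1.6x denser, SRAM does not shrink.
    for sram in (0.0, 0.3, 0.5, 0.7):
        gain = effective_density_gain(sram, logic_shrink=1.6)
        print(f"SRAM share {sram:.0%}: whole-chip density gain {gain:.2f}x")
```

Under these assumed numbers, a chip that is half SRAM only gets about 1.23x denser overall, and the gain keeps falling toward 1x as the SRAM share grows.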

While there is perhaps some fuel left in the tank, our beloved silicon transistors manufactured with photolithography won’t take us much further, with scaling likely halting this decade. Even if there are still some techniques for scaling further, at some point the economic barriers become too great, and it makes much more financial sense to buy two old computers than to buy one new one.

This is the end of Moore’s Law. What comes next is strange.

Landauer’s Limit and Moore’s Wall

In physics, there is a known limit on the energy efficiency of irreversible computation, called Landauer’s Limit: there is a minimum amount of energy required to erase a bit, k_B·T·ln 2, which depends on the ambient temperature T. Near room temperature this works out to roughly three zeptojoules, small enough that a trillion bits could be erased per second while requiring only a few nanowatts of power.
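The arithmetic behind those figures is short enough to check directly. This sketch uses the exact SI value of the Boltzmann constant and assumes 300 K as “room temperature”; the function names are just illustrative.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # exact by definition in the 2019 SI


def landauer_energy_joules(temperature_k: float) -> float:
    """Minimum energy to erase one bit at the given temperature: k_B * T * ln 2."""
    return BOLTZMANN_J_PER_K * temperature_k * math.log(2)


def landauer_power_watts(bits_per_second: float, temperature_k: float) -> float:
    """Minimum power to erase bits at a given rate, ignoring every other loss."""
    return bits_per_second * landauer_energy_joules(temperature_k)


if __name__ == "__main__":
    room_temp = 300.0  # kelvin, an assumed "room temperature"
    energy = landauer_energy_joules(room_temp)
    power = landauer_power_watts(1e12, room_temp)
    print(f"{energy * 1e21:.2f} zJ per bit, {power * 1e9:.2f} nW for 1e12 bits/s")
```

This prints 2.87 zJ per bit and 2.87 nW for a trillion bit erasures per second.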

We are nowhere near this. Measured in orders of magnitude, we are only a little over halfway there; closing the rest of the gap would take decades more of uninterrupted, Moore’s-Law-style exponential growth.
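To put “decades more” into numbers: later in this article the remaining gap to Landauer’s Limit is estimated at around six orders of magnitude. The sketch below converts that into doublings and years. The doubling periods are assumed values for illustration, not measured industry rates.

```python
import math


def doublings_needed(orders_of_magnitude: float) -> float:
    """Number of 2x improvements needed to gain a factor of 10**orders_of_magnitude."""
    return orders_of_magnitude * math.log2(10)


def years_needed(orders_of_magnitude: float, years_per_doubling: float) -> float:
    """Time to close the gap if efficiency doubles at a steady, assumed rate."""
    return doublings_needed(orders_of_magnitude) * years_per_doubling


if __name__ == "__main__":
    gap = 6.0  # orders of magnitude, the article's rough estimate
    for period in (1.5, 2.0, 3.0):  # hypothetical doubling periods, in years
        print(f"{doublings_needed(gap):.1f} doublings, "
              f"{years_needed(gap, period):.0f} years at {period} years/doubling")
```

Six orders of magnitude is about 20 doublings, or roughly 40 years at one doubling every two years.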

The implication is that, even after Moore’s Law slows to a halt, there is potentially one more equally large computer revolution ahead of future generations, waiting for the perfect storm of an engineering path and an economic engine to carry it to fruition.

However, our existing paradigm of computing is a double-edged sword. On one hand, it has taken us extremely far and has largely created the modern world. On the other hand, it took an extraordinary amount of resources to do the research, engineering, and manufacturing to get here, and any successor must outdo all of that. Going further is very difficult, regardless of the technology; even if a new technology comes along that can surpass silicon transistors, silicon will still have a 60-70 year, multi-trillion-dollar head start. If there isn’t a viable path to bootstrap a new computing paradigm and surpass silicon without it taking 60-70 years and trillions of dollars just to break even, it may never happen at all.

Moore’s Law is as much about economics as it is about engineering. It doesn’t just matter that we can make more efficient chips; you could do that with a decades-old scanning tunneling microscope if you had enough patience to build a whole chip one atom at a time. It also matters that it’s economically feasible to produce these chips, and that the next generation of chips isn’t just faster and more efficient, but also cheap enough to justify buying one new computer as opposed to two old ones.

Even if we found a technology that looked promising and were willing to dump trillions of dollars and decades of R&D into it before a single new computer ever hits the market, it’s very difficult to tell that far in advance whether any new technology will actually pan out. People have been predicting the end of Moore’s Law for decades, and there were plenty of good reasons to expect it to have halted a long time ago. It’s hard to know which hard problems are even possible to solve until you actually solve them. We could dump trillions of dollars into something that then hits its own wall before ever reaching the potential we had hoped for.

What stands before us is what I call Moore’s Wall. We know that there’s another computer revolution waiting for us in the future, but Moore’s Law has dug us so deep into a local minimum that it may be many decades or even centuries before a new technology comes along that can take us any further.

Past Moore’s Wall

There are a few known techniques that might allow us to go further eventually.

There are a few silicon alternatives out there, but fundamentally none of them seem to offer scaling that’s really that much better than silicon. Perhaps there’s something I’m unaware of, but I don’t expect this to buy us much more than one order of magnitude, whereas reaching Landauer’s Limit would require around six.

Modern CPUs are hilariously inefficient and have plenty of room for optimization. I’m personally working on this problem, and I estimate it could buy us an extra 3 orders of magnitude. With that said, Landauer’s Limit is about the cost of erasing a bit, not how efficiently we make use of our bit operations, so this doesn’t really count. There’s also an enormous amount of room for optimizing software, but once again, this is a case where Landauer’s Limit just doesn’t apply.

Even after hitting Moore’s Wall, we’ll still have a lot of room for optimizing what we’ve built out of transistors, even if we run out of low-hanging fruit for optimizing the transistors themselves.

Another factor to note is that Landauer’s Limit only applies to irreversible computing. Reversible computing is one approach that could be used to circumvent the wall somewhat, but only in certain scenarios.

Some computations are reversible, and some are necessarily irreversible. Any algorithm that does irreversible computation will produce extra ancilla bits, and resetting those at the beginning and end of each computation uses as much energy as an ordinary non-reversible computer would use anyway.
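A small example makes the distinction concrete. An ordinary AND gate is irreversible: four input combinations collapse onto two outputs, so the inputs can’t be recovered. The Toffoli (controlled-controlled-NOT) gate, a standard reversible gate, can compute AND without losing information, but only by starting an extra bit at 0 and carrying the inputs along as extra outputs. This is a minimal illustration chosen for this article, not a design from the author’s own work.

```python
from itertools import product


def and_gate(a: int, b: int) -> int:
    """Ordinary irreversible AND: two input bits in, one bit out."""
    return a & b


def toffoli(a: int, b: int, c: int) -> tuple[int, int, int]:
    """Reversible controlled-controlled-NOT: flips c only when a and b are both 1."""
    return a, b, c ^ (a & b)


if __name__ == "__main__":
    # AND is many-to-one: 4 inputs collapse onto 2 outputs, so information is lost.
    and_outputs = {and_gate(a, b) for a, b in product((0, 1), repeat=2)}
    print("distinct AND outputs:", len(and_outputs), "for 4 inputs")

    # Toffoli is a bijection on 3 bits, and applying it twice undoes it.
    states = list(product((0, 1), repeat=3))
    assert len({toffoli(*s) for s in states}) == len(states)
    assert all(toffoli(*toffoli(*s)) == s for s in states)

    # Reversible AND: the extra bit starts at 0 and ends holding a & b,
    # while a and b must be kept around as additional output bits.
    for a, b in product((0, 1), repeat=2):
        print((a, b, 0), "->", toffoli(a, b, 0))
```

The bits that have to be carried along are the cost of reversibility: keep them and memory fills up, erase them and you pay the Landauer cost anyway.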

For computations that can conform to the tight requirements of reversible computation, we can perhaps circumvent Landauer’s Limit. For computations where this is not possible, we can do no better than whatever our most efficient non-reversible computation paradigm is.

Quantum computing, even if it can be made to work (and this is an enormous if), is in fact a subset of reversible computing. Everything that’s difficult about reversible computers also applies to quantum computers. However, the quantum aspects make many parts of this already hard problem even more difficult. Reversible computers could be made semi-reversible to alleviate some of their difficulties, though many of the techniques for doing this are fundamentally impossible with a quantum computer without collapsing the quantum state, nullifying any quantum benefits.
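The sense in which quantum computing is reversible can be checked numerically: every quantum gate is a unitary matrix U, so its inverse is simply its conjugate transpose (U†U = I). Measurement is different. The sketch below models a measurement outcome as a projection onto |0⟩, a common textbook simplification, and shows that it has no such inverse. It uses plain Python lists rather than a linear-algebra library so it stays self-contained; the gate matrices are the standard Hadamard, phase (S), and CNOT gates.

```python
import math

Matrix = list[list[complex]]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    """Plain matrix product for small square matrices."""
    n = len(x)
    return [[sum(x[i][k] * y[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def dagger(m: Matrix) -> Matrix:
    """Conjugate transpose, which is the inverse of any unitary matrix."""
    n = len(m)
    return [[m[j][i].conjugate() for j in range(n)] for i in range(n)]


def is_identity(m: Matrix, tol: float = 1e-12) -> bool:
    n = len(m)
    return all(abs(m[i][j] - (1 if i == j else 0)) < tol for i in range(n) for j in range(n))


def is_unitary(m: Matrix) -> bool:
    """True if U^dagger U = I, i.e. the operation can be undone exactly."""
    return is_identity(matmul(dagger(m), m))


s = 1 / math.sqrt(2)
HADAMARD: Matrix = [[s, s], [s, -s]]
PHASE_S: Matrix = [[1, 0], [0, 1j]]
CNOT: Matrix = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 0, 1], [0, 0, 1, 0]]
PROJECT_0: Matrix = [[1, 0], [0, 0]]  # simplified model of measuring "0"

if __name__ == "__main__":
    for name, gate in [("H", HADAMARD), ("S", PHASE_S), ("CNOT", CNOT)]:
        print(name, "unitary:", is_unitary(gate))
    # Projection discards the |1> amplitude, so it cannot be undone.
    print("projector unitary:", is_unitary(PROJECT_0))
```

The S gate is a useful case: it is not its own inverse (S applied twice is a Z gate, not the identity), yet it is still exactly reversible via its conjugate transpose.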

A more in-depth and technical exploration of reversible and quantum computing will be the subject of a future article.

Another option is some form of chemical computation, possibly taking some cues from biology. Chemical interactions can come very close to Landauer’s Limit if we’re storing and manipulating data on a molecular level. Making this computation general-purpose, programmable, error-resistant, etc. would, however, be a serious engineering challenge. I could see this as the most likely way around Moore’s Wall, but I expect it will be many decades before we can build up the theoretical foundation to wield such tools effectively.

Given the many decades, perhaps centuries, that it may take to circumvent the wall, it’s likely that other exotic computing technologies will be discovered that we can’t even imagine yet. Science fiction and research papers have imagined various forms of quark plasma or black hole computers in the past, though these in particular seem pretty far-fetched.

If a black swan computing paradigm emerges, my bet would be on the technology being unlocked more by a breakthrough in an algorithmic paradigm, making some seemingly useless, noisy system suddenly viable as a computing substrate. I have some rough ideas on where we might start with that (a discussion for another time), but I wouldn’t expect them to get to that kind of utility anytime soon.

I expect, though, that the strangest part of Moore’s Wall will be the psychological and cultural impact it has on the computing industry. How will it change us to know that a second computing revolution will happen someday, but that our current computers, as powerful as they are, have all but guaranteed it won’t happen for a very long time?

In the meantime, though, and to end on an optimistic note, perhaps there’s a silver lining here. We won’t be getting performance for free again any time soon, which will force us to go through what we’ve built over the past few decades and start cleaning it up and optimizing it. In many places where we’ve been lazy, we will now have to develop a much deeper understanding of computation, which will bring its own kind of computer revolution, one of a different and perhaps much stranger kind.

I’m back from my break of the past few weeks. I was planning to have a more ambitious article out this week, showing off some cool visualizations, but coding always takes longer than planned, and all I could finish on time was about half of just one of the several plots I wanted to make. That article might take a couple more weeks.

At the very least, you can expect a lot of cool visualizations this year, it’ll just be a little longer before they really start showing up.

The past few weeks have been a bit busy on my end: writing lots of code, starting a new media project with some cool people, and splitting up with my cofounder over some creative differences (he and I are both still building our hardware, we’re just doing it separately now).

I’ll hopefully give out some more information on the media project over the next couple months - it’s something I’ve wanted to do for a while and now I’ll not just get a chance to do it, but I’ll actually be getting paid a salary. I’m also going to need to put together a writing team eventually. I’m excited about it.

Luckily most of my projects have heavy overlap, so nothing is a real distraction and the code and ideas just get reused elsewhere in a different context. It’s just a different path to getting there, and maybe accomplishing a few other cool things along the way.

Thank you for reading this week’s article, and I hope you’re as excited for the next year as I am!