Some Thoughts on Future Programming Tools

Or, The Lure of Graphical Languages pt 2

After my previous article, I received messages and emails from multiple people giving their responses and perspectives, so I think it’s worth elaborating a bit more, and going into a little more depth on the more positive and interesting ideas around the future of programming that I have.

This certainly won’t be an exhaustive list, mostly just elaborations on ideas adjacent to last week’s article on graphical programming. I won’t be covering AI tools here for example, that will be a separate article in the future.

Some Legitimate Wins for Graphical Programming

Graphical programming has seen some success in education. Part of that benefit seems to be from reducing the intimidation factor that text-based programming seems to have on non-programmers. I don’t find this advantage particularly interesting from a technical perspective, as it’s more of a cultural issue.

A more interesting case that graphical programming sometimes can handle well, especially as an educational tool, is short feedback loops and interactivity. If the visual aspect of the program is primarily focused more on the output rather than the code itself, that can be more useful.

One reader who reached out mentioned their fondness for the Logo language, though granted - Logo is a text-based language focused on generating graphics rather than a purely graphical language.

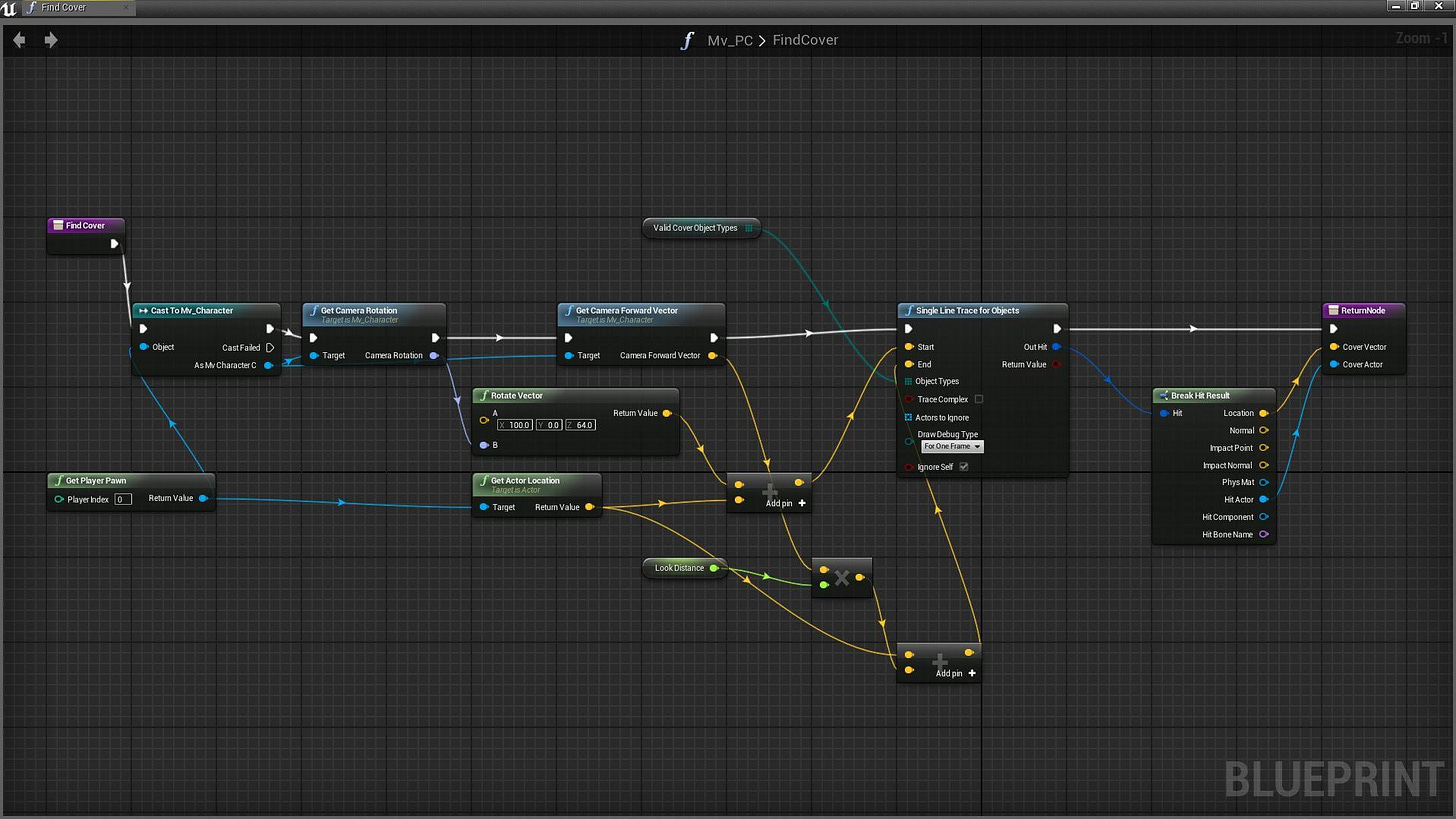

Some game engines have also been using graphical scripting and shader languages. These also seem to be reasonably successful, though note that they are a much more constrained situation than normal programming languages.

Making your programming language a bunch of boxes and lines works better for simple shader functions meant to be written primarily by artists rather than a full programming language. The limited size prevents such graphical code from becoming an incomprehensible hairball.

Interactivity

Logo and perhaps some similar languages clearly get the interactivity part correct - getting more feedback from programs is definitely something that deserves a lot more engineering attention.

Some interactivity already exists in programming languages, and has for a long time. REPLs have been a common feature in many functional languages for decades, though unfortunately they don’t scale well to large programs.

There has been some discussion in the past few years, popularized by people like Bret Victor, of more advanced alternatives to REPLs. Victor’s specific idea is print out the contents of variables while code is being written in a special interactive window.

This definitely seems like it would be useful for some simple applications. Bret’s demo here shows it detecting a lot of low-hanging fruit while he writes his demo code.

One critique I’ll give of this idea is that it doesn’t clearly scale well beyond printing numbers and small strings, and the mechanisms Victor shows for dealing with loops probably won’t scale well past a few iterations.

Granted, similar ideas to this are used pretty regularly in notebook systems like Mathematica and Jupyter. Those systems are clearly much more successful and have been around much longer than Victor’s version - the meaningful difference is that notebooks only print the data you specifically request to be printed, and offer proper visualization tools for many common types of data (though probably are trickier to use if you have your own custom data structures). Victor’s version, which tries to print everything, is trying to cover many hard cases that notebooks choose not to cover, and doesn’t have many clear ideas about how to scale to the needs of these harder cases.

Victor also has some other ideas with flashier visualizations and interactive tools, though I’m not convinced any of them really generalize well. Maybe you can have a nice tool for visualizing circuit properties or animating simple videos like he shows in that demo. I haven’t seen any ideas from him however that seem all that useful for more general code.

Galois Emulation?

All of these interactive emulation systems share a common limitation; while they’re great for rapidly debugging the behavior of a program on typical inputs, many bugs are the result of rare inputs that break code in unexpected ways. These rare inputs can be exceptionally difficult to find - mathematically speaking, doing so is NP-complete (or even undecideable when loops are involved).

For reference, NP-complete means it’s at least as hard as breaking cryptography in the worst case. In fact, if you write code designed to break when the correct key is passed into a cryptographic function and then ask when it breaks, by definition you have a debugging problem that is as hard as breaking that cryptographic algorithm.

If there are loops involved, especially particularly tricky ones, then it’s of course much harder than cryptography and may in fact be impossible to solve.

While this all may seem like very good reasons why such limitations must exist in our tools, the reality is of course that NP problems are some of the most bizarre things in modern mathematics, with behavior in practice that is extremely different from their behavior in theory.

Most code is meant to actually be understood by humans, and that property results alone in a lot of seemingly insurmountable problems actually being easy in practice with the right approach.

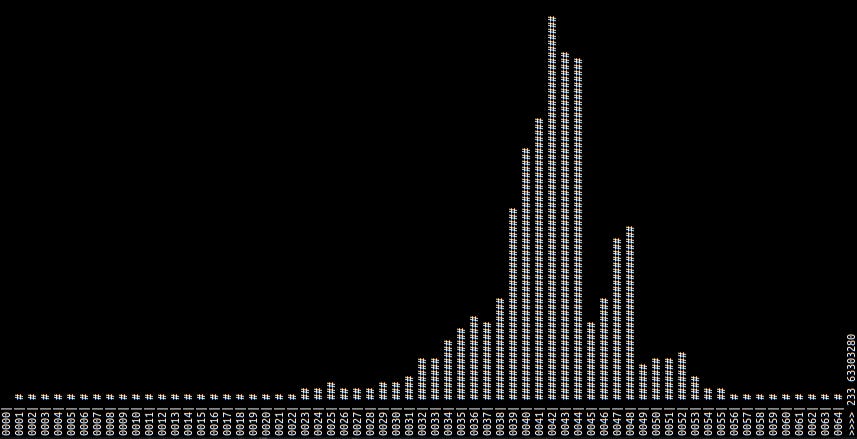

I actually just wrote a SAT solver a couple weeks ago, SAT solvers being one of the kinds of tools available for solving these kinds of hard problems. It’s not even a very efficient solver (there’s no unit propagation or CDCL, though I have some plans for more novel optimizations), and yet it’s able to solve many 64-bit random SAT problems within a few seconds while running on a single core on my laptop.

Put another way, it’s sifting through a search space with 1.84*10^19 values and reliably finding a needle-in-a-haystack solution in only a few billion (10^9) CPU instructions. This is somewhere on the order of 10 billion times faster than brute force, while still technically being a “random guessing” algorithm. Making SAT solvers faster is just about learning about the problem as you go to make smarter guesses.

I’m specifically running this on random SAT instances with a clause count chosen specifically to maximize difficulty. Random SAT tends to be much more difficult than many “real-world” SAT problems.

SAT solvers (and other exponential-time algorithms in general) are one of those fun cases where you can make claims like “this optimization made my code a billion times faster” and not be exaggerating.

In practice, SAT solvers can be a bit too precise for many common code correctness problems. Converting code into something they can reason about usually encoding every individual logic gate and wire necessary to implement the function in circuitry, and they focus on every minute, low-level detail.

SMT solvers are another tool available, which basically amount to a toolbox of higher-level logic tools that can more efficiently solve many problems, with the option to fall back on a standard SAT solver in the case they aren’t sufficient.

Another tool, particularly well-suited for looping code, is Abstract Interpretation.

In both SMT solvers and Abstract Interpreters, there is a common tool used to approximate large possibility spaces called a Galois Connection. Galois connections can be thought of as a tool for translating between a high-resolution and a low-resolution model of a problem, or even between different low-resolution models that invest their resolution differently. They are an extremely powerful tool for making many hard problems tractable.

They also however are based on a type of mathematical structure called a lattice, which as I’ve pointed out before are actually mathematically almost identical to topological spaces, strongly implying there might be a way of meaningfully visualizing them.

So imagine if instead of the Bret Victor approach of visualizing a single set of inputs flowing through your function, you could visualize a representation of all possible paths at once? This seems like it should be possible, and most of these Galois connections are not as complex as they may sound from my description here.

I’m not quite sure what this looks like yet, but the math at least looks promising.

I do plan to write an article on Galois connections at some point - luckily some of my work on Bzo right now involves writing my own SMT solver, and while it’s still pretty early in development I think I’ll have a lot of interesting stuff to say in a couple months.

Searching “How to write an SMT solver” tends to give few helpful results beyond “you should never write your own SMT solver, it’s too hard, just use Z3”, so I figure I might be able to write a few authoritative articles on the subject.

Non-Textual Programming Paradigms

I’ve written before about non-textual programming, mostly in the context of exploring approaches to programming that are not well-suited for a text-based medium in the first place.

If you want to edit an image, you open it up in a program like photoshop, not a text editor. Not that it’s not possible to edit images in a text editor, but images are very poorly-suited for human-legible text representations.

There are a lot of cool ideas in theoretical neuroscience, with plenty of unique and desirable properties that effectively come for free with the paradigm. It would be great to be able to engineer such systems, but that's a paradigm where the primitives are basically overlapping spheres in 1000+ dimensional sparse bitvector spaces. It's hard to imagine how to make that legible in a text format, but it's at least easier to imagine a more graphical approach being viable, albeit with the need for some creative ideas first.

Machine learning is arguably in a similar situation, where most of what actually defines an ML model is a giant collection of data files and only a tiny amount of text-based code. Under the hood, neural networks are incredibly geometric systems. In the long term, engineering with them (especially if we want to do so in a way that makes safety guarantees about their behavior) might require some more geometric interfaces.

Foveal Optimization

I often find that my most interesting ideas in my articles are not always explained as well as I’d like, and I usually find much better ways of explaining things in the days after I publish an article.

In my last article, I tried to discuss an idea about how our modern understanding of software interfaces seems deeply ignorant of how the human visual system works and how to interface with it effectively, as well as that things like syntax highlighting and code indentation are in fact a few of the kinds of things we should be doing more of that have somehow made their way into common practice. I had a friend reach out with some questions, not fully getting my point.

I’ll end tonight’s article with a quote from the conversation that seemed to clarify it pretty well for him:

Maybe another way of putting my point about graphical programming is that part of the problem is that you're trying to optimize for how quickly the eye can access the information it needs. The only part of your eye that can read text on your screen (assuming you don't have a gigantic font) is the fovea, which only can look at a tiny portion of it at a time. So the real problem isn't just dumping a bunch of text to the screen, but making the data legible to the low-resolution peripheral vision to minimize the amount of saccades to locate important information.

If you could blur the screen so that the text is all unreadable and still be able to quickly and reliably guess what's a loop, what's an if/else or switch statement, where each block of code starts/ends, where the loop header/branch condition is, etc., you're going to have much more readable code than if you don't have that.

That's what indentation and syntax highlighting are ACTUALLY doing.

And part of my point is that we could probably push things much further in that direction, using other tricks like leading lines to make it even more efficient to navigate code, as well as finding ways to recognize structure on even larger and more zoomed-out scales.

I definitely appreciate feedback on my articles, though don’t always get much, so getting multiple people reaching out after this last one with lengthy responses really caught me off guard. Thanks. I think I’ll remove the paid-subscribers-only requirement for the comments on my articles. That means more feedback for me, and makes it easier for me to clarify anything I don’t fully get across the first time.

Thank you for reading!

I think smalltalks and Pharo in particular do a good job of this. The introspection, debugging and visualization are top notch. The way it shows functions associated with classes (and the way it separates class side variables from instance side) offers an even higher degree of clarity. The hierarchy of inherited objects and being able to jump to variable, class and function definitions both user and system defined is awesome. The big problem with it though is that it is very OOP in a way that is still just a confusing as deeply inherited, and overloaded OOP can be. And part of me wonders if the reason Pharo has such a great tools for introspecting is simply to make up for the deficiencies of OOP. Don’t get me wrong though, I love the language, and Pharo and Smalltalk put other languages to shame in terms of it’s debuggability and introspection which it has had since the The Smalltalk 76 demo Alan Kay gave to Steve Jobs at Xerox park. And I’m not sure how you could do the things Smalltalk does without everything being an object, message passing, and late binding everything.