Some Thoughts on Neuralink

I’ve been meaning to write an article closely inspecting Neuralink and its technology for a while. After their new presentation (video embed below if you have a couple hours to spare) a few weeks ago, this seems like a good time for a detailed essay.

General Overview

Neuralink’s overall goal is to build devices, eventually fit for mass consumers, that increase the amount of bandwidth between computers and the brain. The ultimate goal is for humans to “merge with artificial intelligence.” Many intermediate goals such as “curing depression/paralysis/every other neurological disorder” are also often mentioned (usually by Elon).

Elon is evidently not heavily involved with Neuralink, and I generally don’t take much he says about the tech very seriously. He is mostly just a glorified cheerleader for the company, and I have yet to see much from him that indicates his knowledge of the brain is much deeper than what he might read in popsci and scifi, unfortunately.

This is certainly no surprise, as Elon is already very busy with his work at Tesla, SpaceX, and Twitter, not to mention his other side projects like the Boring Company. If I listen to an interview with Elon talking about SpaceX and all the complex tradeoffs with reusable rockets, it’s clear he knows what he’s talking about. With Neuralink, I don’t get that kind of impression at all.

The current implant is a device about the size of a quarter meant to be implanted into the skull with a robot that the Neuralink team often compares to a sewing machine. The implant has a number of very thin wires, studded with electrodes, that are implanted into the surface of the brain. There are over 1000 channels that can be recorded from in each implant, with analog sampling around 20kHz.

Because brain activity is very sparse, the effective number of neurons that can be tracked by a single Neuralink implant is much higher than the number of electrodes. A single neuron spike can be detected across multiple electrodes, and triangulated to a specific location. Differentiating multiple overlapping spikes can be done by comparing the precise shapes of each spike, which is why sampling is done much faster than the brain’s effective “clock speed” (20kHz vs 0.25kHz).
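To make the sampling-rate point a bit more concrete, here is a minimal Python sketch of threshold-based spike detection on a single channel. It is a toy illustration and not Neuralink’s actual pipeline: the threshold, the 1 ms refractory window, the snippet length, and the synthetic trace are all assumptions picked for the example. Only the 20kHz sampling rate comes from the presentation.

```python
SAMPLE_RATE_HZ = 20_000  # sampling rate quoted above


def detect_spikes(samples, threshold, refractory_s=0.001):
    """Return the indices where |signal| reaches the threshold.

    Crossings that follow a detection within the refractory window
    (assumed to be 1 ms) are treated as the same spike and skipped.
    """
    refractory = round(refractory_s * SAMPLE_RATE_HZ)
    spikes = []
    last = None
    for i, value in enumerate(samples):
        if abs(value) >= threshold and (last is None or i - last >= refractory):
            spikes.append(i)
            last = i
    return spikes


def extract_waveform(samples, index, before=5, after=15):
    """Cut out the snippet around a detection so spike shapes can be compared."""
    start = max(0, index - before)
    return samples[start:index + after]


# Synthetic trace: 10 ms of flat baseline with two brief negative deflections.
trace = [0.0] * 200
for start in (40, 140):
    trace[start:start + 4] = [-2.0, -5.0, -3.0, -1.0]

spike_indices = detect_spikes(trace, threshold=4.0)
spike_times_ms = [1000 * i / SAMPLE_RATE_HZ for i in spike_indices]
waveforms = [extract_waveform(trace, i) for i in spike_indices]

print(spike_indices)      # sample indices of the detected spikes
print(spike_times_ms)     # the same detections in milliseconds
print(len(waveforms[0]))  # samples available to describe one spike's shape
```

At 20kHz a 1 ms window holds 20 samples, which is what leaves room to compare shapes. At 0.25kHz the same window would hold less than one sample, so there would be no shape to compare at all.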

The current limitation of the technology seems to be not the number of electrodes, but rather the amount of compute that can be performed to extract the raw neural firing patterns from the samples measured by the electrodes. The more electrodes, the more compute is necessary. More compute means shorter battery life and more heat output, and the brain is very sensitive to excess heat.

The electrodes are capable of both reading and writing to the brain; writing can be performed by sending a current through an electrode to stimulate nearby cells indiscriminately. In the Q&A after the presentation, some suggestions are made that writing could be made more precise in the future, though right now it is just triggering whichever neurons are next to the electrode. This is like trying to type on a keyboard while wearing boxing gloves.

However, compared to alternative brain implants, Neuralink’s implant is still extremely impressive. The raw bandwidth is still an order of magnitude higher than alternatives, and has a fairly straightforward scaling path for an extra order of magnitude or two. The implantation process, based on very thin electrodes that are carefully inserted to avoid damaging blood vessels, has the potential to be a massive improvement, as the electrodes used in other brain implants are often thick, rigid, and inserted imprecisely, causing scarring and localized brain damage over time.

Highlights from the Recent “Show and Tell”

The Dura

One hurdle that Neuralink spends a fair bit of time discussing is implanting through the Dura Mater. The dura can be thought of as a kind of thick sac that holds the brain and its surrounding fluids. It helps maintain the blood-brain barrier, and is important for preventing infections from spreading to the brain.

Neuralink historically has simply removed and discarded a piece of the dura during implantation in order to get easier access to the brain and better visibility of all the blood vessels they’re trying to avoid puncturing. This very likely is a major contributor to the concerning health complications of their test animals. After removing the dura, scar tissue grows back in its place which disrupts the implant, creating more issues (especially when attempting to remove or upgrade the implant and all of its electrodes).

Neuralink is apparently now going to great lengths to avoid removing the dura, and to instead implant electrodes straight through it. This appears to significantly complicate the implantation process. They spend a lot of time talking about the need to rapidly iterate on needle design, and the significant changes they are making to the robot’s vision system, which must now not only avoid hitting blood vessels, but also track these blood vessels through the somewhat opaque dura.

I expect this will prove to be one of the biggest technical hurdles Neuralink faces in the near future, and it will likely make or break the company’s viability for long-term human use. No one wants a brain infection.

Spinal Implantation and Vision

Neuralink’s near-term goals are mostly based around producing an FDA-approved device as a treatment for disabilities. They spend time talking about implanting not just into the brain, but also into the spinal cord.

I don’t see any immediate reasons why it wouldn’t be possible to not only restore motor function in people with spinal injuries, but to also restore their sense of touch.

I can’t imagine that the thousands of electrodes in a few implants will be a perfect substitute for the millions of nerve fibers in the spinal cord, particularly with the crude precision of Neuralink’s write functionality. With that said, it’s still a lot better than nothing.

They also spend some time talking about curing blindness. The method they propose for doing this is fairly sound; just feed in a grid of “pixels” into the visual cortex to convey an image. Existing experimental neuroscience already shows that stimulating the visual cortex creates the perception of localized flashes of light.

The problem with this approach is not whether or not it will work, but rather whether or not there’s a similarly easy alternative that works a lot better. While I will discuss some more technical critiques later in the article, I’d like to point out that the approach they describe is very different from how the visual system actually works.

The visual system in humans is not a mere grid of pixels as many people in tech tend to view it. Rather, the eye consists of a very tiny, high-resolution region called the fovea surrounded by a large, low-resolution peripheral vision. Vision is not a matter of streaming in pixels, but rather of rapidly moving the fovea around to get snapshots of the fine details of different small pieces of an image, and using the peripheral vision to decide where to look next.

One advantage of this is that it is just a dramatically more efficient use of the brain; the vast majority of pixels in an image are relatively repetitive textures or irrelevant background details. Being able to focus the majority of resources on a small, high-information-density subset of an image is just much more efficient.

Another potential benefit is that it helps embed more spatial information into vision. In general, neural networks tend to recognize textures as opposed to shapes. It’s hard to say at this point, but I would not be surprised if the brain would have a similar pitfall without the information that comes from needing to track the locations of objects more explicitly, as foveated vision requires.

It’s also worth noting that many of the basic principles of visual art are based around techniques with composition, color, leading lines, etc. that just boil down to different ways to efficiently guide the fovea around an image to points of interest. Giving someone vision that ignores the way that the human visual system works wouldn’t just be an inefficient use of the visual circuitry that already exists, but it would also likely render this visual language that humans use to convey meaning through images largely incomprehensible.

For many things humans consider visually beautiful, both manmade and natural, a major component of this beauty is the existence of smooth curves that are easy for the eye to follow and that guide the eye to points of interest. Imagine gaining the ability to see, but not the ability to instinctively follow these visual maps. Imagine how much less beautiful the world would be.

Neuralink in their recent presentation explicitly talks about the fovea and the structure of the visual field, but then proceeds to describe their approach of streaming pixels into the visual cortex in a way that makes no mention of eye movements. Implementing foveated vision with Neuralink could likely be reduced to just a few hundred lines of code: take a high-resolution image, read some pixels from a small window, read a lower-resolution version of the area surrounding the window, generate an output image by combining the two that’s fed into the brain, and then move the window around the image using some data pulled from the oculomotor cortex.
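The image-processing half of that recipe can be sketched in a few lines of Python. This is only a rough illustration under some loud assumptions: the image is a plain grid of grayscale values, the “low-resolution surround” is made by block averaging, and the gaze position is simply passed in as a parameter. Actually decoding where the eye wants to look from neural data is the hard part, and nothing here attempts it. The function names are made up for this example.

```python
def downsample(image, factor):
    """Average non-overlapping factor x factor blocks.

    Assumes the image height and width are multiples of `factor`.
    """
    height, width = len(image), len(image[0])
    coarse = []
    for top in range(0, height, factor):
        coarse_row = []
        for left in range(0, width, factor):
            block = [
                image[y][x]
                for y in range(top, top + factor)
                for x in range(left, left + factor)
            ]
            coarse_row.append(sum(block) / len(block))
        coarse.append(coarse_row)
    return coarse


def foveate(image, gaze_row, gaze_col, fovea_radius, factor):
    """Combine a full-resolution window around the gaze point with a
    block-averaged (low-resolution) version of everything else."""
    height, width = len(image), len(image[0])
    coarse = downsample(image, factor)

    # Start with the blurry periphery everywhere...
    frame = [
        [coarse[y // factor][x // factor] for x in range(width)]
        for y in range(height)
    ]

    # ...then paste the sharp pixels back in around the gaze point,
    # clamping the window to the image borders.
    for y in range(max(0, gaze_row - fovea_radius),
                   min(height, gaze_row + fovea_radius + 1)):
        for x in range(max(0, gaze_col - fovea_radius),
                       min(width, gaze_col + fovea_radius + 1)):
            frame[y][x] = image[y][x]
    return frame


# Toy 8x8 "image" where each pixel's value encodes its position.
image = [[row * 8 + col for col in range(8)] for row in range(8)]

# The gaze position would come from decoded eye-movement signals;
# here it is just hard-coded.
frame = foveate(image, gaze_row=2, gaze_col=2, fovea_radius=1, factor=4)
for row in frame:
    print(row)
```

Moving the window is then just a matter of calling `foveate` again with a new gaze position each time the decoded eye-movement signal changes.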

It would be a serious shame if Neuralink gives their blind customers an unnecessarily ugly and hollow experience of vision simply because their engineers don’t appreciate the differences between the human visual system and a computer screen. I hope they do recognize this and just were oversimplifying their explanation.

Device Longevity

Some time is spent talking about device longevity. One of my major concerns about Neuralink has always been my hesitancy to implant a lithium-ion battery that has a tendency to burst into flames upon contact with water directly into the warm, wet environment of my most vital organ.

I didn’t notice any discussion from Neuralink explicitly about the battery, though they did talk a bit about trying to avoid the buildup of humidity inside the device. It’s good to hear that they are at least thinking of this problem.

Neural Decoding

One of the most critical problems Neuralink must solve in order to be successful is the translation between the familiar and well-understood data representations inside computers and the comparatively alien and poorly-understood representations that the brain uses.

The approach they describe in their presentation is to train a deep neural network to extract this information. This is not only a very naive approach that teaches us absolutely nothing about how the brain works and how to properly interface with it, but Neuralink themselves acknowledge that it doesn’t even work very well, and that the brain changes so quickly on a daily basis that deep learning simply can’t keep up very well.

They show some demos of a monkey being able to type text and move a cursor around, which Elon cites as equivalent to about 10 bits per second of usable information. Compare that to the tens to hundreds of millions of bits per second they’re reading from their thousands of electrodes.
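For a rough sense of where that comparison comes from, here is the back-of-the-envelope arithmetic in Python. The channel count and sampling rate are the figures mentioned earlier, with 1024 standing in for “over 1000 channels”. The 10 bits per sample is my own assumption about ADC resolution, so treat the result as an order-of-magnitude estimate of raw sample bandwidth, not a measurement of how much information the brain is actually conveying.

```python
def raw_bits_per_second(channels, sample_rate_hz, bits_per_sample):
    """Raw data rate coming off the electrodes before any processing."""
    return channels * sample_rate_hz * bits_per_sample


CHANNELS = 1024          # "over 1000 channels" per implant; 1024 is assumed
SAMPLE_RATE_HZ = 20_000  # ~20kHz analog sampling
BITS_PER_SAMPLE = 10     # assumed ADC resolution, not stated in the presentation
DECODED_BITS_PER_S = 10  # usable output rate cited for the typing/cursor demo

raw = raw_bits_per_second(CHANNELS, SAMPLE_RATE_HZ, BITS_PER_SAMPLE)
ratio = raw / DECODED_BITS_PER_S

print(f"raw input:    {raw:,} bits/s")
print(f"decoded:      {DECODED_BITS_PER_S} bits/s")
print(f"raw/decoded:  {ratio:,.0f}x")
```

With these assumptions a single implant comes out at roughly 200 million bits per second of raw samples, some seven orders of magnitude above the decoded output.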

The proper solution to this problem is to more seriously study how the brain works in order to read its activity in a form that’s closer to how it naturally computes, not to force some human-oriented model onto the brain and celebrate when it reaches a millionth of its potential.

Toward the end of the presentation, Elon suggests that Neuralink’s role is merely to offer hardware and that other companies and labs can worry about building applications. This is perhaps reasonable to some degree, though in this case I think we should expect Neuralink’s own future work in this area to be pretty underwhelming, achieving only a tiny fraction of what the hardware theoretically might offer.

Some More Technical Critiques

Deep Brain Access and the Thalamus

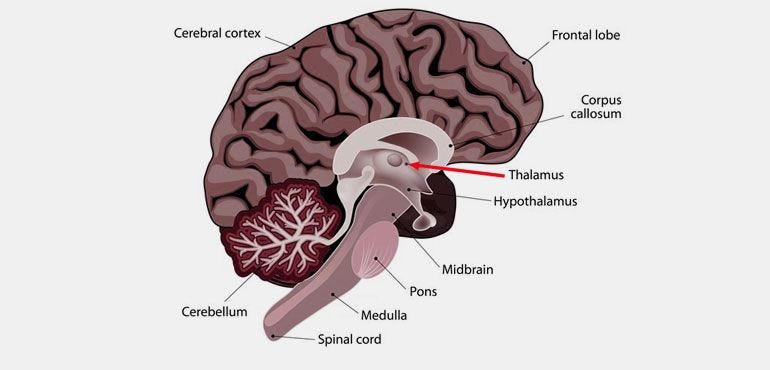

Neuralink at the moment is primarily focused on interfacing with the cortex. This is a pretty easy decision; the cortex is the most accessible part of the brain, and where most of the interesting stuff is going on. Unfortunately there are many other important brain regions that would be very useful to connect to that are much less accessible.

For example, many of the brain disorders Elon likes to mention as things that could be “cured” by Neuralink (Parkinson’s, depression, etc.) heavily involve parts of the limbic system such as the striatum and hypothalamus. These are located deep inside the brain, and are not something I see being easily accessible to Neuralink any time soon.

There definitely seems to be a gap in how well-understood these brain regions are compared to the cortex; there aren’t really any good ways of accessing them in a living brain. Deep brain stimulation is an existing option, but one with a high probability of complications.

If Neuralink can someday manage to implant high-bandwidth interfaces into deep brain regions without any complications or brain damage, I will be seriously impressed. Being able to safely remove and upgrade these electrodes without shredding the brain in the process will be even more impressive.

This is discussed briefly in the Q&A after the presentation; Neuralink seems very interested in deep brain access, and is considering using ultrasound to locate blood vessels, or alternatively “just making electrodes thin enough that they can puncture blood vessels without damaging them.” Controlling the movement of electrodes this deep in the brain seems difficult to me, but maybe they have some creative tricks up their sleeve.

With all that said, even if Neuralink can achieve this at some point, it’s likely a long way off.

One region I expect could become relevant pretty quickly once Neuralink starts gaining significant human adoption is the thalamus. The thalamus is a brain region deep at the center of the brain. Nearly all sensory inputs (smell being the main exception) make a stop in the thalamus before being forwarded to the cortex, and all the primary pathways between cortical regions involve bi-directional feedback loops with the thalamus. Its precise functioning is not terribly well-understood (after all, it’s very difficult to get to!), but it is generally believed to play a critical role in attention and consciousness. One hypothesis about its function is that it acts as a kind of switchboard, deciding when to allow communication to happen and when to block it off.

Of course, by virtue of being such a central hub for the brain, damage to the thalamus can often leave the person completely brain dead. The advice given to police and soldiers on which part of the head to shoot at to maximize the odds of killing someone is often centered around either hitting the thalamus or the brain stem (which controls breathing, heartbeat, etc). Because it’s so much smaller and more centrally located than the cortex while being highly connected to every part of the cortex, any unintentional damage to it could result in widespread malfunctions amplified across the cortex. Neuralink will have to be extra careful sticking their electrodes here.

If we assume that the model of the thalamus as an attention system is correct, and that the brain doesn’t just decide for absolutely no good reason to route all incoming sensory information through it, we can probably guess that any signals written directly into the cortex by a Neuralink device likely are going to produce sensations that are difficult to ignore no matter how much effort you put into trying to shift your attention elsewhere.

Neuralink has said in the past that they want to ban advertising on any future app store they have; this seems difficult to enforce but I sure hope they find some way to do so! Ads that can completely bypass your brain’s mechanisms to shift your attention away sound like a dystopian disaster.

This of course isn’t something you’d expect to find out about from animal trials. It sounds like these animal trials are mostly just for a few minutes at a time, and a monkey or pig isn’t going to tell the Neuralink team “hey, these new sensations I’m getting during this test are weird and are messing with my ability to focus”.

At the very least, I expect that Neuralink may find it necessary to create some kind of virtual thalamus for any information they feed into the brain, though doing this well will likely require getting a much deeper understanding of the thalamus, as well as of how the cortex controls it.

Cortical Layers

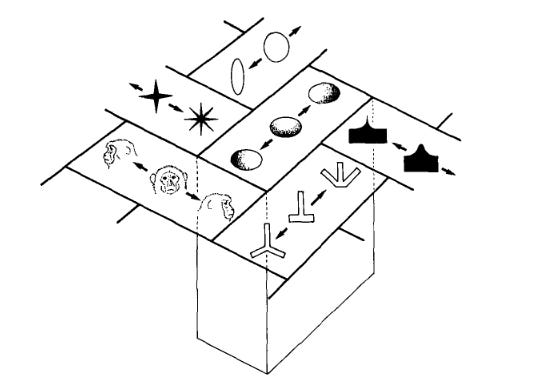

The cortex is not a uniform collection of cells, but rather roughly six different layers that serve different functions.

Layer 4 tends to be the primary input layer, while layer 5 tends to be the output layer. Layer 6 tends to connect to regions like the thalamus and claustrum. Layers 2 and 3 seem to be where complex modeling happens. Layer 1 has very few actual neurons and is mostly just a dense web of long-range connections.

The majority of resolution for a single wire in an electrode is along the vertical dimension, meaning most of the data that Neuralink is reading spans across layers. A Neuralink implant is getting information from all of these layers, getting the state of computations that each specialized layer is performing. However, when reading data out of the cortex with a particular goal in mind, most of the data that you actually care about will likely be confined to just a subset of these layers, meaning that most of the data your implant is reading may be irrelevant.

Another problem comes from writing signals into the brain. If you decide you want to recreate vision and use every electrode as an individual pixel, the exact effect you get might vary from layer to layer. You might get something recognizable as a pixel if you stimulate layers 2, 3, or 4, but stimulating layers 5 or 6 might have very different effects, like modulating the part of the thalamus that would normally be forwarding data from the retina, but presumably isn’t doing much at all in this hypothetical blind patient.

In the vision discussion, a Neuralink engineer mentions how a future vision implant might produce 32,000 pixels. I wouldn’t be surprised if they only get half that in practice.

Cortical Locality and “Conceptual Telepathy”

In the long term, Neuralink will likely want to start expanding coverage to higher-level brain regions such as the Inferior Temporal cortex (IT).

Such brain regions, sometimes called associative regions, are the kinds of places where the brain forms its models of abstract concepts. If the eventual goal is to get the “conceptual telepathy” that Elon has promised in the past, this is likely an important step.

The problem however is that you can’t just stick some wires into IT and read someone’s thoughts. These associative regions are often large, as they have to store all of your vast, encyclopedic knowledge.

The brain also likes clustering these models, forming a kind of patchwork of brain models. That knowledge Elon wants to access for his conceptual telepathy isn’t in just one place; it’s spread across many different places, and reading from just one region is only going to give you whichever cluster of concepts happens to be stored there.

Granted, there’s a large degree to which every part of the brain is talking to every other part of the brain, and so it should be theoretically possible to extract some fairly general-purpose conceptual information from some electrodes almost anywhere in IT. Of course, you could probably also reconstruct some of that information from the gustatory cortex if you can read from enough neurons. Like I said, every part of the cortex talks to every other to some extent.

Exactly what data you get though, how much you get, and the quality of that data, is still heavily based on where the implant is. You might be able to reconstruct a rough idea of what someone is thinking from an IT implant, but it might be that 80% of the neurons you can read from just encode detailed models of horse breeds, traumatic childhood memories, and US state capitals. If the implant had been placed a few millimeters to the left, you would have been able to access the small patch of cortex that stores the remnants of what you learned in high school biology class, right next to some knowledge of how to drive a car with a manual transmission.

If you implant this into someone else’s brain, even into the exact same part of the same associative cortical region, the exact map of brain models may look completely different. There do seem to be some regularities and patterns across brains, but which models are in each person’s head and how big/detailed each one is is going to vary heavily depending on a person’s individual experiences and knowledge. You won’t find any models of giraffes in the brain of someone who’s never seen or heard of one before.

Reading this information and then translating it for someone else’s brain is not something I expect to be anywhere close to easy.

Another important factor is the Basal Ganglia circuit, which is important in reinforcement learning. Every part of the brain involved in decision making and motor movement forms a feedback loop with this circuit, which helps decide whether or not to make an action based on how much dopamine similar actions have produced in the past. Pulling data directly from IT, which is a brain region that is not part of one of these Basal Ganglia loops, seems like a good way to reduce the amount of actual control people have over what they say through this conceptual telepathy.

If you want any capacity to be careful about what you say to avoid getting yourself into trouble with a stray thought, I expect broadcasting a high-bandwidth feed from IT is the last thing you want. Some portion of the frontal lobe is likely a much better option, though that will likely result in a lower-resolution encoding of these conceptual maps.

Sensory Substitution

In the long term, something like a Neuralink implant is probably the best way to get very high-bandwidth data in and out of the brain. However, with the exception of treating disabilities, the number of disadvantages and difficulties may make such an implant much less appealing for ordinary consumers.

Once Neuralink can offer millions of electrodes across larger portions of the cortex, it might be more compelling. That big of a change in scale would likely require a completely different approach, however, and I expect that’s likely several decades away.

In the meantime, Sensory Substitution and Sensory Augmentation are perfectly viable alternatives, ones that don’t do questionable things like bypassing the brain’s attention mechanisms, and that can exploit the brain’s pre-existing high-bandwidth inputs.

Streaming data out of the brain is a bit trickier, though I’ve argued before that that’s what VR/AR would be good for - something much more compelling to me than “immersion”. The advantage isn’t much - I’d figure maybe just doubling or tripling bits/second - but that’s not insignificant and is worth the effort in the meantime.

The basic concept behind Sensory Substitution is to digitally replicate some existing sense, such as a video or audio feed, and convert it into another. This is useful for people who have lost or lack one of their senses, and is a much simpler and cheaper option than brain surgery.

One cool project is the vOICe headset, which converts images into audio to help blind people see. Another neat project is the Neosensory Buzz, a wristband that converts audio into vibrations to help the deaf hear. Neither of these options offers the full range and fidelity of the original senses, but it will likely be a long time before Neuralink can offer this either.

Neuralink will be more necessary for those who want to fix their paralysis of course.

For general consumers without disabilities, Sensory Augmentation is another option. Sensory Augmentation is like Sensory Substitution, but where the new sense that is gained is just some arbitrary data, with no need for a sensory organ or brain region to have evolved for it. Instead, the brain’s natural plasticity does all the hard work.

This may sound exotic and far-fetched, but it’s actually pretty mundane. Data visualization is one of the most valuable technologies we have for conveying complex information in our modern technological world, whether that be about transistor scaling, geographical political distributions, or the spread of a pandemic. It’s also a pretty clear example of how valuable it can be to convert arbitrary data into sensory experiences.

Another even more mundane example is writing. Writing has formed the backbone of complex civilization for thousands of years, and works by translating meaning that is normally conveyed through sound into something that can be parsed by the visual system, as well as having other nice properties like the ability to be more easily stored for the future.

Many people report even hearing a voice in their head, dictating text as they read it; this is simply the brain adapting to these new signals and learning to interpret them as effectively a new sense, in much the same way that the brain of an infant learns to interpret signals incoming from the retina as a colorful, 3-dimensional world. There are entirely new worlds of sensory experiences, ways of streaming useful data directly into the brain, and the existence of this voice in your head when you read is proof you don’t need a brain implant to do this; maybe just a screen, a haptic device, or some headphones.

Thanks for reading this article, I hope everyone’s enjoying their holidays or is at least keeping warm and staying safe during this unusually cold winter. With most of my family based in Wisconsin, this has certainly been a pretty interesting holiday season.

I’ll be taking the next couple weeks off from publishing, and will be back to regularly scheduled articles sometime in mid-January. I’m hoping to start improving the quality of the content here throughout 2023 with some better graphics, more technical deep-dives on esoteric subjects, some software projects readers can play with, and some original research.

Merry Christmas!