The Alien Technology in Plain Sight

Let's Reverse Engineer Biology and Deep Learning first

There is a UFO craze going on right now. The Pentagon is even establishing a new department just for investigating sightings.

There’s also a common idea proposed by people like Eric Weinstein and Deep Prasad that if we could just get our hands on some UFO materials, or at least enough declassified military footage of them, we could reverse-engineer whatever strange technologies they are using. What kinds of new laws of physics could we learn? What kinds of new materials and technologies could we build?

I won't comment on the different phenomena people are reporting and discussing, as it's not something I personally spend much time looking into. While I’ve heard people claim that everything from drones to fiber optic cables was developed in military labs inspired by “recovered alien technology”, I’m pretty unconvinced, as all of these seem to be very small logical jumps from previously existing technologies and known physics. If they really were the result of UFO technology, they probably would have been invented a few years later anyway by someone without access to such hardware.

Rather, I'd like to point out the wide variety of more mundane examples of "alien technology" in plain sight, which we largely overlook to our detriment. If there truly is UFO technology in the hands of the government, then getting good at reverse-engineering other technologies designed by non-human intelligences is likely to provide us with a far more powerful toolbox for doing so.

What exactly do I mean by “non-human intelligences”, though? Simply put: any complex system that is “designed”, or adapted to a particular problem or niche, by a process not driven by a human brain.

A classic example of this is biology itself; billions of years of evolution have designed some remarkably complex creatures and ecosystems. The human genome is close to a gigabyte in size, and much larger if you include epigenetic markers, etc. Some plant genomes are dozens of times larger than that.
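As a back-of-the-envelope check on the "close to a gigabyte" figure, here is the arithmetic under two stated approximations: roughly 3.1 billion base pairs in one copy of the human genome, and 2 bits per base (four possible nucleotides), with no compression and ignoring the second copy of each chromosome.

```python
# Rough information content of the human genome.
# Assumptions: ~3.1 billion base pairs (one haploid copy), 2 bits per base
# because there are 4 nucleotides (A, C, G, T), and no compression.
BASE_PAIRS = 3.1e9
BITS_PER_BASE = 2  # log2(4)

total_bits = BASE_PAIRS * BITS_PER_BASE
total_megabytes = total_bits / 8 / 1e6  # 8 bits per byte, 1e6 bytes per MB

print(f"{total_megabytes:.0f} MB")
```

That lands at roughly three quarters of a gigabyte, before counting anything like epigenetic state.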

And it isn’t just the complex information processing going on inside cells, but also the processing done by organs such as the brain.

Another great example of design by nonhuman intelligence is deep learning, where the networks are designed by gradient descent. There are a great number of problems for which humans currently know of no engineerable solution, yet gradient descent can design such solutions with a few hours of GPU compute.
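To make "designed by gradient descent" concrete, here is a deliberately tiny sketch: a two-parameter linear model rather than a deep network, with made-up data generated from a hidden rule. The only point it illustrates is that the update loop, not a human solving equations, arrives at the parameters.

```python
# Toy gradient descent: the loop "discovers" w and b from data.
# Assumption: the data follow a hidden rule y = 3x + 2 (made up for illustration).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 + 3.0 * x for x in xs]

w, b = 0.0, 0.0          # start with no knowledge of the rule
learning_rate = 0.02
n = len(xs)

for _ in range(5000):
    # Gradients of the mean squared error with respect to w and b.
    grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    # Step downhill on the error surface.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(round(w, 3), round(b, 3))
```

Real deep learning does the same thing with millions or billions of parameters and a loss surface nobody could solve by hand, which is where the "no engineerable solution" problems come in.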

Beyond this, there is also the growing field of generative design: using search algorithms to evolve complex solutions to problems. These often produce designs far more geometrically complex and organic-looking than anything a human would have thought to draw, while also being stronger and lighter. Wire routing in CPU design has long been search-based, and there have been decades of work on search-based code optimization (superoptimization) that often generates code much faster than what GCC or LLVM can produce, albeit with much longer compilation times.
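For a feel of what search-based code optimization means, here is a brute-force toy superoptimizer. The four-instruction set is hypothetical, and the equivalence check simply tests a handful of inputs; real superoptimizers use real instruction sets, much smarter search, and formal verification instead. The search tries every program of length 1, then 2, and so on, and returns the first one that computes the target function.

```python
from itertools import product

# Hypothetical instruction set acting on one accumulator that starts at x.
OPS = {
    "dbl":   lambda acc, x: acc * 2,   # like a left shift by one
    "add_x": lambda acc, x: acc + x,   # add the original input back in
    "inc":   lambda acc, x: acc + 1,
    "neg":   lambda acc, x: -acc,
}


def run(program, x):
    """Execute a sequence of op names, starting from acc = x."""
    acc = x
    for op in program:
        acc = OPS[op](acc, x)
    return acc


def superoptimize(target, max_len=5, samples=range(-5, 6)):
    """Return the shortest op sequence matching `target` on every sample input."""
    for length in range(1, max_len + 1):
        for program in product(OPS, repeat=length):
            if all(run(program, x) == target(x) for x in samples):
                return program
    return None


best = superoptimize(lambda x: 9 * x)
print(best)
```

Searching for `9 * x` finds the classic shift-and-add trick (three doublings, then adding `x` back) without anyone telling it that trick exists.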

While these examples are not strictly "alien technology" in the most literal sense, they are in many ways the closest thing the general public has to it.

Human Design and Intellectual Infrastructure

Human design is very often bottlenecked by what we understand. If we lack an understanding of some physical or digital phenomenon that could dramatically optimize our solutions, humans will simply not use it no matter how much of an advantage it provides.

Human knowledge is a form of infrastructure, like roads through ideaspace. People don’t build their houses miles away from any other human influence. They usually build right along a road, and that road usually connects to other roads until it reaches a highway, railway station, port, or airport. If there is a large area without roads or other infrastructure, you will find few human settlements there, no matter how prosperous such settlements could be.

Infrastructure in ideaspace works much the same.

Certainly this approach has its advantages, allowing us to remember and build upon previous insights that would otherwise be very difficult to reproduce independently. It also means, though, that we rarely stray far from existing ideas; venturing far off the beaten path is largely unheard of.

Yet evolution, gradient descent, and search algorithms largely lack these biases. Evolution does have a notion of “evolvability”: mechanisms for shuffling, sharing, and processing genetic material, such as sexual reproduction, horizontal gene transfer, and the spliceosome, suggest that biology has an infrastructure toolbox of its own, albeit perhaps a different flavor from human techniques.

Of course, if you navigate a continent by following its interstate system, the sights you see will likely be very different from those you would see following its river systems, or just wandering aimlessly.

Pick a different algorithm for navigating the space, and you may find a very different set of solutions.
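A toy illustration of that point: the same made-up one-dimensional "design space" explored by two different algorithms. A greedy hill climber (loosely, following the nearest road uphill) stops at the first local peak it reaches, while exhaustive search (loosely, wandering everywhere) finds a different and better design. The landscape values are invented purely for illustration.

```python
# A made-up design space: index = design, value = how good that design is.
landscape = [1, 3, 5, 4, 2, 6, 9, 7, 3]


def hill_climb(values, start):
    """Greedy local search: move to the better neighbor until none is better."""
    i = start
    while True:
        neighbors = [j for j in (i - 1, i + 1) if 0 <= j < len(values)]
        best = max(neighbors, key=lambda j: values[j])
        if values[best] <= values[i]:
            return i  # no neighbor improves on the current design
        i = best


def exhaustive(values):
    """Evaluate every design and return the index of the best one."""
    return max(range(len(values)), key=lambda j: values[j])


print(hill_climb(landscape, start=0))  # stops on the local peak at index 2
print(exhaustive(landscape))           # finds the global peak at index 6
```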

The designs produced by these nonhuman intelligences often bear little resemblance to human designs, and clearly rely on techniques that are truly unknown to humans. These techniques are also often demonstrably better than the human designs, so perhaps we should actually pay them some attention.

We know that the space of possible technologies and techniques is far larger than what is known to humans, and these examples of nonhuman design regularly pluck ideas from this vast, dark unknown, providing us with hard, undeniable evidence of our ignorance. This space of systems that we can infer must exist, but that lie beyond human knowledge, is what I call Algorithmic Dark Matter.

Reverse Engineering

While there is some great Reverse Engineering (RE) work being done on such “alien technologies”, it is largely not focused on creating new design techniques.

Biology RE seems mostly focused on better understanding how drugs we dump into the body will interact with it, finding new drugs, and verifying that they do what we want.

Neuroscience, I think, is much more interesting, though still far behind where it could be. Recent advances in computational neuroscience provide strong hints about the types of data structures and algorithms the brain uses to compute.

It’s been known for decades that brain activity is mostly digital and very sparse: a neuron's spikes show no significant variability in signal strength (firing rate is a slightly different story), so each neuron can be modelled as either firing (1) or not firing (0). As most neurons rarely fire faster than about 250 Hz, and part of the purpose of brain waves seems to be synchronization, firing patterns can, to some extent, be effectively discretized into time steps as well.
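Here is a minimal sketch of that discretization, assuming 4 ms time steps (one step per cycle at 250 Hz). The spike times are made up, and the scheme deliberately throws away precise timing within a step and any second spike in the same step, which is exactly the simplification described above.

```python
# Convert spike times (ms) into a binary raster: one row per neuron,
# one column per time step. Assumption: 4 ms steps, i.e. 1 / 250 Hz.
BIN_MS = 4

# Hypothetical recordings: spike_times_ms[i] lists neuron i's spike times.
spike_times_ms = [
    [1.0, 9.5, 17.2],
    [3.9],
    [],
]


def binarize(spikes, duration_ms, bin_ms=BIN_MS):
    """Return a list of 0/1 rows: 1 if the neuron fired during that step."""
    n_bins = int(duration_ms // bin_ms)
    raster = [[0] * n_bins for _ in spikes]
    for neuron, times in enumerate(spikes):
        for t in times:
            step = int(t // bin_ms)
            if step < n_bins:
                raster[neuron][step] = 1  # fired (1) in this step, else 0
    return raster


for row in binarize(spike_times_ms, duration_ms=20):
    print(row)
```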

Then throw in sparsity: if you model brain activity as a time sequence of large bit vectors (one bit per neuron), you will find that typically only ~1% or less of neurons are firing at any point in time (the notable exception is an epileptic seizure, which is not considered healthy brain function). This heavily constrains the types of computation that can be going on in the brain, giving us a great deal of information and room to theorize about what the brain is likely doing.

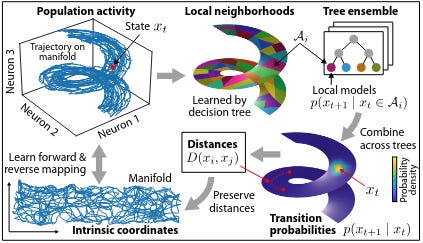
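One way to work with such sparse activity is to store only the indices of the neurons that fired in each time step. The sketch below assumes a hypothetical population of 1,000 neurons with 10 active per step (1% density), and uses the count of shared active neurons as a similarity measure, a common choice in work on sparse distributed representations rather than a claim about what the brain itself computes.

```python
import math

N_NEURONS = 1000

# Hypothetical time steps: indices of the ~1% of neurons that fired.
active_t0 = {3, 57, 112, 260, 401, 518, 640, 777, 851, 990}
active_t1 = {3, 57, 112, 260, 402, 519, 641, 778, 852, 991}


def density(active, n=N_NEURONS):
    """Fraction of neurons firing in this step."""
    return len(active) / n


def overlap(a, b):
    """Number of neurons active in both steps."""
    return len(a & b)


print(density(active_t0))             # 0.01
print(overlap(active_t0, active_t1))  # 4
# Even at 1% density, the number of distinct 10-of-1000 patterns is enormous.
print(f"{float(math.comb(N_NEURONS, 10)):.2e}")
```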

Another powerful source of recent insights has come from various forms of topological analysis, a great visual explanation of which can be found here.

The basic idea is that, while N bits provide a space of 2^N possible states, it is exceedingly rare for all 2^N states to actually be used in most types of computation. Instead, a small subset is usually used, often with this subset of points lying on some sort of topological manifold or geometric shape embedded in this space. There is a close relationship between the geometric and topological properties of these manifolds and the types of computation that can be efficiently performed on them.
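A small hypothetical example of a used subset with a clear shape: encode an angle with 16 bits arranged in a ring, where a "bump" of 3 adjacent bits is active and wraps around the end. This is loosely in the spirit of ring-attractor models, not a description of any specific circuit. Only 16 of the 65,536 possible states ever appear, and because neighboring positions share active bits, including across the wraparound, those states form a closed loop: a circle embedded in the much larger bit space.

```python
# Hypothetical ring code: WIDTH consecutive active bits out of N_BITS,
# wrapping around the end so position N_BITS - 1 neighbors position 0.
N_BITS = 16
WIDTH = 3


def encode(position):
    """Bit tuple with WIDTH consecutive active bits starting at `position`."""
    active = {(position + k) % N_BITS for k in range(WIDTH)}
    return tuple(1 if i in active else 0 for i in range(N_BITS))


def shared_bits(a, b):
    """Number of bits active in both states."""
    return sum(x & y for x, y in zip(a, b))


states = {encode(p) for p in range(N_BITS)}
print(len(states), "of", 2 ** N_BITS, "possible states are used")

# Adjacent positions overlap, including across the wraparound, while
# opposite positions share nothing: the used states form a circle.
print(shared_bits(encode(0), encode(1)))
print(shared_bits(encode(N_BITS - 1), encode(0)))
print(shared_bits(encode(0), encode(8)))
```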

While I will be the first person to explain the ways in which deep learning definitely does not work like the brain, there is at least some overlap, in the sense that these same topological analysis techniques are a powerful tool for studying DNNs and how they so easily solve the kinds of problems that humans were never able to figure out without them.

Topology seems to simply be a powerful tool for understanding computation in general (it just hasn’t made its way into standard programming tools yet), and computing systems designed by nonhuman intelligences just seem to do things that are much more mathematically interesting.

A Promising Future, If You Choose to Pursue It

We may be developing an interesting theoretical foundation for understanding these mundane “alien technologies”, but what are we using it for?

Do we take the techniques we find DNNs and the brain using and adapt them into standard building blocks for everyday software engineering? Does anyone seriously entertain the idea of using deep learning to inspire new human-designed programming paradigms? No. Not at all. This option isn’t considered for a nanosecond!

Instead, the discussion is purely about automating software development, assuming that the reliability problems of trained rather than designed systems will magically disappear someday. I at least have to give some credit to the people using insights from DNN RE to improve the robustness of such networks, but that’s nowhere near what should be going on.

There’s talk of accelerating automated theorem proving in mathematics with neural networks; allowing computers to find fantastic new heuristics for navigating the space of possible proofs. Cool idea, but wouldn’t it be fascinating if we then tried to understand why these heuristics worked so well, and what metamathematical structure they were mapping? I could see that approach providing far deeper and less obvious insights into foundational mathematics than Gödel could ever have dreamed of. Perhaps such conversations are being had, but I haven’t heard any hint of them.

The attitude that DNNs are simply black boxes that are impossible to understand is baseless, as existing research already proves beyond a doubt. The intellectual laziness that comes with such a black-box-centric mindset is depressing and unambitious.

And we’re just at the tip of the iceberg here; many of the known techniques are extremely new, and many of the biggest discoveries are only a couple of years old. What techniques will be discovered in the next couple of years? What techniques will be developed if we switch our strategy from one of purely understanding these systems to one of engineering with them?

What kinds of incredible new computing paradigms could be available if we could make full use of these techniques? I bet things a trillion times more interesting than anything OOP and Functional Programming advocates could ever dream of.

What kinds of new developer tools could we create to assist in writing and debugging such code? I bet we could build things a trillion times more interesting and useful than printf debugging, or debuggers that haven’t fundamentally changed since the 80s.

What kinds of material technologies could such insights provide? Deep learning is often used to design material systems. The brain designs things all the time. Understanding how such designs are created could inspire new physical systems, or at least illuminate dark parts of the design space.

And if a UFO crash-lands in your backyard, what makes you think we have the tools to understand something likely millions of years more technologically advanced than humans? If we can’t even be bothered to reverse engineer the brain, some neural networks, or the protein circuits inside cells in a way that allows us to actually map the vast Algorithmic Dark Matter these things contain, and then use those insights to improve human designs, what makes you think you have any chance at making something more interesting than a fancy lawn ornament out of your crashed UFO?