The Bzo Programming Language

I’ve been working on a programming language, called Bzo, for a while. The precise goals have shifted throughout the years, and I’ve been very busy with day jobs and other projects that have kept me from giving this project the attention it deserves. I’ve come to the conclusion that this is something that really deserves full-time attention and resources, so much of the future content on this Substack will soon go into funding this project.

I’d like to discuss some of the ideas behind this language, and I hope you’ll consider a paid subscription to support it.

I’d also like to stress that this is something that I’ve been thinking very deeply about for years, and there are deep influences from math and neuroscience (mostly outside the scope of this introductory article). In all honesty, this article does those ideas very little justice at all, and is purely a surface-level overview of my ideas. If you want to learn more about those deeper, broader ideas, please subscribe.

Pushing the Possibility Frontier

Ideally, every piece of software we build would be well-optimized, thoroughly tested and formally verified, and designed and documented well enough that any programmer could hop right in and get to work.

While these are all nice things in theory, in practice it is very rare that any of this is done. Most software is orders of magnitude slower than it should be, full of bugs, and poorly documented.

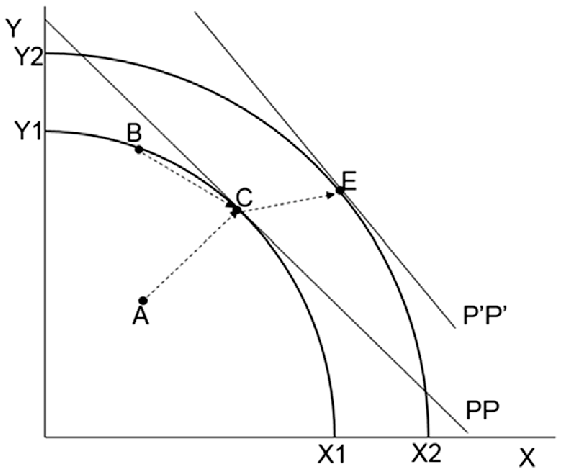

Generally, engineering is viewed as working within a set of constraints and tradeoffs to achieve the best solution possible to a given problem. The resources involved and the tools available for building solutions are themselves constraints, though. Changing them allows us to shift the possibility frontier, possibly allowing us to achieve much more ambitious goals.

Tradeoffs, unless directly connected to fundamental natural laws, are often more a product of our tools and strategies than they are a logical inevitability. How close are we really to the fundamental limits of ambition in software development?

The goal of Bzo is to push forward against the possibility frontier and expand the capabilities of programmers. If expanded far enough, we can begin focusing significantly more on these ideals.

The standard, unspoken rules and norms about programming language design and the process of producing software are likely almost exhausted, and so significant progress is likely to only come from breaking and challenging them. It’s unlikely that any future seismic shifts in the possibilities in programming will come from changes to syntax or type systems. That’s a dead end.

I’m interested in breaking out of this box entirely.

Funding

Like it or not, financial sustainability is an important factor in every project. If anything, it’s the most fundamental constraint. Not everyone is able to self-fund serious projects, and once people start depending on your work you may be expected to continue maintaining it regardless of whether you still have the resources or interest to self-fund it.

Financial sustainability is as important a feature as any technical aspect of your project.

Open source funding is currently unsustainable. Any large movement that intends to do significant work but never seriously addresses financial sustainability is doomed, if not to complete failure, then to exploitation and corruption of the original vision. Funding OSS via tech support incentivizes software that is either low-quality or hard to use. Taking donations for software that people are used to getting for free is a joke. A lack of a viable funding model leads to labor exploitation.

As someone with an unannounced “crypto-adjacent” (i.e., not blockchain) project, I won’t say that nothing in applied cryptography will ever be useful here, but I seriously doubt the current trend of making a ton of useless digital tokens is a good long-term solution. How is this anything other than just donation with extra steps?

Investment is an option, but there can easily be conflicts of interest. These often result in buzzword-heavy but ultimately boring projects that just get acquired and killed by FAANG.

With all that said, what is my plan for funding Bzo?

Step 1: Devlogs on a paid Substack. This will continue until the compiler is sufficiently useful for a major release. I will do what I can to make the devlogs interesting, though with the serious R&D I intend to put into this project, this shouldn’t be too hard. Development will be done privately, though source code will eventually be included with releases.

Step 2: Switch to a different funding model for releases. Payments will probably be done on a per-release basis as it makes more sense to make funding proportional to the amount of development work that has occurred rather than the absolute number of users. That said, putting the compiler behind a strict paywall limits the number of users, and any barrier to entry will severely hamper the network effects that are important for making the language valuable. Current ideas:

Pay-What-You-Want, with no minimum. If you want to pay, you can. If not, just proceed to the free download.

Reverse bounty: a fixed goal is set for each release. Once the money is raised, the new update is made free for everyone.

Pay-It-Forward: anyone who pays for an update has the option to purchase free copies for other users. This may even be made compulsory - paying $30 for a new update to your compiler may come with two free gift codes you can give away. This might even help build community.

I like these approaches because they all allow for situations where only a small minority of users may have to pay, while (with the exception of PWYW) also providing me with some leverage to avoid everyone just taking a free option.

Efficiency and Optimization

Ideally, every piece of software we build would be well-optimized, ...

While Moore's Law has definitely led to some incredible improvements in the capabilities of computers, the story hasn't been quite as simple as it's often made out to be. One major issue is that while the sheer volume of operations that a computer can perform has grown enormously, the communication latency between different parts of computers has always been bottlenecked by lightspeed, and thus has improved very little over the decades.

Hardware engineers have gone to great lengths to hide this. Hiding memory latency requires caches. However, in order to maintain backward compatibility with CPUs going back to the 70s and 80s, these caches must be, for most intents and purposes, invisible. The smoke-and-mirrors to make these caches invisible to the programming model while remaining fast is insanely inefficient. This, among other issues, means that modern CPUs are orders of magnitude less efficient than what should be possible.

As Moore’s Law slows down, there will be enormous pressure to strip out the cache coherency networks, reorder buffers, neural-network branch predictors and prefetchers, and all the other insanity that has crept into CPUs. This will soon be the only way to improve performance, but will come at the cost of changing programming models. They will be replaced with giant arrays of microcores and NUMA memory models. How will we program these?

Modern programming tools and languages are not even remotely prepared for the coming wave of exotic hardware. So what could we do about this?

Compiler as a Toolbox

Compilers are sometimes described as tools for automating and accelerating machine code generation. While this is true in a rather narrow sense, it is worth keeping in mind that language constructs always make some parts of the space of possible machine code programs impractical, if not impossible to reach.

Compilers will sometimes dissolve these constructs for the purpose of optimization, but are rarely good at domain-specific optimizations. In cases where such optimizations are necessary, they are often only achievable by making the code dramatically less readable in order to contort the compiler into producing something even vaguely similar to the machine code the programmer intended.

The purpose of high-level code should not be to ignore the lower-level details entirely, but to focus valuable human attention where it’s needed. Sometimes that’s in the larger-scale functionality, but sometimes it’s in the low-level details. Hiding the low-level parts just forces the programmer to deal with them via unnecessarily complex, error-prone, and indirect methods.

Why not simply treat the compiler as just a library, or as a suite of composable tools for building executables? A build-your-own-compiler approach with a standardized human-readable interface (language syntax). If the programmer wants to build custom optimization or code generation passes, why not let them? In some cases it may be necessary, especially if some weird hardware is involved.
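To make the idea a little more concrete, here is a minimal sketch of what "compiler as a library of passes" could look like. It is written in Python purely for illustration, since no Bzo syntax or API is shown in this article, and every name in it (`Const`, `Var`, `BinOp`, `fold_constants`, `mul_by_two_to_add`, `run_pipeline`, `emit`) is hypothetical. It only shows a stock pass and a user-written pass being composed by the programmer rather than by the compiler.

```python
from dataclasses import dataclass
from typing import Callable, Union


# A toy expression IR (hypothetical, not Bzo's): constants, variables, binary ops.
@dataclass(frozen=True)
class Const:
    value: int


@dataclass(frozen=True)
class Var:
    name: str


@dataclass(frozen=True)
class BinOp:
    op: str  # "+" or "*"
    left: "Expr"
    right: "Expr"


Expr = Union[Const, Var, BinOp]
Pass = Callable[[Expr], Expr]


def fold_constants(e: Expr) -> Expr:
    """Stock pass: evaluate operations whose operands are both constants."""
    if isinstance(e, BinOp):
        l, r = fold_constants(e.left), fold_constants(e.right)
        if isinstance(l, Const) and isinstance(r, Const):
            if e.op == "+":
                return Const(l.value + r.value)
            if e.op == "*":
                return Const(l.value * r.value)
        return BinOp(e.op, l, r)
    return e


def mul_by_two_to_add(e: Expr) -> Expr:
    """User-written pass: rewrite `x * 2` as `x + x`."""
    if isinstance(e, BinOp):
        l, r = mul_by_two_to_add(e.left), mul_by_two_to_add(e.right)
        if e.op == "*" and r == Const(2):
            return BinOp("+", l, l)
        return BinOp(e.op, l, r)
    return e


def run_pipeline(e: Expr, passes: list[Pass]) -> Expr:
    """Apply the passes in the order the programmer chose."""
    for p in passes:
        e = p(e)
    return e


def emit(e: Expr) -> str:
    """Stand-in for code generation: print the expression back out."""
    if isinstance(e, Const):
        return str(e.value)
    if isinstance(e, Var):
        return e.name
    return f"({emit(e.left)} {e.op} {emit(e.right)})"


program = BinOp("*", Var("x"), BinOp("+", Const(1), Const(1)))  # x * (1 + 1)
result = emit(run_pipeline(program, [fold_constants, mul_by_two_to_add]))
```

With this ordering `result` is `(x + x)`. Swapping the two passes yields `(x * 2)` instead, because the custom rewrite runs before the constant has been folded. That ordering decision is exactly the kind of thing a black-box compiler makes for you.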

This approach may have the disadvantage of making code a bit harder to read by making assumptions about translation between code and machine code less consistent across different codebases. However, this is already a problem we have to some extent with different compilers, and I have some ideas I will mention later on about how Bzo can improve code readability in general.

Correctness

Ideally, every piece of software we build would be … thoroughly tested and formally verified, ...

Computer science is a strange field. In most fields, theory is far beyond practice; we can predict all kinds of stuff that will happen if we travel at 99% the speed of light, but good luck doing it in reality.

In computer science, we have proven that finding infinite loops can be mathematically impossible, then we build programs that actually do a good job at it anyway. We have problems that should take a billion times the lifetime of the universe to solve for a hundred variables, then we have solvers that somehow can handle millions of variables. We don’t know how to explain general-purpose intelligence, yet the computer between your ears handles it just fine.

Fortunately, many problems that concern us deeply with maintaining software (detecting bugs, etc.) fall into the category of “theoretically hard/impossible, but in practice not so bad.” Unfortunately, the “theoretically hard” part seems to scare a lot of people away.

The thing is though that many of these techniques are already being applied to your code! There’s a lot of overlap between the analysis done by compilers to determine which optimizations can be safely applied and the analysis done by static analysis tools that can be used to detect bugs.

Unfortunately, the compiler is a black box. You pass code in, you get error messages (or if you’re lucky, an executable that probably doesn’t work quite right) out, and any magic that goes on in the middle is largely unspecified and inaccessible, regardless of how incredibly valuable it may be to you.

Frankly, the compiler should be as transparent as possible, and there should be language constructs for talking to and even triggering specific analysis passes. Why can’t I ask my compiler to guarantee that a variable is within a specific range? LLVM and GCC already do some pretty extensive range analysis, so they should know this already. Why can’t I just ask my compiler to give me an error if it isn’t what I expect it to be?
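As a rough illustration of the kind of query I mean, here is a toy interval analysis in Python. It is a sketch under heavy assumptions: it is not how LLVM or GCC implement range analysis, it is not Bzo syntax, and the names `Interval`, `RangeCheckError`, and `expect_range` are made up for this example. It tracks the inclusive range a value could fall in through addition and multiplication, then reports an error when the programmer's stated expectation cannot be proven.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interval:
    """Inclusive integer range [lo, hi] that a value is known to lie in."""
    lo: int
    hi: int

    def __add__(self, other: "Interval") -> "Interval":
        return Interval(self.lo + other.lo, self.hi + other.hi)

    def __mul__(self, other: "Interval") -> "Interval":
        # The extremes of a product always occur at the corners of the two ranges.
        corners = [a * b for a in (self.lo, self.hi) for b in (other.lo, other.hi)]
        return Interval(min(corners), max(corners))


class RangeCheckError(Exception):
    """Raised when the analysis cannot prove the expected range."""


def expect_range(name: str, actual: Interval, lo: int, hi: int) -> Interval:
    """Hypothetical 'ask the compiler' hook: fail unless actual fits in [lo, hi]."""
    if actual.lo < lo or actual.hi > hi:
        raise RangeCheckError(
            f"{name} may be in [{actual.lo}, {actual.hi}], expected [{lo}, {hi}]"
        )
    return actual


byte = Interval(0, 255)    # e.g. a value read from a uint8
offset = Interval(0, 3)    # e.g. a 2-bit field
index = byte * Interval(4, 4) + offset

expect_range("index", index, 0, 1023)  # provable, so this passes silently
```

Here the analysis derives `[0, 1023]` for `index`, so the check against a 1024-entry table passes, while `expect_range("index", index, 0, 511)` would raise `RangeCheckError`. In a real compiler the same answer would come at build time rather than at run time.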

Programmer Experience

Ideally, every piece of software we build would be … designed and documented well enough that any programmer could hop right in and get to work.

A lot of my family is very mechanically-minded. Car mechanics, engineers, and so on. I’m the odd one out with computers. I’ve tried pushing my little brother toward computers as well, though it didn’t work and he now stains all the furniture with motor oil.

Not for a lack of trying though—he’s done a bit of programming. Yet he prefers working with physical machines because “if something goes wrong, how do you fix it?”

With a physical machine, a problem will often make noises, move in strange ways, or create other easily recognizable problems. There’s still a process of debugging and troubleshooting, but you can rely on a much wider range of senses to very quickly track it down. A machine is a geometric object, and a weird noise can be triangulated to a position in a fraction of a second by the brain’s auditory system. Tracking down a bug in software is never anywhere near this easy.

There’s a vast gap here between software and more natural and intuitive systems. I’d like to start closing that gap.

As mentioned above, the Bzo compiler is intended to be a library of composable tools rather than simply a black box for building code. One advantage of this is that pulling representations of code out for use in custom analysis and visualization tools would become trivial, vastly simplifying the process of tool development. Not only that, but many powerful tools could easily be added in simply as libraries.

It would be great to have code visualization. Why can’t I get a heatmap of features of my code? Where’s the loop-heavy code? Where are all the references to this specific function? Where’s the hottest and coldest code based on the last profile run?
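For a sense of how little is needed once a code representation is accessible, here is a small sketch that uses Python's standard `ast` module as a stand-in for the representation a Bzo tool might pull out of the compiler. The sample `SOURCE` and the helpers `loop_counts` and `text_heatmap` are hypothetical names invented for this example, and the "heatmap" is just text. A real tool would render something far richer.

```python
import ast

# Sample code to analyze (made up for this example).
SOURCE = '''
def flat(x):
    return x + 1

def nested(rows):
    total = 0
    for row in rows:
        for cell in row:
            while cell > 0:
                total += 1
                cell -= 1
    return total
'''

LOOP_NODES = (ast.For, ast.While, ast.AsyncFor)


def loop_counts(source: str) -> dict[str, int]:
    """Count loop statements inside each function definition.

    Loops in nested functions are also counted toward the enclosing function,
    and comprehensions are not counted at all.
    """
    counts = {}
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            counts[node.name] = sum(
                isinstance(inner, LOOP_NODES) for inner in ast.walk(node)
            )
    return counts


def text_heatmap(counts: dict[str, int]) -> str:
    """Render one bar per function, with the most loop-heavy function first."""
    ordered = sorted(counts.items(), key=lambda item: -item[1])
    return "\n".join(f"{name:<10} {'#' * n}" for name, n in ordered)


counts = loop_counts(SOURCE)
print(text_heatmap(counts))
```

The same walk could just as easily count references to a specific function or join against profile data. The point is that the hard part is getting at the representation, not writing the query.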

Quickly understanding an unfamiliar codebase (or even just some piece of code you haven’t touched in a while) is a hard, underaddressed problem. Scrolling through a text editor and hopping from file to file to understand a program is the equivalent of looking at every rock on a mountain through a magnifying glass to try to piece together what the mountain looks like from a mile away.

The data science and machine learning communities have all kinds of fancy dimensionality reduction tools like t-SNE and UMAP which are great for visualizing complex, high-dimensional structure. Do I really have to look at all the code up-close instead of zooming out for the bigger picture, or can I just use stuff like this?

And why not get rid of these awful debuggers and printf debugging and replace them with something from the 21st century? GDB came out in 1986; we should have stuff by now that makes Minority Report look outdated. Would it really be so hard to write data structures to some shared memory buffer and have an external program do real-time visualizations of diagnostic data from inside a program?
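The plumbing for that shared-memory idea is small. Below is a minimal sketch using Python's standard `multiprocessing.shared_memory` module. The record layout and the helper names `publish` and `read_snapshot` are assumptions made up for this example, and for brevity the "external program" is simulated by attaching to the segment by name from the same process.

```python
import struct
from multiprocessing import shared_memory

# Hypothetical diagnostic record: (sequence number: uint64, value: float64).
RECORD = struct.Struct("<Qd")


def publish(shm: shared_memory.SharedMemory, seq: int, value: float) -> None:
    """Program side: overwrite the diagnostic record in place."""
    RECORD.pack_into(shm.buf, 0, seq, value)


def read_snapshot(name: str) -> tuple:
    """Viewer side: attach to the segment by name and decode the record."""
    view = shared_memory.SharedMemory(name=name)
    try:
        return RECORD.unpack_from(view.buf, 0)
    finally:
        view.close()


shm = shared_memory.SharedMemory(create=True, size=RECORD.size)
try:
    publish(shm, 1, 0.25)
    first = read_snapshot(shm.name)   # what a visualizer would see now
    publish(shm, 2, 0.5)
    second = read_snapshot(shm.name)  # and after the next update
finally:
    shm.close()
    shm.unlink()
```

A separate visualizer process would only need the segment name to call `read_snapshot` in a loop. Note that this sketch has no synchronization, so a reader polling concurrently could see a half-written record. A real tool would need something like a sequence lock or double buffering.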

And why not go all the way into sensory substitution/augmentation territory? Why can’t I listen to a sonification of my code and use my auditory cortex as a programming coprocessor?

Why stop at just automated tools? Why is all of our human-written documentation just glorified text files? Can we really not dream up any kind of more visual code mapping?

To say there’s a ton of low-hanging fruit here is a gigantic understatement. We need better tools for actually understanding code, not just writing it.

Like I said in the beginning, this is far from a comprehensive explanation of my thoughts. If you’d like more in-depth ideas, as well as to keep track of development, please subscribe. This is a project I’ve had in the back of my mind for years, but have had far too little ability to focus on. I’d like to change that and actually focus seriously on this project. Thank you.

I like your idea for an OS/language. I like your thoughts for the payment models. The problem of payment is something that the open source movement has not solved yet.

EDIT: I remember reading about a theoretical OS called PILOT, it used single character commands and was written up in course notes for a computer science class.

Found you through a comment on YouTube threatening to substack about an iAPX 432 architecture :-)

I used to code Pascal at uni then assembler on micros (Microchip PICs) and then moved to C and stagnated. I have plans to start coding on Arduino or R-Pi and Reprap may be the channel.

In your project, once you find a problem that it solves you should be able to get an adequate user base. Linux is fringe except it is not; it gets used by almost every internet server farm and millions of embedded devices. Find the use case that needs your OS and it will happen.

Few people are rethinking the fundamentals of programming and computation. Here's one:

http://ngnghm.github.io/blog/2015/08/02/chapter-1-the-way-houyhnhnms-compute/

The obscure language I use more than anything besides browser and editor is the physically-typed JVM language Frink, free as in beer and entirely the work of Alan Eliasen. Not only useful as a physics and obscure-unit desk calculator, the default arbitrary-precision rational number format, fast math functions such as factoring, optional functional programming, nice data structures, and most of all just the coherence of being a tool that one man with good taste built for his own needs make it a pleasure to use. All the documentation is on one page:

https://frinklang.org/

My table of some of the types of physical units from Frink, which teaches dimensional relations:

https://substack.com/@enonh/p-148207296