The Limitations of Textual Programming

Good books often don’t translate well into good films, and vice-versa. When a good book is made into a good film, it’s often the result of radical adaptations. Each artistic media is itself a language, and the concepts that are most natural to communicate in text don’t line up well with those that are most natural to convey with moving images.

Being great at literary storytelling is to a large extent about mastering the use of words to convey complex meaning, in the same way that being great at filmmaking involves mastering the use of cuts, staging, framing, motion, composition, leading lines, color, and all the other parts of the visual language that our visual cortex implicitly understands in order to convey meaning, often without the viewer even being consciously aware of this language. Exactly what can be portrayed well varies; a book might be great for conveying a character’s complex inner thoughts, whereas a film would be a better medium for stories with a strong spatial component.

One valuable question that the field of computer science should be asking and investigating is if the same principle applies to software engineering. I would argue not only that it does, but that the most promising frontiers of computer science today will only be possible through massive innovations in this direction.

Geometric Constructions

To some extent, this already exists; not everything that is done on a computer has a purely text-based interface.

Take 3D modelling for example. Sure, someone with an astonishing combination of genius and sheer motivation could describe any model entirely with code by composing noise functions, geometric operations, and huge tables of manually-entered floating point constants. Of course, it’s much more reasonable, productive, and sane to just use blender.

There is perhaps a small niche for programming-based modelling for industrial applications, though.

This of course is far from the only example. Videos, audio, images, and most other media files are more often based on primarily visual tools as opposed to textual tools or programming languages, though once again there are some notable exceptions.

There’s a few obvious things that make these types of files different enough from software to necessitate a wholly different approach. The ones that stand out to me are:

Heavy use of continuous variables or other forms of geometric structure

Many fine details that are either subjective or that require fine-tuning that cannot be reduced to an obvious or simple computation, but can be recognized easily by human senses

A relatively low level of complexity or complexity density (think, the number of important human-made decisions per byte)

This isn’t to say that media files can’t be incredibly complex, but rather that the amount of thought that goes into say, a line stroke in an image, or a particular musical note, is not very proportional to the number of bytes required to encode it; there might be a variability, a kind of organic or natural structure to it that might be the product of the fine movements of a human hand, or perhaps voice in the case of audio.

Of course, complexity often still arises, and must be dealt with. A common tool for this problem is the application of constraints; simple guarantees that the software tool can make about how these very continuous variables are tied to each other. A video or audio track for example may consist of many smaller video or audio segments that are layered on top of each other, and often you want to make sure that certain groups of segments start or end at exactly the same time, even if the precise timing down to millisecond is much more subjective.

This recent from Freya Holmér, if you have an hour free to watch it, is a fantastic exploration of geometric curves that are extremely ubiquitous in games and engineering, and the significant role that the constraints between parameters plays in determining which desireable features a curve can and cannot have.

Geometric Computing

I’ve talked a lot before about the connection I see between geometry and computation, and the implications this will have.

NP problems are largely just general-purpose constraint problems over a finite possibility space. They also can be viewed as operations on lattices, which allows us to draw interesting connections to both program correctness and topology.

Granted, just a mere geometric connection to program correctness is perhaps a neat curiosity, but doesn’t seem particularly interesting. Constraints, yes. Some kind of blender-type tool for program correctness? The messiness involved sounds like the exact opposite of what we need!

Neural Computing

I do however see several highly geometric computing problems on the horizon that may benefit significantly here.

The most promising one is neural computing. I’ve written before about some of the fascinating computing techniques that are possible when we take inspiration from the brain. While this offers us many fantastic new tools with fascinating properties, it’s rather difficult to engineer anything with them.

The precise dynamics of something like an HTM model is not encoded primarily in the code, but rather in the relative positions and distances of the neurons in their thousand-dimensional synaptic space. In this sense, it may be capable of some fairly interesting computation but it finds itself in a similar class to deep learning or cellular automata.

We certainly could take the deep learning approach to engineering such systems, of curating training sets and teaching such models to produce the behavior we want. This is unfortunately a pretty crude and indirect tool. Being able to guarantee that a certain type of object is correctly recognized in a certain situation - for example, making sure your autonomous car can recognize pedestrians regardless of the weather - is a well-sought-after goal, and one that is very difficult to achieve! We have few if any tools for manipulating DL models precisely.

Both HTM and deep learning however have a strong geometric component to them however, and ever since the Erlangen Program in the 1870s, there has been a recognition that geometry can be viewed fundamentally as a study of symmetries and contraints. As of last year, there is a fascinating new field of Geometric Deep Learning, which heralds itself as the Erlangen Program of machine learning.

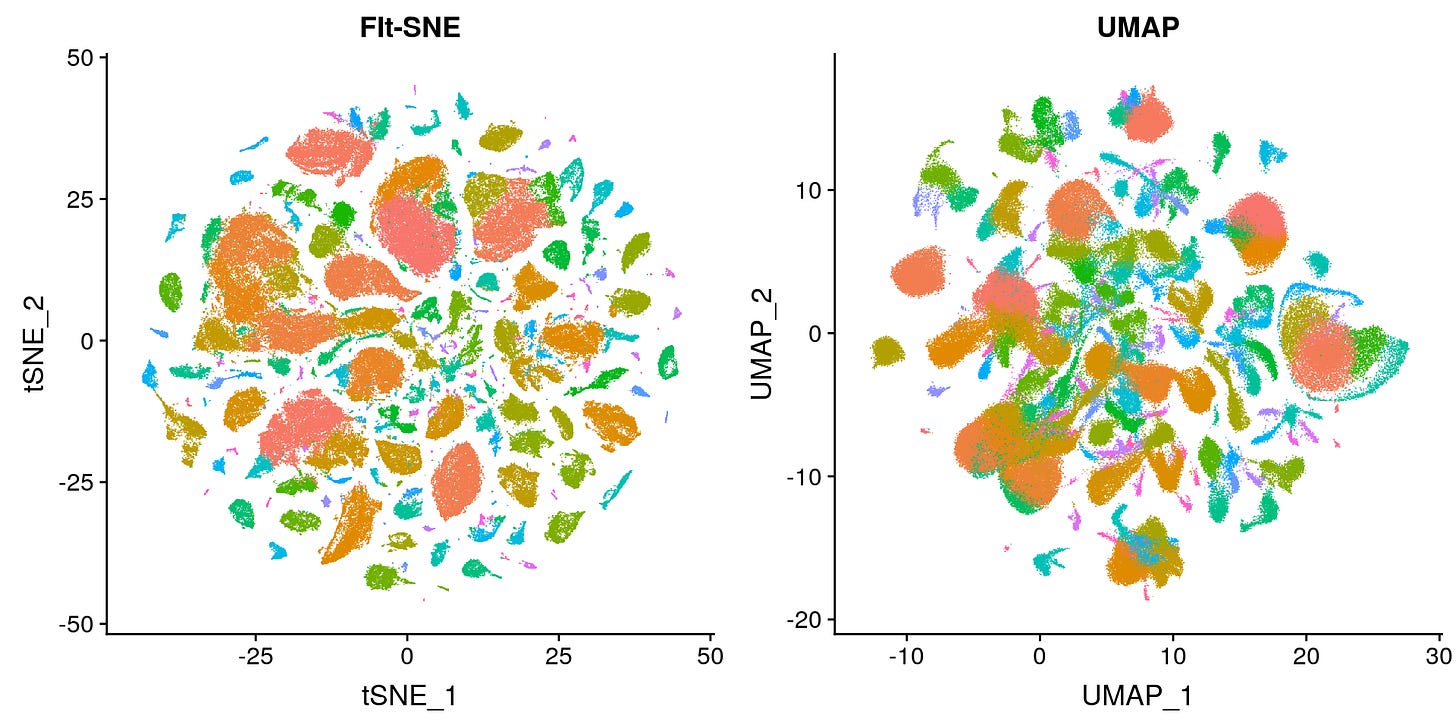

The number of dimensions involved may be pretty large, though often these high-dimensional spaces just embed much more low-dimensional structures that are in reality not far off from human understanding. This is after all why Dimensionality Reduction algorithms have been proving so useful in recent years.

Machine learning is extremely common and profitable now, while simultaneously being an obviously crude and insufficient tool for many of the real engineering problems we wish to use it for. These hints - that dimensionality is not as serious of a problem as it appears, and that there is an enormous amount of geometric structure to these problems that can be studied with rich, existing mathematics - suggest to me that some visual tools might be on the horizon.

In the case of neural computing with systems like HTM, it’s a bit harder to say exactly how much mathematical elegance there is to exploit, but there’s also a great deal of interesting and useful behavior that emerges from a relatively simple combination of features. This is often a strong indicator of rich mathematics!

But need we really focus exclusively on large models? One of the nice properties of HTM is that it can all be implemented with bitwise operations, the most complex of which is a popcount instruction. All of this is orders of magnitude simpler and more efficient than the floating point operations required for DL. Relatively small models could be used without much computational cost at all, and even if a full HTM model is overkill for a problem, the basic properties of sparse bit vectors and similar techniques are fascinating tools that are currently impractical to use.

It’s not clear exactly what could be achieved with small models, but there are many complex decisions that can be at the very least accelerated and optimized with exotic computational tools, as I pointed out a few years ago when I originally wrote Programming in Hyperspace pt 2.

But the tools to make such things properly would be exotic synthesis tools designed around producing complex tables of constants to put into simple bit-shuffling algorithms. Such tools don’t exist right now. These kinds of techniques could be tools that make your average program 10% faster, or they could hold tools that completely transform the way we program with exotic techniques we currently struggle to imagine.

I’m hoping that as I develop these kinds of ideas further, I might be able to write a few articles here demoing some of the various prototypes of such tools. At a minimum there’s a few more months of significant work before I can even meaningfully start such a project though.

Layout

The future of computing hardware is tiled architectures. Machine learning accelerators such as those from Cerebras and Tesla are already using them.

The concept is simple; a giant grid of cores. If you have hundreds, thousands, or even hundreds of thousands of cores on a single chip, the simplest and most obvious way to arrange them is in a big grid. If you want to communicate between cores, just send messages as packets hopping along the grid.

A full, in-depth discussion of tiled architectures is a subject for another time, but the simple story is that parallel computing (the whole point of so many cores) is extremely latency-dependent. Latency is a matter of distance, computing power and memory capacity a matter of area. These are innately geometric terms, and code optimization for such hardware is an obviously geometric problem, to an extent vastly more true than any other problem faced in the optimization of any modern codebase.

Add to that all the aforementioned details about program correctness and analysis having geometric connections, and it’s reasonable to conclude that the dynamic behavior of complex control flow likely will prove to have some fascinating interactions with such tiled hardware, and optimizing code that is more complex than mere large matrix multiplies (any general-purpose code) is going to produce some incredibly fascinating mathematics and some incredibly fascinating new problems for programmers to explore when pushing the limits of their hardware.

I expect hardware engineering and software engineering to converge in many ways, and the emergence of software netlists is something I see as inevitable.

A netlist in hardware engineering is similar to the intermediate representations used in software, but more open-ended. Software IR usually stays in the compiler and is only exported on rare occasions. In hardware engineering, exporting netlists is an ordinary part of the process, as merely having your chip specified as a bunch of Verilog code is only part of the problem. Netlists allow for one program to generate a logical netlist (list of gates and the wires that connect them), and another program (perhaps a custom tool) to produce a physical netlist (actual layout of those gates and wires) before passing that onto different analysis tools or off to a manufacturer.

Once you specify the circuitry in your program, and how all the different modules are wired together, the next step is deciding how those modules are laid out on a silicon die. This is itself a fantastically difficult optimization problem, though optimizing a static layout of logic gates is perhaps a bit more well-understood of a problem than deciding how to efficiently map software tasks to hardware threads in the presence of potentially Turing-complete control flow that decides which tasks are needed when, and how long they run for.

Already there’s a great deal of tooling in the hardware space that goes out of its way to visualize for the programmer what’s going on. This is a necessity whenever the programmer is trying to productively have a hand in such geometric problems.

The interesting work being done in program analysis and Abstract Interpretation is a strong signal that “these things are undecideable, let’s give up” is far too pessimistic, and the hints of connections between the tools used in these analysis techniques and topological spaces suggests there are likely to be deep connections between the two.

A great deal of these interesting connections will be elucidated with mathematics that has yet to be developed, there will likely be many cases where some property of how a program operates has a direct influence on how best to organize its threads on a chip. Until those tools are developed, human intuition will likely be among the best tools, and a graphical aid will serve as a necessary intermediary.

I hope you enjoyed this article. I’ve been a little busy this past week, so the Neuralink article has been delayed again; I hope to get it out before Christmas, after which I will be taking a short break from writing until the new year. I hope everyone has a fantastic holiday season.