Why Do Programmers Listen to Music?

And how we can improve programming with the answer

Why is it that programmers, and really so many modern workers in general, listen to music while they work?

On the surface, this might seem like a pretty simple question: music obviously helps us focus and improves productivity. These are nice, surface-level explanations, but if we dig a little deeper, another question arises - why does listening to music help with these things?

Ancient humans certainly weren't running around hunting mammoths with headphones on, despite such hunting clearly being a complex and difficult process. If they had any concern for stealth, they probably weren’t singing much either. However, if we look at modern hunter-gatherers, such as the ones in the YouTube video below, I believe we can get a hint at a deeper and much more interesting answer. The video's host at one point describes their hunting process:

“Every little indent in the sand, every little chirp in the woods, they can know and they can read. There’s this constant information flowing in from all sides.”

I'd like to propose an alternate, deeper explanation for why listening to music makes us more productive - the brain wants highly multisensory experiences.

Think for a moment about how a human brain might evolve in such an environment. If survival requires extreme attention to every little detail in your environment, across every sensory modality, we might expect the brain to evolve to feel uneasy whenever one of those modalities is underutilized.

The Inca civilization from South America had a similar belief - that the deepest forms of knowledge were necessarily multisensory. Their civilization, rather than relying on purely visual writing systems like nearly every other civilization, used an elaborate system of knotted strings (called khipus) to encode records and text. Spanish efforts to force people to abandon khipus in favor of more traditional writing were deeply unpopular, with Andean people asserting that the combination of visual and tactile information made khipus a superior system.

Yet much of the work that modern humans do - especially desk work like writing and programming - is almost purely visual. The auditory parts of the brain are left out entirely, and the tactile components provide very little useful information beyond how you’re hitting the keys.

It would make sense for the brain to demand that we give the auditory cortex something to do, and for an idle sense to produce some level of anxiety. This likely goes for other brain regions as well.

However, is the work that we are giving it actually useful? It seems that by listening to music we’re distracting the auditory cortex rather than giving it something useful to do. We’re giving it highly structured data to trick it into thinking it’s getting useful information, even though that data has no content relevant to the work we are doing. From this perspective, music is the auditory equivalent of junk food.

This is a real shame; after all, programming is full of complex problem-solving, and programmer productivity would benefit greatly from being able to devote more brain power to the task. Sure, we can throw more programmers at the problem, but the bandwidth of communication between humans is very low compared to the billions of signals that bounce around the brain every second.

Sensory Substitution

The brain is an extremely flexible learning system. The portrayal of the cortex as a mosaic of brain regions, each with a specialized function, is a complete fiction. Zoom in a little further and you’ll find the same neuron types and circuits across any two parts of the cortex you look at, with almost no variation.

The main difference[1] between the auditory cortex and the visual cortex is that the auditory cortex gets its inputs from the cochlea, and the visual cortex gets its inputs from the retina. It’s been accepted for decades that the cortex is composed of modular, repeating circuits called cortical columns. Evolution has no need to micromanage and redesign complex circuitry to add new functions; it can just add new general-purpose cortical columns and link them up.

This has led to the idea of Sensory Substitution - you can change the sensory modality of a brain region to a different one simply by changing its inputs. Convert video into audio, and you can learn to see with your ears. As crazy as this sounds, it actually works pretty well. And why stop there? Why not feed in data you have no natural sense for? This is Sensory Augmentation.

And frankly this shouldn’t be surprising - after all, data visualization is just a very mundane form of sensory augmentation.

With enough familiarity, it even changes the qualia perceived by the brain. Do you hear a voice in your head while reading? Most people do. Keep in mind that your brain never evolved to read text, and that voice in your head is just the way that this hundred-million-year-old biological learning algorithm interprets the written word.

So if we can stream linguistic information into the eyes via text, and then repurpose that 5000-year-old technology for modern computer code, can we do the same with audio? What does code sound like?

The Sound of Code

There is no format for data that fully reveals all its secrets and structure.

The textual format of code makes some details explicit, but leaves many others implicit. Ultimately, much of the hard work of reading code involves the interpretation and mental extraction of relevant information.

Documentation rots. Weird programming constructs and abstractions might sometimes help a little, but often do little to mitigate complexity that isn’t already close to surface-level.

If we take the naive approach to code sonification (sonification is the auditory equivalent of visualization), and just feed our code into some kind of text-to-speech program we can listen to, it’s unlikely to bear much fruit. After all, this is really just an inferior way to experience code: we get just the text, and much of the meaning stored in its indentation and alignment is lost.

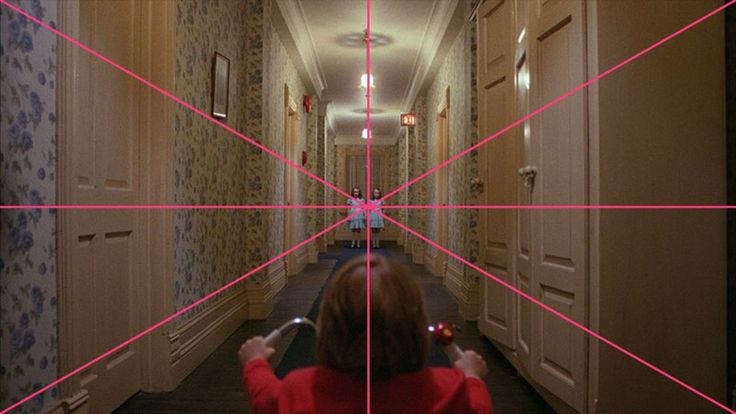

It’s also worth remembering that the human visual system isn’t a static video stream - your eyes are constantly jumping around. A seemingly static image can be surprisingly interactive. This is an extremely valuable insight that visual artists discovered hundreds if not thousands of years ago and use almost religiously, but that fields like computer vision haven’t really caught up to yet.

Further, we should make sure that listening to our code actually adds something. There’s a lot of technology that exists as a solution seeking a problem, rather than providing real value. We should prioritize sonification that actually reduces programmer effort, for example by focusing on structure that the programmer would otherwise need to infer manually.

The field of graphical programming exists in part because of people’s instinct that there is potential value in being able to zoom out of code to see larger-scale structure. This instinct is obviously correct. Whether or not any real progress has been made by repeatedly using the exact same couple of failed approaches a billion times is a completely different subject.

Zooming out is, in one sense, prioritizing aggregated factors over fine-grained variables, such as averages over individual values. This doesn’t necessarily mean reducing everything down to just a single value though, just reducing resolution. After all, reducing the resolution of an image is mostly just a matter of averaging together groups of adjacent pixels to get a smaller number of pixels.
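To make the image analogy concrete, here is a minimal sketch of that kind of resolution reduction in Python. The function name `downsample` and the nested-list image are my own illustrative choices, not anything from a particular library, and edge rows or columns that don't fill a whole block are simply dropped.

```python
def downsample(image, factor):
    """Shrink a 2D grid of numbers by averaging non-overlapping
    factor x factor blocks. Leftover edge rows/columns are dropped."""
    rows, cols = len(image), len(image[0])
    out = []
    for r in range(0, rows - rows % factor, factor):
        out_row = []
        for c in range(0, cols - cols % factor, factor):
            # Gather every pixel in this block and replace it with the mean.
            block = [image[r + dr][c + dc]
                     for dr in range(factor)
                     for dc in range(factor)]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out


image = [
    [0, 2, 10, 10],
    [2, 4, 10, 10],
    [8, 8, 1, 3],
    [8, 8, 5, 7],
]
print(downsample(image, 2))  # [[2.0, 10.0], [8.0, 4.0]]
```

Sixteen values become four, but the rough layout (dim corner, bright corner, and so on) survives. That is the property we would want from a zoomed-out view of code as well.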

So for code sonification, a good place to start would probably be getting a rough, low-resolution understanding of a large amount of code, as a useful alternative to digging through all of it manually.

Audio is really just a changing distribution of frequencies. We can view a sound as essentially a 2D image where one dimension is pitch and the other is time.[2] There’s a little bit more to it than that, but that’s a good place to start. If we can render something to such an image, we can convert it to a sound.
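As a rough sketch of the "image to sound" step, the Python below treats each row of a small grid as a fixed pitch, each column as a time slot, and each value as loudness, then sums sine waves. Everything here is an assumption made for illustration: the names `image_to_samples` and `to_wav_bytes`, the evenly spaced time slots, and the specific frequencies. It also ignores the caveat that real pitch-time grids aren't perfectly regular, and the abrupt changes between columns will click.

```python
import io
import math
import struct
import wave


def image_to_samples(image, freqs, column_seconds=0.1, sample_rate=8000):
    """Render image[row][col] as audio samples in [-1, 1].
    Row -> pitch (freqs[row] in Hz), column -> time slot, value -> loudness."""
    per_col = int(column_seconds * sample_rate)
    samples = []
    for col in range(len(image[0])):
        for i in range(per_col):
            t = (col * per_col + i) / sample_rate
            # Sum one sine wave per row, weighted by that pixel's brightness.
            samples.append(sum(
                image[row][col] * math.sin(2 * math.pi * freqs[row] * t)
                for row in range(len(image))
            ))
    # Normalize so the loudest sample has magnitude 1 (avoid dividing by 0).
    peak = max((abs(s) for s in samples), default=0.0) or 1.0
    return [s / peak for s in samples]


def to_wav_bytes(samples, sample_rate=8000):
    """Pack samples as 16-bit mono PCM and return the WAV file contents."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)
        w.setframerate(sample_rate)
        w.writeframes(b"".join(
            struct.pack("<h", int(s * 32767)) for s in samples))
    return buf.getvalue()


# A rising diagonal: low pitch first, high pitch last.
image = [
    [1, 0, 0],   # 220 Hz
    [0, 1, 0],   # 440 Hz
    [0, 0, 1],   # 880 Hz
]
samples = image_to_samples(image, freqs=[220.0, 440.0, 880.0])
wav_data = to_wav_bytes(samples)
```

Writing `wav_data` to a file gives something any audio player can open. The point is only that once you have the grid, the sound follows mechanically; the interesting design work is in deciding what to draw on the grid.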

One simple approach might be to treat the program as some sort of graph, and to perform a breadth-first search, playing notes based on which nodes are found, with the time dimension encoding the distance from the origin. Programs are already usually represented as Abstract Syntax Trees, and function calls are really just connections from one tree to another. There are many ways to do this, but it might be a pretty good way to get a rough idea of the contents of large pieces of code.
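Here is one hedged way that breadth-first idea could look, using Python's built-in `ast` module on Python source. The pitch mapping is entirely my own assumption: each node type gets the next note of a pentatonic scale in order of first discovery, and `sonify_bfs` and `note_for` are hypothetical names. This sketch stays inside a single syntax tree and does not follow function calls into other trees.

```python
import ast
from collections import deque

PENTATONIC = [0, 2, 4, 7, 9]  # semitone offsets within one octave


def note_for(index, base=48):
    """Map 0, 1, 2, ... onto an ascending pentatonic scale of MIDI notes."""
    octave, degree = divmod(index, len(PENTATONIC))
    return base + 12 * octave + PENTATONIC[degree]


def sonify_bfs(source):
    """Breadth-first walk of the AST of `source`.
    Returns (time_step, node_type, midi_note) events, where time_step is
    the node's distance from the root of the tree."""
    tree = ast.parse(source)
    pitch_of = {}          # node type name -> assigned MIDI note
    events = []
    queue = deque([(tree, 0)])
    while queue:
        node, depth = queue.popleft()
        name = type(node).__name__
        if name not in pitch_of:
            # First time we meet this node type: give it the next free note.
            pitch_of[name] = note_for(len(pitch_of))
        events.append((depth, name, pitch_of[name]))
        for child in ast.iter_child_nodes(node):
            queue.append((child, depth + 1))
    return events


events = sonify_bfs("def f(x):\n    return x + 1\n")
for time_step, node_type, midi_note in events:
    print(time_step, node_type, midi_note)
```

All nodes at the same depth share a time step, so they would sound together as a chord. A function with a wide, shallow body and one with a deep chain of nested expressions would produce noticeably different shapes over time.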

I’d be surprised if Locality-Sensitive Hashing wasn’t another effective approach, as it’s pretty good for taking large amounts of data and compacting them down to vectors, while producing similar vectors given similar data. With an approach like this, if two pieces of code sound similar, they probably have a lot of similar structure, or call a lot of similar functions.
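As an illustration, here is a minimal sketch of one well-known locality-sensitive hash, SimHash, applied to a bag of code tokens. This is a technique I'm swapping in as an example, not a claim about which LSH scheme would work best; the tokenizer regex and the names `tokens`, `simhash`, and `hamming` are hypothetical choices.

```python
import hashlib
import re

BITS = 64  # fingerprint width; matches the 8-byte token hash below


def tokens(code):
    """Very crude tokenizer: identifiers, integers, or single symbols."""
    return re.findall(r"[A-Za-z_]\w*|\d+|\S", code)


def simhash(code):
    """SimHash fingerprint: every token votes +1 or -1 on each bit,
    and the sign of the total decides that bit of the result."""
    votes = [0] * BITS
    for tok in tokens(code):
        digest = hashlib.blake2b(tok.encode(), digest_size=8).digest()
        h = int.from_bytes(digest, "big")
        for i in range(BITS):
            votes[i] += 1 if (h >> i) & 1 else -1
    return sum(1 << i for i in range(BITS) if votes[i] > 0)


def hamming(a, b):
    """Number of bit positions where two fingerprints differ."""
    return bin(a ^ b).count("1")


a = simhash("total = 0\nfor x in items:\n    total += x\n")
b = simhash("total = 0\nfor y in items:\n    total += y\n")
c = simhash("class Parser:\n    def parse(self, text): ...\n")
print(hamming(a, b), hamming(a, c))
```

Snippets that share most of their tokens tend to end up a small Hamming distance apart, though that is a statistical tendency rather than a guarantee. The 64 bits could then be fed into a sound, for example by switching frequency bands on or off, so that nearby fingerprints sound alike.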

It would be neat to be able to just scroll through the code in an editor and, like hearing your echoing footsteps hint at the shape of the empty space around you, listen to the echoes of the code you’re looking at and how it relates to code on a much larger scale.

It would also be nice to see other types of feedback. I was excited to see what would come out of the Nintendo Switch’s “HD Rumble”, but it unfortunately sounds as though it’s almost never used. I’ve thought about making some kind of wearable haptic feedback device to wear on the forearm. That might be a good side project to mess around with at some point. Maybe after some more paid subscriptions.

This has been a free article for the Bzogramming newsletter, a newsletter mostly about an experimental new programming language that I’m building called Bzo. It covers the language's development, as well as the ideas that shape its more interesting parts.

1. In primates (including humans), there is one minor difference between the visual cortex and other cortical regions - a couple of extra sub-layers in layer IV (internal granular layer), which are used to keep color information separate from luminosity information for one extra step. Seeing as this is a fairly minor difference that is nonexistent in non-primates that are still able to see just fine, it seems pretty safe to say this isn’t some groundbreaking exception.

2. Technically speaking, it’s not a perfectly regular grid though. Lower frequencies have a lower resolution than higher frequencies. The “pixel” density in this case is proportional to the frequency.